Azure Data Factory (ADF) is a cloud-based data integration service provided by Microsoft Azure. It allows users to create, schedule, and orchestrate data workflows that move and transform data from various sources to various destinations.

ADF offers a drag-and-drop interface for building and managing data pipelines, allowing users to easily create data-driven workflows that can integrate with a wide variety of sources, such as SQL Server, Azure Blob Storage, Azure Data Lake Storage, and more. Additionally, ADF offers a range of built-in data transformation and processing activities that can be used to transform data as it moves through the pipeline.

ADF also offers a range of monitoring and management features that allow users to track the performance of their data pipelines and troubleshoot issues. With ADF, users can create complex data pipelines that can handle large volumes of data and ensure data integrity throughout the entire process.

Azure Data Factory Glossary

Here are some key terms and definitions related to Azure Data Factory (ADF):

- Azure Data Factory (ADF): A cloud-based data integration service provided by Microsoft Azure that allows users to create, schedule, and orchestrate data workflows that move and transform data from various sources to various destinations.

- Data Pipeline: A series of data processing and movement activities that are arranged in a specific order to move data from source to destination and perform transformations.

- Data Integration: The process of combining data from different sources into a single, unified view that can be analyzed and used to make decisions.

- Extract, Transform, Load (ETL): A process used to extract data from various sources, transform it into a format that is suitable for analysis, and load it into a destination system.

- Data Transformation: The process of converting data from one format to another to make it more usable and relevant for analysis.

- Linked Services: An ADF feature that allows users to create connections to external data sources or services, such as SQL Server, Azure Blob Storage, or Salesforce.

- Data Flows: An ADF feature that allows users to visually create and manage data transformations using a drag-and-drop interface.

- Activities: The basic building blocks of an ADF pipeline that represent a single action, such as copying data, executing a stored procedure, or running a script.

- Triggers: An ADF feature that allows users to schedule the execution of pipelines on a recurring basis or based on specific events.

- Data Factory Integration Runtime (IR): A compute infrastructure used by ADF to run data integration activities and transform data. It can be deployed on Azure or on-premises.

What are the features of Azure Data Factory?

Azure Data Factory has many features. Some of the notable ones are:

1. Accelerating data transformation with code-free data flows

Data Factory provides a data integration and transformation layer that supports digital transformation initiatives. It lets you:

- Enable citizen integrators and data engineers to drive business-led and IT-led analytics and BI.

- Prepare data, construct ETL and ELT processes, and orchestrate and monitor pipelines without writing code.

- Transform faster with intelligent, intent-driven mapping that automates copy activities.

2. Rehosting and extending SSIS in a few clicks

Azure Data Factory can help organizations that want to modernize SQL Server Integration Services (SSIS). With it, you can:

- Realize up to 88 percent cost savings with the Azure Hybrid Benefit.

- Use a compatible service that makes it easy to move all your SSIS packages to the cloud.

- Simplify migration with the deployment wizard, and build hybrid big data and data warehousing solutions with Data Factory cloud data pipelines.

3. Ingesting all your data with built-in connectors

Ingesting data from multiple sources can be expensive and time-consuming, and it often requires multiple solutions. Azure Data Factory offers a single, pay-as-you-go service in which you can:

- Choose from more than 90 built-in connectors to acquire data from big data sources such as Amazon Redshift and Google BigQuery, among others.

- Use the full capacity of the underlying network bandwidth, with throughput of up to 5 GB/s.
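
To put the throughput figure in perspective, here is a small back-of-the-envelope sketch. It assumes the quoted 5 GB/s is sustained for the whole transfer and that 1 TB equals 1,000 GB; real copies are usually slower, so treat the result as a best-case lower bound. The function name is made up for this illustration.

```python
def min_transfer_seconds(size_gb, throughput_gb_per_s=5.0):
    """Best-case transfer time, assuming the peak throughput is sustained."""
    if throughput_gb_per_s <= 0:
        raise ValueError("throughput must be positive")
    return size_gb / throughput_gb_per_s


# Assumption for illustration: 1 TB = 1,000 GB.
best_case = min_transfer_seconds(1000)
print(f"1 TB at 5 GB/s takes at least {best_case:.0f} seconds")
```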

4. Using Azure Synapse Analytics

Azure Synapse Analytics, powered by Data Factory, lets you ingest data from on-premises, hybrid, and multi-cloud sources and transform it with powerful data flows. You can:

- Integrate and transform data in the familiar Data Factory experience within Azure Synapse Pipelines.

- Transform and analyze data code-free with data flows within Azure Synapse Studio.

5. Igniting app experiences with the right data

Data Factory can help independent software vendors (ISVs) enrich their SaaS apps with integrated hybrid data to deliver data-driven user experiences. Pre-built connectors and integration at scale let you focus on your users while Data Factory takes care of the rest.

6. Orchestrating, monitoring, and managing pipeline performance

Azure Data Factory lets you monitor all your activity runs visually and improve operational productivity by proactively setting up alerts on your pipelines. These alerts can then appear within Azure alert groups, so that you are notified in time to prevent downstream or upstream problems.

Using Azure Data Factory for Data Integration

Azure Data Factory (ADF) is a powerful tool for data integration, enabling users to move and transform data from various sources to various destinations. In this section, we will provide an overview of how to use ADF to create a data pipeline, integrate data from various sources, and transform it for analysis.

Creating a Data Pipeline

To create a data pipeline in ADF, you first create a new pipeline and then add activities to it. Activities are the basic building blocks of a pipeline. Each one represents a single action, such as copying data, executing a stored procedure, or running a script.

Integrating Data from Various Sources

ADF provides several options for integrating data from various sources, including Azure Blob Storage, SQL Server, and Salesforce. Linked Services is an ADF feature that lets users create connections to external data sources or services, making it easy to integrate data from different sources.

Transforming Data for Analysis

Data transformation is an essential aspect of data integration: it converts data from one format to another to make it more usable and relevant for analysis. ADF’s Data Flows feature provides a visual interface for creating and managing data transformations, allowing users to apply a range of transformations, such as filtering, aggregation, and join operations.
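
To make the three transformation types concrete, here is a plain Python stand-in. It is not how ADF Data Flows run (those are built visually and execute on Spark); it only shows what filter, join, and aggregate mean on a tiny, made-up data set. The records, field names, and the threshold of 50 are all assumptions for illustration.

```python
from collections import defaultdict

# Hypothetical sample data, invented for this sketch.
orders = [
    {"order_id": 1, "customer_id": "c1", "amount": 120.0},
    {"order_id": 2, "customer_id": "c2", "amount": 15.0},
    {"order_id": 3, "customer_id": "c1", "amount": 80.0},
]
customers = {"c1": "Contoso", "c2": "Fabrikam"}

# Filter: keep only orders of at least 50.
large_orders = [o for o in orders if o["amount"] >= 50]

# Join: attach the customer name to each remaining order.
joined = [{**o, "customer": customers[o["customer_id"]]} for o in large_orders]

# Aggregate: total amount per customer.
totals = defaultdict(float)
for row in joined:
    totals[row["customer"]] += row["amount"]
totals = dict(totals)

print(totals)
```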

Moving Data to Various Destinations

ADF can move data to various destinations, including Azure Synapse Analytics, Azure SQL Database, and SQL Server. The Copy Data activity is the main way to move data to these destinations, and existing SSIS packages that load data can be run with the Execute SSIS Package activity.

Conclusion

ADF is a powerful tool for data integration, enabling users to create data pipelines, integrate data from various sources, transform data for analysis, and move data to various destinations. With its drag-and-drop interface and support for a wide range of data sources and destinations, ADF can help organizations improve their data integration and transformation capabilities.

Azure Data Factory: Working Process

Data Factory consists of a series of interconnected systems that provide a complete end-to-end platform for data engineers.

1. Connect and collect

Enterprises hold various types of data in disparate sources: on-premises and in the cloud, structured, unstructured, and semi-structured, all arriving at different intervals and speeds. The first step in building an information production system is to connect to all the required sources of data and processing, such as software-as-a-service (SaaS) services, databases, file shares, and FTP web services. The next step is to move the data as needed to a centralized location for subsequent processing.

With Data Factory, you can use the Copy Activity in a data pipeline to move data from both on-premises and cloud source data stores to a centralized data store in the cloud for further analysis.

2. Transform and enrich

After the data is present in a centralized data store in the cloud, process or transform it by using ADF mapping data flows. Data flows enable data engineers to build and maintain data transformation graphs that execute on Spark without needing to understand Spark clusters or Spark programming.

3. CI/CD and publish

Data Factory provides full support for CI/CD of your data pipelines using Azure DevOps and GitHub. This allows you to develop and deliver your ETL processes before publishing the finished product. After the raw data has been refined into a business-ready consumable form, load it into Azure Synapse Analytics (formerly Azure SQL Data Warehouse), Azure SQL Database, Azure Cosmos DB, or any other analytics engine.

4. Monitor

After you build and deploy a data integration pipeline, monitor the scheduled activities and pipelines for success and failure rates. Azure Data Factory has built-in support for pipeline monitoring via Azure Monitor, API, PowerShell, Azure Monitor logs, and health panels on the Azure portal.
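
As a rough illustration of what "success and failure rates" means, the sketch below computes them from a list of run records. The records are invented for this example and their shape is an assumption; in practice the run data would come from one of the monitoring channels listed above.

```python
from collections import Counter

# Hypothetical run records; the field names are assumptions for this sketch.
runs = [
    {"pipeline": "CopyPipeline", "status": "Succeeded"},
    {"pipeline": "CopyPipeline", "status": "Succeeded"},
    {"pipeline": "CopyPipeline", "status": "Failed"},
    {"pipeline": "CopyPipeline", "status": "Succeeded"},
]


def status_rates(run_records):
    """Return the share of runs per status, for example {'Succeeded': 0.75}."""
    counts = Counter(record["status"] for record in run_records)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {status: count / total for status, count in counts.items()}


rates = status_rates(runs)
print(rates)
```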

Moving on, in the next section, we will learn about the major concepts of the Azure Data Factory.

Azure Data Factory Important Concepts

The essential components of Azure Data Factory are listed below. These components work together to provide a framework for composing data-driven processes.

1. Pipeline

A data factory can have one or more pipelines. A pipeline is a logical grouping of activities that together perform a unit of work, and it lets you manage those activities as a set. The activities in a pipeline can be chained together to run sequentially, or they can run independently in parallel.
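
The difference between chained and parallel activities can be sketched with a small dependency graph. This is not Data Factory's scheduler, only an illustration using Python's standard `graphlib` module (Python 3.9 or later); the activity names are hypothetical. Activities that land in the same "wave" have no unmet dependencies and could run in parallel, while later waves must wait.

```python
from graphlib import TopologicalSorter

# Hypothetical activities, each mapped to the activities it depends on.
depends_on = {
    "CopySales": [],
    "CopyCustomers": [],
    "JoinData": ["CopySales", "CopyCustomers"],
    "LoadWarehouse": ["JoinData"],
}


def execution_waves(graph):
    """Group activities into waves that respect the dependencies."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        waves.append(ready)
        sorter.done(*ready)
    return waves


waves = execution_waves(depends_on)
for number, wave in enumerate(waves, start=1):
    print(f"wave {number}: {', '.join(wave)}")
```

Here the two copy activities are independent and share the first wave, while JoinData and LoadWarehouse are chained after them.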

2. Activity

An activity represents a processing step in a pipeline. For example, you can use a copy activity to copy data from one data store to another. Similarly, you can use a Hive activity, which runs a Hive query on an Azure HDInsight cluster, to transform or analyze your data. Data Factory supports three types of activities:

- Data movement activities

- Data transformation activities

- Control activities.

3. Datasets

Datasets represent data structures within the data stores. They simply point to or reference the data you want to use in your activities as inputs or outputs.

4. Linked services

Linked services are much like connection strings: they define the connection information that Data Factory needs to connect to external resources. Linked services are used for two purposes in Data Factory:

- To represent a data store, such as a SQL Server database, an Oracle database, a file share, or an Azure Blob Storage account.

- To represent a compute resource that can host the execution of an activity.

5. Control flow

Control flow is the orchestration of pipeline activities. It includes:

- Chaining activities in a sequence

- Defining parameters at the pipeline level

- Passing arguments when the pipeline is invoked on demand or from a trigger.

It also includes custom-state passing and looping containers, that is, ForEach iterators.

6. Integration Runtime (IR)

The integration runtime is the compute infrastructure that Data Factory uses to provide data integration capabilities across network environments. It moves data between source and destination data stores with scalable data transfer, executes visually authored data flows in a scalable way on a Spark compute runtime, and natively executes SSIS packages in a managed Azure compute environment.
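
To tie the control flow ideas from this section together (pipeline-level parameters, passing arguments, chaining, and a ForEach loop), here is a hedged sketch of a pipeline definition built as a Python dictionary. It is loosely modeled on the JSON that Data Factory shows in its code view, so treat the exact field names as assumptions to check against the official schema. The pipeline, activity, and parameter names are hypothetical, and `resolve_arguments` is a helper invented here to mimic how supplied arguments override parameter defaults.

```python
import json

# Hypothetical pipeline; field names follow ADF's JSON style but are unverified.
pipeline = {
    "name": "CopyManyFiles",
    "properties": {
        "parameters": {
            "fileNames": {"type": "Array", "defaultValue": ["emp.txt"]},
        },
        "activities": [
            {
                "name": "ForEachFile",
                "type": "ForEach",
                "typeProperties": {
                    # Loop over the pipeline-level parameter.
                    "items": {
                        "value": "@pipeline().parameters.fileNames",
                        "type": "Expression",
                    },
                    "isSequential": False,
                    "activities": [{"name": "CopyOneFile", "type": "Copy"}],
                },
            },
            {
                "name": "WaitAfterCopy",
                "type": "Wait",
                # Chaining: run only after the loop has succeeded.
                "dependsOn": [
                    {"activity": "ForEachFile", "dependencyConditions": ["Succeeded"]}
                ],
                "typeProperties": {"waitTimeInSeconds": 5},
            },
        ],
    },
}


def resolve_arguments(pipeline_definition, arguments):
    """Merge supplied arguments over the parameter defaults (illustrative helper)."""
    parameters = pipeline_definition["properties"].get("parameters", {})
    resolved = {name: spec.get("defaultValue") for name, spec in parameters.items()}
    resolved.update(arguments)
    return resolved


print(json.dumps(pipeline, indent=2))
print(resolve_arguments(pipeline, {"fileNames": ["a.txt", "b.txt"]}))
```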

So far, we have covered the overview, features, and core concepts of Azure Data Factory. Now it’s time to put them into practice. In the next section, we will create a data factory.

Creating a data factory by using the Azure Data Factory UI

To create Data Factory instances, the user account that you use to sign in to Azure must be one of the following:

- A member of the Contributor role

- A member of the Owner role

- An administrator of the Azure subscription.

Azure Storage account

Get the storage account name

To get the name of your storage account:

- Go to the Azure portal and sign in using your Azure username and password.

- From the Azure portal menu, select All services. Then select Storage, and select Storage accounts.

- On the Storage accounts page, filter for your storage account, and then select it.

Create a blob container

- On the storage account page, select Overview, and then select Containers.

- On the <Account name> Containers page’s toolbar, select Container.

- In the New container dialog box, enter adftutorial for the name, and then select OK.

Adding an input folder and file for the blob container

Before beginning, open a text editor such as Notepad, and create a file named emp.txt with the following content:

emp.txt

John, Doe

Jane, Doe

Then, save the file in the C:\ADFv2QuickStartPSH folder. After that, follow these steps in the Azure portal:

- On the <Account name> Containers page where you left off, select adftutorial from the updated list of containers. If you closed the window, sign in to the Azure portal again, select All services from the portal menu, select Storage and then Storage accounts, select your storage account, and then select Containers and adftutorial.

- On the adftutorial container page’s toolbar, select Upload.

- On the Upload blob page, select the Files box, and then browse to and select the emp.txt file.

- Expand the Advanced heading.

- In the Upload to folder box, enter input.

- Select the Upload button.

- Select the Close icon to close the Upload blob page.
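
If you prefer to generate the input file with a script instead of a text editor, the following sketch writes the same two lines to emp.txt. For portability it uses a temporary folder rather than C:\ADFv2QuickStartPSH, which is an assumption of this example; the upload itself still happens in the portal as described above. The helper name is made up for illustration.

```python
import tempfile
from pathlib import Path


def write_emp_file(folder):
    """Create emp.txt with the two sample rows used in this tutorial."""
    path = Path(folder) / "emp.txt"
    path.write_text("John, Doe\nJane, Doe\n", encoding="utf-8")
    return path


with tempfile.TemporaryDirectory() as tmp:
    emp_path = write_emp_file(tmp)
    content = emp_path.read_text(encoding="utf-8")

# Where the file ends up after the upload steps: container/folder/file.
blob_path = "/".join(["adftutorial", "input", "emp.txt"])
print(content)
print(blob_path)
```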

Creating a data factory

- Launch the Microsoft Edge or Google Chrome web browser.

- Open the Azure portal.

- From the Azure portal menu, select Create a resource.

- Select Integration, and then select Data Factory.

- On the Create Data Factory page, under the Basics tab, select the Azure Subscription in which you want to create the data factory.

- For Resource Group, either select an existing resource group from the drop-down list, or select Create new and enter the name of a new resource group.

- For Region, select the location for the data factory. The list shows only the locations that Data Factory supports, and the one you pick is where your Azure Data Factory metadata will be stored.

- For Name, enter ADFTutorialDataFactory. The name of the data factory must be globally unique, so if the name is already taken, choose a different one.

- For Version, select V2.

- Select Next: Git configuration, and then select the Configure Git later check box.

- Select Review + create, and after validation passes, select Create. When the creation is complete, select Go to resource to open the Data Factory page.

- Select the Author & Monitor tile to start the Azure Data Factory user interface (UI) application on a separate browser tab.
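
Because the data factory name has to be unique, one simple approach is to append a short random suffix to the tutorial name. The sketch below is only a convenience, not part of the portal workflow; the helper name and the eight-character suffix are assumptions, and you should check the current Data Factory naming rules before relying on the format.

```python
import uuid


def unique_factory_name(base="ADFTutorialDataFactory"):
    """Append a short random suffix so the name is unlikely to collide."""
    suffix = uuid.uuid4().hex[:8]  # eight hexadecimal characters
    return f"{base}-{suffix}"


factory_name = unique_factory_name()
print(factory_name)
```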

Creating a linked service

The linked service contains the connection information that the Data Factory service uses at runtime to connect to the data store. Here, it links your Azure Storage account to the data factory.

- On the Azure Data Factory UI page, open the Manage tab.

- On the Linked services page, select + New to create a new linked service.

- On the New Linked Service page, select Azure Blob Storage, and then select Continue.

- On the New Linked Service (Azure Blob Storage) page, complete the following steps:

- For Name, enter AzureStorageLinkedService.

- For Storage account name, select the name of your Azure Storage account.

- Select Test connection to confirm that the Data Factory service can connect to the storage account.

- Select Create to save the linked service.
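
Behind the UI, a linked service is stored as a small JSON document. The sketch below builds an approximation of it as a Python dictionary so you can see which pieces the steps above fill in. The field names are modeled on ADF's JSON code view and should be treated as assumptions; the account name and key are placeholders, and a real key should never be hard-coded.

```python
import json


def blob_linked_service(name, account_name):
    """Approximate JSON shape of an Azure Blob Storage linked service."""
    connection_string = (
        "DefaultEndpointsProtocol=https;"
        f"AccountName={account_name};"
        "AccountKey=<accountKey>;"  # placeholder, not a real secret
        "EndpointSuffix=core.windows.net"
    )
    return {
        "name": name,
        "properties": {
            "type": "AzureBlobStorage",
            "typeProperties": {"connectionString": connection_string},
        },
    }


linked_service = blob_linked_service("AzureStorageLinkedService", "<accountName>")
print(json.dumps(linked_service, indent=2))
```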

Creating datasets

Here, you create two datasets of type AzureBlob: InputDataset and OutputDataset.

1. InputDataset

- The input dataset represents the source data in the input folder. In it, you specify the blob container (adftutorial), the folder (input), and the file (emp.txt) that contain the source data.

2. OutputDataset

- The output dataset represents the data that is copied to the destination. In it, you specify the blob container (adftutorial), the folder (output), and the file to which the data is copied. Each run of a pipeline has a unique ID associated with it, which you can access by using the system variable RunId. This is useful for giving each run’s output file a distinct name.

To create the datasets:

- Select the Author tab.

- Select the + (plus) button, and then select Dataset.

- On the New Dataset page, select Azure Blob Storage, and then select Continue.

- On the Select Format page, choose the format type of your data, and then select Continue.

- On the Set Properties page, complete the following steps:

- Enter InputDataset under Name.

- Select AzureStorageLinkedService for Linked service.

- Select the Browse button for File path.

- In the Choose a file or folder window, browse to the input folder in the adftutorial container, select the emp.txt file, and then select OK.

- Repeat the steps to create the output dataset:

- Select the + (plus) button, and then select Dataset.

- On the New Dataset page, select Azure Blob Storage, and then select Continue.

- On the Select Format page, choose the format type of your data, and then select Continue.

- On the Set Properties page, specify OutputDataset for the name and select AzureStorageLinkedService as the linked service.

- Under File path, enter adftutorial/output, and then select OK. If the output folder doesn’t exist, the copy activity creates it at runtime.
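
The two datasets created above differ only in their name and file path, which the following sketch makes explicit. It builds approximate JSON definitions as Python dictionaries. The field names follow ADF's JSON code view but are assumptions, and the Binary format type is chosen here only for illustration, since the steps leave the format up to you.

```python
import json


def blob_dataset(name, container, folder, file_name=None,
                 linked_service="AzureStorageLinkedService"):
    """Approximate JSON shape of a blob dataset (Binary format assumed)."""
    location = {
        "type": "AzureBlobStorageLocation",
        "container": container,
        "folderPath": folder,
    }
    if file_name is not None:
        location["fileName"] = file_name  # omit to point at the whole folder
    return {
        "name": name,
        "properties": {
            "linkedServiceName": {
                "referenceName": linked_service,
                "type": "LinkedServiceReference",
            },
            "type": "Binary",
            "typeProperties": {"location": location},
        },
    }


input_dataset = blob_dataset("InputDataset", "adftutorial", "input", "emp.txt")
output_dataset = blob_dataset("OutputDataset", "adftutorial", "output")
print(json.dumps([input_dataset, output_dataset], indent=2))
```

Both datasets point at the same linked service, so the connection details live in one place.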

Creating a pipeline

Here, we create and validate a pipeline with a copy activity that uses the input and output datasets. The copy activity copies data from the file specified in the input dataset settings to the file specified in the output dataset settings. If the input dataset specifies only a folder, the copy activity copies all the files in the source folder to the destination.

- Select the + (plus) button, and then select Pipeline.

- In the General panel under Properties, specify CopyPipeline for Name. Then collapse the panel by clicking the Properties icon in the top-right corner.

- In the Activities toolbox, expand Move & Transform, and drag the Copy Data activity to the pipeline designer surface.

- Switch to the Source tab in the copy activity settings, and select InputDataset for Source Dataset.

- Switch to the Sink tab in the copy activity settings, and select OutputDataset for Sink Dataset.

- Click Validate on the pipeline toolbar above the canvas to validate the pipeline settings. To close the validation output, select the Validation button in the top-right corner.
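
For reference, the pipeline built in these steps corresponds roughly to the definition sketched below, together with a tiny check in the spirit of the Validate button. The JSON field names are modeled on ADF's code view and are assumptions; the activity name CopyFromBlobToBlob and the `missing_datasets` helper are made up for this example, and the real validation in the UI checks far more than dataset references.

```python
import json

# Approximate definition of the pipeline from the steps above.
copy_pipeline = {
    "name": "CopyPipeline",
    "properties": {
        "activities": [
            {
                "name": "CopyFromBlobToBlob",  # hypothetical activity name
                "type": "Copy",
                "inputs": [
                    {"referenceName": "InputDataset", "type": "DatasetReference"}
                ],
                "outputs": [
                    {"referenceName": "OutputDataset", "type": "DatasetReference"}
                ],
                "typeProperties": {
                    "source": {"type": "BinarySource"},
                    "sink": {"type": "BinarySink"},
                },
            }
        ]
    },
}


def missing_datasets(pipeline, known_datasets):
    """Return dataset names the pipeline references but that do not exist."""
    referenced = set()
    for activity in pipeline["properties"]["activities"]:
        for ref in activity.get("inputs", []) + activity.get("outputs", []):
            referenced.add(ref["referenceName"])
    return sorted(referenced - set(known_datasets))


print(json.dumps(copy_pipeline, indent=2))
print(missing_datasets(copy_pipeline, ["InputDataset", "OutputDataset"]))
```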

Azure Data Factory Pricing

Azure Data Factory follows a pay-only-for-what-you-use model with no upfront cost. It offers a range of cloud data integration capabilities to fit your scale, infrastructure, compatibility, performance, and budget requirements.

1. Pricing for Data pipelines

Integrating data from cloud and hybrid data sources, at scale.

Pricing starts from:

₹72.046 / 1,000 activity runs per month

2. Pricing for SQL Server Integration Services

Moving the existing on-premises SQL Server Integration Services projects easily to a fully-managed environment in the cloud.

For SQL Server Integration Services integration runtime nodes, pricing starts from:

₹60.498 /hour
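
To see how the two starting rates above combine, here is a small worked estimate. It uses only the figures quoted in this section (₹72.046 per 1,000 activity runs and ₹60.498 per SSIS integration runtime node hour). Because these are "starts from" prices, and a real bill includes other meters such as data movement and data flow execution, treat the result as a rough lower bound. The workload numbers are invented for illustration.

```python
from decimal import Decimal

# Starting rates quoted in this article, in Indian rupees.
RATE_PER_1000_RUNS = Decimal("72.046")
SSIS_RATE_PER_NODE_HOUR = Decimal("60.498")


def estimate_monthly_cost(activity_runs, ssis_node_hours):
    """Rough lower-bound estimate from the two starting rates only."""
    runs_cost = Decimal(activity_runs) / Decimal(1000) * RATE_PER_1000_RUNS
    ssis_cost = Decimal(ssis_node_hours) * SSIS_RATE_PER_NODE_HOUR
    return (runs_cost + ssis_cost).quantize(Decimal("0.01"))


# Hypothetical workload: 50,000 activity runs and 100 SSIS node hours.
cost = estimate_monthly_cost(50_000, 100)
print(f"Estimated monthly cost: ₹{cost}")
```

For this workload, the activity runs come to ₹3,602.30 and the SSIS node hours to ₹6,049.80, for a total of ₹9,652.10.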

Final Words

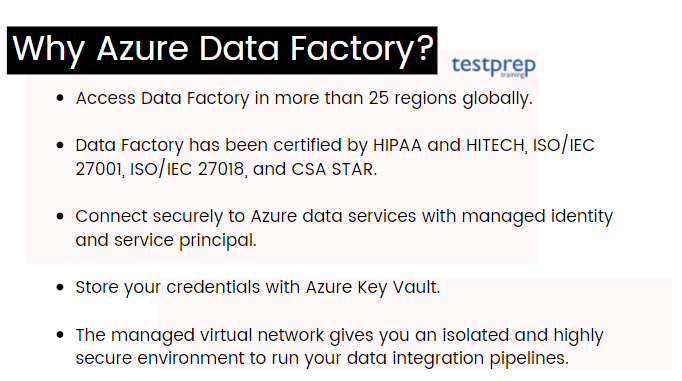

Above, we covered the overview and related services of Azure Data Factory. Data Factory, which is built to deliver data-rich digital experiences, is available in more than 25 regions. Many top companies, such as Adobe and Concentre, use Azure Data Factory, which helps them:

- Rely on their own core competencies without having to build the underlying infrastructure.

- Create an end-to-end data management process by running SSIS ETL workloads in a managed SSIS environment within Data Factory.

Beyond this, the service provides an easy, reliable, and intuitive visual environment. Go through this article to gain an understanding of the Data Factory services, and explore the Microsoft documentation to get started. I hope the above information helps you start your journey with Azure Data Factory.