Amazon SageMaker can connect to Amazon S3 buckets from Jupyter notebooks using Python and the AWS SDK (boto3). It also offers its own high-level Python API for building models. Because it works with popular deep learning frameworks such as TensorFlow, PyTorch, and MXNet, it shortens model development time.
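
Here is a minimal sketch of the boto3 route. The helper lists the training files under an S3 prefix. The bucket and prefix names are hypothetical. The client is passed in as an argument so the function can be exercised without AWS credentials. In a notebook you would pass `boto3.client("s3")`.

```python
from typing import Any, List


def list_training_files(s3_client: Any, bucket: str, prefix: str) -> List[str]:
    """Return every object key under `prefix` in `bucket`.

    `s3_client` is expected to behave like boto3.client("s3"). The
    list_objects_v2 paginator is used because a single call returns at
    most 1,000 keys.
    """
    keys: List[str] = []
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # "Contents" is absent from a page when no objects match.
        for obj in page.get("Contents", []):
            keys.append(obj["Key"])
    return keys
```

Usage in a notebook might look like `list_training_files(boto3.client("s3"), "my-bucket", "train/")`, where both names are placeholders.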

Advantages of SageMaker:

- During training, it provides a debugger and can tune hyperparameters over predefined ranges.

- It helps you deploy an end-to-end ML pipeline quickly.

- It can deploy ML models to edge devices using SageMaker Neo.

- For training, you choose the ML compute instance type that runs the job.

What is a Machine Learning Pipeline?

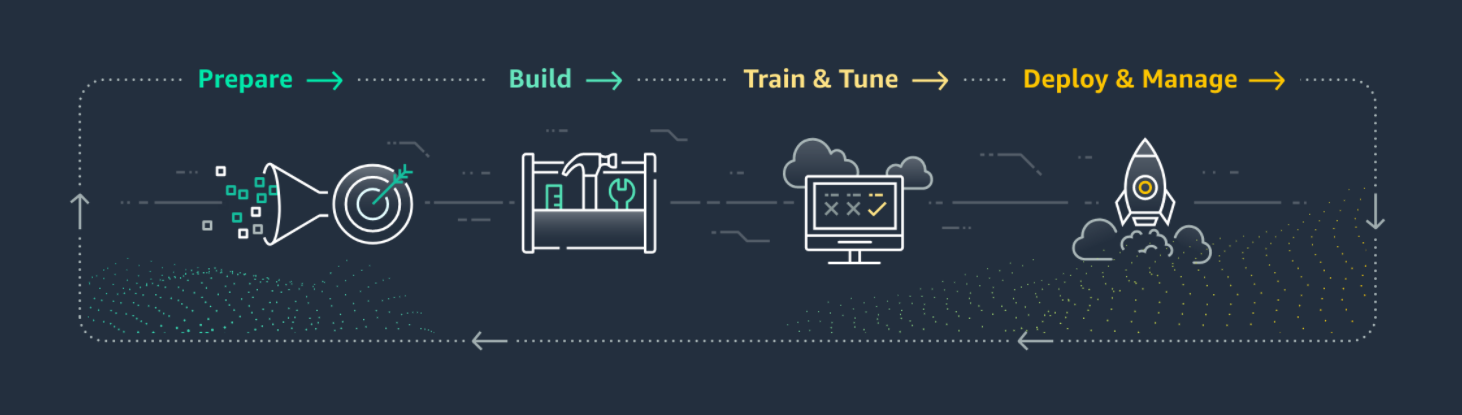

A machine learning pipeline is the sequence of steps that executes a machine learning job. It helps you develop, manage, and optimise the ML process. A typical pipeline has the following stages:

Fetching Data: Get real-time data from Kafka streams or from data repositories. For SageMaker to set up the training job, the data is typically staged in an Amazon S3 bucket.

Data Wrangling: Pre-processing covers wrangling the data and preparing it for training. Data wrangling is one of the most time-consuming parts of a machine learning project. Amazon SageMaker Processing runs jobs that pre-process data for training, post-process data after inference, and perform feature engineering and model evaluation at scale.

Model Training: The pre-processing pipeline prepares the data for both training and testing. Amazon SageMaker already includes popular built-in algorithms, so you can import the library and use them directly. A typical SageMaker training pipeline works as follows:

- Import the training data from the Amazon S3 bucket.

- Training begins when SageMaker launches ML compute instances that pull the training code image from Amazon Elastic Container Registry (ECR).

- The trained model artefacts are stored in the model artefacts S3 bucket.
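
The stages above can be pictured as a chain of functions, each feeding its output to the next. This sketch is purely local and illustrative. `fetch`, `preprocess`, `train`, and `store` are hypothetical stand-ins for reading from Kafka or S3, a SageMaker Processing job, a training job, and the artefact upload. The S3 URI is a placeholder.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

Record = Dict[str, Optional[float]]


@dataclass
class Pipeline:
    """Runs stages in order, passing each stage's output to the next."""
    stages: List[Callable] = field(default_factory=list)

    def add(self, stage: Callable) -> "Pipeline":
        self.stages.append(stage)
        return self

    def run(self, payload):
        for stage in self.stages:
            payload = stage(payload)
        return payload


def fetch(source: List[Record]) -> List[Record]:
    # Hypothetical stand-in for reading from Kafka or an S3 bucket.
    return list(source)


def preprocess(rows: List[Record]) -> List[Record]:
    # Simple data wrangling: drop rows whose label is missing.
    return [r for r in rows if r.get("y") is not None]


def train(rows: List[Record]) -> Dict[str, float]:
    # Fit y = w * x by least squares through the origin.
    num = sum(r["x"] * r["y"] for r in rows)
    den = sum(r["x"] ** 2 for r in rows)
    return {"w": num / den}


def store(model: Dict[str, float]) -> Dict[str, object]:
    # Hypothetical stand-in for writing model artefacts to an S3 output path.
    return {"artefact_uri": "s3://example-bucket/output/model.json", "model": model}
```

For example, `Pipeline().add(fetch).add(preprocess).add(train).add(store).run(rows)` runs all four stages in order.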

Why is Amazon SageMaker Important?

Amazon SageMaker has various useful tools that help to streamline the ML workflow. The following are some examples:

Model Evaluation: With SageMaker, you can evaluate a trained model in two ways: offline testing or online testing. In offline testing, you send requests from a Jupyter notebook to the model's endpoint using historical data that was set aside earlier, either as a validation set or through cross-validation. In online testing, the model is deployed and a traffic threshold controls what share of live requests it handles. If everything works well, the threshold is raised to 100 per cent.
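
Offline testing with cross-validation can be sketched locally. The helper below is a generic k-fold split written for illustration. It is not a SageMaker API. It averages a score over k folds. `fit` and `score` are hypothetical callables. In the SageMaker setting they would wrap training and requests to the model's endpoint.

```python
from typing import Callable, Iterator, List, Sequence, Tuple


def k_fold_indices(n: int, k: int) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation.

    The first n % k folds get one extra item, so all n indices are used.
    """
    if not 2 <= k <= n:
        raise ValueError("k must be between 2 and n")
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, val
        start += size


def cross_validate(xs: Sequence, ys: Sequence, fit: Callable,
                   score: Callable, k: int) -> float:
    """Train on k-1 folds, score on the held-out fold, and average the k scores."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        scores.append(score(model, [xs[i] for i in val_idx], [ys[i] for i in val_idx]))
    return sum(scores) / len(scores)
```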

Model Deployment: Once the model beats the baseline, it is time to deploy it. Deployment needs two inputs: the path of the trained model artefacts and the registry path of the Docker image that contains the inference code. In SageMaker, you create the model with the CreateModel API, define the configuration of an HTTPS endpoint, and then create the endpoint.
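
The three deployment calls map onto the boto3 SageMaker client methods `create_model`, `create_endpoint_config`, and `create_endpoint`. The sketch below makes them in order. The model name, image URI, role ARN, and default instance type are assumptions to adapt. The client is injected so the sequence can be checked without touching AWS.

```python
from typing import Any


def deploy_model(sm_client: Any, name: str, image_uri: str,
                 model_data_url: str, role_arn: str,
                 instance_type: str = "ml.m5.large") -> str:
    """Create a model, an endpoint configuration, and an HTTPS endpoint.

    `sm_client` is expected to behave like boto3.client("sagemaker").
    Returns the endpoint name.
    """
    # 1. Register the model: artefacts in S3 plus the inference image.
    sm_client.create_model(
        ModelName=name,
        PrimaryContainer={"Image": image_uri, "ModelDataUrl": model_data_url},
        ExecutionRoleArn=role_arn,
    )
    # 2. Describe how the endpoint should host the model.
    sm_client.create_endpoint_config(
        EndpointConfigName=f"{name}-config",
        ProductionVariants=[{
            "VariantName": "AllTraffic",
            "ModelName": name,
            "InitialInstanceCount": 1,
            "InstanceType": instance_type,
            "InitialVariantWeight": 1.0,
        }],
    )
    # 3. Create the endpoint; provisioning continues asynchronously.
    sm_client.create_endpoint(EndpointName=name, EndpointConfigName=f"{name}-config")
    return name
```

Calling this with a real `boto3.client("sagemaker")` creates billable resources. The endpoint only becomes usable once its status reaches `InService`.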

Monitoring: The model's performance is tracked in real time, the raw data is recorded in S3, and the deviation in performance is calculated. This shows the point at which drift began. The model is then retrained on the newer data, and the results are saved to a bucket.
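
One simple way to quantify that deviation is to compare live feature statistics with a baseline. The sketch below uses a mean-shift rule measured in baseline standard deviations. This rule and its default threshold of 3 are illustrative assumptions. They are not the statistic SageMaker Model Monitor computes.

```python
from statistics import mean, pstdev
from typing import Dict, List


def drifted_features(baseline: Dict[str, List[float]],
                     live: Dict[str, List[float]],
                     threshold: float = 3.0) -> List[str]:
    """Return features whose live mean moved more than `threshold`
    baseline standard deviations away from the baseline mean."""
    flagged = []
    for name, base_values in baseline.items():
        mu, sigma = mean(base_values), pstdev(base_values)
        live_mu = mean(live[name])
        if sigma > 0:
            shift = abs(live_mu - mu) / sigma
        else:
            # A constant baseline feature: any change at all counts as drift.
            shift = 0.0 if live_mu == mu else float("inf")
        if shift > threshold:
            flagged.append(name)
    return flagged
```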

Data Preparation using SageMaker

A machine learning model depends entirely on its data: the higher the quality of the data, the better the model performs.

Amazon SageMaker Ground Truth makes data labelling straightforward. The user can choose a private, public, or vendor workforce. With a private workforce, the user's own team does the labelling. With a vendor workforce, a third-party vendor does it, and confidentiality agreements may be required. With the public workforce, Amazon Mechanical Turk workers carry out the labelling task, and the service reports the success or failure of labelling jobs. The steps are as follows:

- Put the data in an S3 bucket and create a manifest file for the labelling task to use.

- Select the workforce type to create a labelling workforce.

- Create a labelling task by selecting a job type like Image Classification, Text Classification, Bounding Box, and so on.

- For example, if the selected task is Bounding Box, then draw a bounding box around your desired object and label it. View the confidence score and other data to help you visualise your results.
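
In Ground Truth, the manifest file from the first step is a JSON Lines file. Each line references one data object. This sketch assumes image data stored in S3 and uses the `source-ref` key. The bucket and object keys are hypothetical. It produces only the manifest text; uploading it to S3 is left out.

```python
import json
from typing import Iterable


def build_input_manifest(bucket: str, keys: Iterable[str]) -> str:
    """Return a JSON Lines input manifest with one object per line.

    Each line points at one S3 object through the "source-ref" field.
    """
    return "".join(json.dumps({"source-ref": f"s3://{bucket}/{key}"}) + "\n" for key in keys)
```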

Hyperparameter Tuning in SageMaker

Parameters that define the model's architecture and control its training are known as hyperparameters. The process of finding their best values is known as hyperparameter tuning. SageMaker supports the following search methods:

Random Search: As the name suggests, hyperparameter combinations are selected at random, and a training job is run for each one. Because the combinations are independent of each other, SageMaker can run these jobs in parallel without one interfering with another.

Bayesian Search: SageMaker also offers Bayesian search. It uses the performance of the hyperparameter combinations tried so far to choose the next combination to evaluate within the given ranges.
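
Random search fits in a few lines. `objective` is a hypothetical stand-in for launching a training job and reading back its objective metric. The sketch runs trials one after another, whereas SageMaker can run them in parallel. Bayesian search would replace the independent sampling with a model that picks each new point based on earlier scores.

```python
import random
from typing import Callable, Dict, Tuple

Ranges = Dict[str, Tuple[float, float]]


def random_search(objective: Callable[[Dict[str, float]], float],
                  ranges: Ranges, n_trials: int, seed: int = 0
                  ) -> Tuple[Dict[str, float], float]:
    """Sample `n_trials` independent combinations uniformly from `ranges`
    and return the (params, score) pair that maximises `objective`."""
    rng = random.Random(seed)
    best_params: Dict[str, float] = {}
    best_score = float("-inf")
    for _ in range(n_trials):
        params = {name: rng.uniform(lo, hi) for name, (lo, hi) in ranges.items()}
        # Trials do not depend on each other, which is what allows parallel runs.
        score = objective(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score
```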

Steps for Hyperparameter Tuning

- When creating a hyperparameter tuning job, supply the metrics used to evaluate each training job. You can define up to 20 metrics for a single tuning job. Each metric has a unique name and a regular expression that extracts its value from the training logs.

- Declare the hyperparameter ranges in the ParameterRanges JSON object, grouped by parameter type (integer, continuous, or categorical).

- Create a SageMaker notebook and create a Boto3 client for SageMaker.

- Set the bucket and the data output location, and then launch the hyperparameter tuning job configured in the first two steps.

- Monitor the progress of the training jobs that the tuning job runs concurrently. Find the best model in the SageMaker console by selecting the best training job.
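
Steps 1 and 2 can be sketched as plain Python structures. The dictionary follows the shape of the `HyperParameterTuningJobConfig` argument to boto3's `create_hyper_parameter_tuning_job`. The training job definition is omitted; for custom algorithms, the metric definitions go in its `AlgorithmSpecification`. The hyperparameter names (`num_round`, `eta`, `booster`), the log line format, and the metric regex are hypothetical examples.

```python
import re
from typing import Dict, List, Optional


def tuning_job_config(metric_name: str, max_jobs: int, max_parallel: int) -> Dict:
    """Build a HyperParameterTuningJobConfig-shaped dict (example ranges only)."""
    return {
        "Strategy": "Bayesian",
        "HyperParameterTuningJobObjective": {"Type": "Maximize", "MetricName": metric_name},
        "ResourceLimits": {
            "MaxNumberOfTrainingJobs": max_jobs,
            "MaxParallelTrainingJobs": max_parallel,
        },
        # Ranges are grouped by type; numeric bounds are passed as strings.
        "ParameterRanges": {
            "IntegerParameterRanges": [
                {"Name": "num_round", "MinValue": "50", "MaxValue": "500", "ScalingType": "Linear"}
            ],
            "ContinuousParameterRanges": [
                {"Name": "eta", "MinValue": "0.001", "MaxValue": "0.5", "ScalingType": "Logarithmic"}
            ],
            "CategoricalParameterRanges": [
                {"Name": "booster", "Values": ["gbtree", "dart"]}
            ],
        },
    }


# Each metric has a unique name and a regex whose first group captures the value.
METRIC_DEFINITIONS: List[Dict[str, str]] = [
    {"Name": "validation:accuracy", "Regex": r"validation-accuracy=([0-9.]+)"}
]


def extract_metric(log_line: str, regex: str) -> Optional[float]:
    """Apply a metric regex to one log line, as the tuning service does with training logs."""
    match = re.search(regex, log_line)
    return float(match.group(1)) if match else None
```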

Best Practices for Amazon SageMaker

Specifying the number of hyperparameters: SageMaker allows up to 20 hyperparameters in a tuning job. Limiting the search to fewer of them reduces the search space and helps find the optimal values for a model more quickly.

Defining the hyperparameter ranges: A wider range makes it possible to find the best values, but searching it takes longer. Restricting each range, and therefore the search space, to plausible values gives the best result for the time spent.

Logarithmic scaling of hyperparameters: If a hyperparameter's search space is small, use linear scaling. If it is large, spanning several orders of magnitude, use logarithmic scaling to reduce tuning time.
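
Logarithmic scaling amounts to sampling uniformly in log space. The helper below sketches that idea; it is not SageMaker's internal sampler. The 1e-4 to 1 range is an arbitrary example of a learning-rate-like hyperparameter.

```python
import math
import random


def sample_log_uniform(rng: random.Random, low: float, high: float) -> float:
    """Sample from [low, high] so that each order of magnitude is equally likely."""
    if not 0 < low < high:
        raise ValueError("log scaling needs 0 < low < high")
    return math.exp(rng.uniform(math.log(low), math.log(high)))
```

Over the range 1e-4 to 1, linear sampling would put only about 1% of samples below 0.01. Logarithmic sampling puts about half of them there, so small values get explored properly.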

Identifying the optimal number of concurrent training jobs: Running more jobs concurrently finishes the tuning faster. However, a tuning job that uses Bayesian search relies on the results of earlier runs to choose later ones. In other words, running one training job at a time produces the best results for the least amount of compute time.

Running training jobs on multiple instances: When a training job runs on multiple instances, hyperparameter tuning uses the last-reported objective metric from those instances. Design distributed training jobs so that the objective metric they log is the one you want to optimise.

What is Amazon SageMaker Studio?

SageMaker Studio is a machine learning development environment built around a fully fledged integrated development environment (IDE). It brings together all of SageMaker's core features. In SageMaker Studio, the user can write code in a notebook environment and perform visualisation, debugging, model tracking, and model performance monitoring, all in a single window. It also makes use of the SageMaker capabilities listed below.

Amazon SageMaker Debugger

SageMaker Debugger tracks tensor values, such as feature vectors and weights, as well as hyperparameter values during training. It can check for exploding tensors and vanishing gradients, store the tensor values in an S3 bucket, and write the debugging job logs to CloudWatch. To choose which tensors are saved and how often, include SaveConfig from the Debugger SDK at the points where the tensor values must be verified. A SessionHook attaches at the start of each debugged job.
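
Debugger's built-in rules inspect saved tensors for problems such as exploding or vanishing gradients. The function below is a simplified, stand-alone illustration of that kind of check over per-step gradient norms. It does not use the Debugger SDK, and its thresholds are arbitrary assumptions.

```python
import math
from typing import Dict, List, Sequence


def check_gradients(grad_norms: Sequence[float], explode_at: float = 1e3,
                    vanish_at: float = 1e-7) -> List[Dict[str, object]]:
    """Flag training steps whose gradient norm looks exploding or vanishing.

    Non-finite values (NaN or infinity) are also reported as exploding.
    """
    issues: List[Dict[str, object]] = []
    for step, norm in enumerate(grad_norms):
        if not math.isfinite(norm) or norm > explode_at:
            issues.append({"step": step, "issue": "exploding"})
        elif norm < vanish_at:
            issues.append({"step": step, "issue": "vanishing"})
    return issues
```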

Amazon SageMaker Model Monitor

SageMaker Model Monitor checks model performance by analysing data drift. The baseline constraints and feature statistics are stored as JSON files. The constraints.json file lists the features together with their types. Its completeness field, a value between 0 and 1, defines how complete each feature is required to be. For each feature, the statistics.json file provides the mean, median, quantiles, and so on. The monitoring reports are stored in S3 and detailed in constraint_violations.json. That file lists feature names and the kind of violation (data type, minimum or maximum value of a feature, etc.).
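
To make the completeness field concrete, the sketch below checks incoming records against a simplified constraints layout. It returns violations loosely shaped like constraint_violations.json. The field names (`feature_name`, `constraint_check_type`, `completeness_check`) are assumptions modelled on Model Monitor's report format, not a guaranteed schema.

```python
from typing import Dict, List, Optional


def check_completeness(constraints: Dict, rows: List[Dict[str, Optional[float]]]) -> Dict:
    """Compare observed completeness with each feature's required completeness.

    `constraints` uses a simplified layout:
    {"features": [{"name": ..., "completeness": ...}, ...]}.
    """
    violations = []
    for feature in constraints["features"]:
        name, required = feature["name"], feature.get("completeness", 1.0)
        present = sum(1 for row in rows if row.get(name) is not None)
        observed = present / len(rows) if rows else 0.0
        if observed < required:
            violations.append({
                "feature_name": name,
                "constraint_check_type": "completeness_check",
                "description": f"completeness {observed:.2f} below required {required:.2f}",
            })
    return {"violations": violations}
```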

Amazon SageMaker Experiments

SageMaker makes it easy to track multiple experiments (training jobs, hyperparameter tuning jobs, etc.). Create an Estimator object and log the results of each trial. You can then import the experiment's values into a pandas DataFrame, which simplifies analysis.
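
Once trial results are exported, comparing them is ordinary data analysis. The sketch works on plain dictionaries to stay dependency-free, though the same ranking could be done on a pandas DataFrame. The trial names and metric values in the usage example are made up for illustration.

```python
from typing import Any, Dict, List


def rank_trials(trials: List[Dict[str, Any]], metric: str,
                higher_is_better: bool = True) -> List[Dict[str, Any]]:
    """Sort trial records by `metric`, best first; trials missing the metric are skipped."""
    scored = [t for t in trials if metric in t]
    return sorted(scored, key=lambda t: t[metric], reverse=higher_is_better)
```

For example, given `[{"trial": "lr-0.1", "accuracy": 0.81}, {"trial": "lr-0.01", "accuracy": 0.87}]`, `rank_trials(trials, "accuracy")` puts `lr-0.01` first.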

Amazon SageMaker Autopilot

With Autopilot, ML is only a few clicks away. Specify the input data and the target attribute, and optionally the problem type (regression, binary classification, or multiclass classification). If the problem type is not given, Autopilot infers it from the target and runs data preparation and model training accordingly. The data preparation stage generates Python code that can be reused in subsequent jobs or in a custom pipeline. Autopilot jobs can also be created programmatically through the CreateAutoMLJob API.
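
Autopilot's problem-type values are `BinaryClassification`, `MulticlassClassification`, and `Regression`. The function below is a rough, hypothetical heuristic that shows how such a choice might be made from the target column. It is not Autopilot's actual inference logic, and `max_classes` is an arbitrary cutoff.

```python
from typing import Sequence


def infer_problem_type(target: Sequence[object], max_classes: int = 20) -> str:
    """Guess a problem type from the target column (illustrative heuristic only)."""
    distinct = set(target)
    if len(distinct) < 2:
        raise ValueError("target needs at least two distinct values")
    if len(distinct) == 2:
        return "BinaryClassification"
    # Text labels are treated as classes.
    if all(isinstance(v, str) for v in distinct):
        return "MulticlassClassification"
    # A few whole-number values look like class ids rather than a continuous target.
    integral = all(isinstance(v, int) or (isinstance(v, float) and v.is_integer())
                   for v in distinct)
    if integral and len(distinct) <= max_classes:
        return "MulticlassClassification"
    return "Regression"
```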

Security in SageMaker

AWS uses a shared responsibility model for cloud security. AWS is responsible for security of the cloud, that is, protecting the underlying infrastructure. The customer is responsible for security in the cloud. This covers the services they choose, IAM key management, privileges for different users, keeping credentials secure, and so on.

Data Security: SageMaker encrypts data and model artefacts in transit and at rest. Requests to the Amazon SageMaker API and console are made over a secure (SSL) connection. An AWS Key Management Service (KMS) key encrypts notebooks and scripts. If no key is available, the data is encrypted with a transient key, which becomes unusable after decryption.

Conclusion

AWS charges each SageMaker customer for the compute, storage, and data processing resources needed to develop, train, run, and log machine learning models and predictions. Customers also pay the S3 costs of storing the data sets used for training and ongoing predictions. SageMaker's architecture supports the end-to-end lifecycle of ML applications, from data preparation through model execution. Its scalable structure makes it adaptable: you can use SageMaker for model building, training, and deployment separately.