Amazon has long offered its customers and clients a wide range of services to support their growth and success. In storage, its flagship offering is Amazon Simple Storage Service, better known as Amazon S3.

Amazon S3 is an object storage service that offers industry-leading scalability, data availability, security, and performance. Customers of all sizes and industries can use it to store and protect any amount of data for a range of use cases, such as data lakes, websites, big data analytics, backup and restore, archive, enterprise applications, mobile applications, and IoT devices. In this blog, we will look at the features of Amazon S3, how it works, and other important details so that you can get the most out of this storage service. So, let's begin!

What is Amazon S3?

Amazon S3 provides easy-to-use management features that help you organize your data and configure access controls to meet your specific business, organizational, and compliance requirements. It is designed for 99.999999999% (11 nines) of durability and stores data for millions of applications for companies all around the world.

You can use Amazon S3 to store and retrieve any amount of data at any time, from anywhere on the web. You can do this through the AWS Management Console, which provides a simple and intuitive web interface.

- Amazon S3 stores data as objects within buckets.

- An object consists of a file and, optionally, any metadata that describes that file.

- To store an object in Amazon S3, you upload the file you want to store to a bucket. After uploading the file, you can set permissions on the object and on any metadata.

- Buckets are containers for objects. You can have one or more buckets. For each bucket, you can:

  - control access to it, that is, who can create, delete, and list objects in the bucket

  - view access logs for the bucket and its objects

  - choose the geographical region where Amazon S3 will store the bucket and its contents.
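To make the bucket and object model concrete, here is a minimal sketch that stores a file as an object together with user-defined metadata and then reads the metadata back. It assumes a boto3-style S3 client (`put_object`, `head_object`); the helper name `upload_with_metadata` and any bucket names or keys you pass to it are hypothetical. The client is passed in as a parameter, so the logic can be tried out without AWS credentials.

```python
from typing import Any, Dict


def upload_with_metadata(s3_client: Any, bucket: str, key: str,
                         data: bytes, metadata: Dict[str, str]) -> Dict[str, str]:
    """Hypothetical helper: store `data` as an object, then return its metadata.

    Assumes `s3_client` behaves like a boto3 S3 client.
    """
    # An object is the file bytes plus optional metadata; the key names it in the bucket.
    s3_client.put_object(Bucket=bucket, Key=key, Body=data, Metadata=metadata)
    # head_object returns the object's metadata without downloading its body.
    response = s3_client.head_object(Bucket=bucket, Key=key)
    return response.get("Metadata", {})
```

With a real client this would be called as, for example, `upload_with_metadata(boto3.client("s3"), "example-bucket", "reports/summary.txt", b"hello", {"author": "alice"})`, where the bucket name and key are placeholders.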

Next, we will look at the important features of Amazon S3 that set it apart in the market.

Amazon S3 Benefits and Features

What are the benefits of Amazon S3?

- Firstly, Amazon S3 provides industry-leading performance, scalability, availability, and durability. You can scale your storage resources up and down to meet fluctuating demands, without upfront investments or resource procurement cycles.

- Secondly, it provides a wide range of cost-effective storage classes. You can save costs without sacrificing performance by storing data across the S3 Storage Classes, which support different data access levels at corresponding rates. You can also use S3 Storage Class Analysis to discover data that should move to a lower-cost storage class based on access patterns, and configure an S3 Lifecycle policy to execute the transfer.

- Thirdly, it offers security, compliance, and audit capabilities. Encryption features and access management tools protect data stored in Amazon S3 from unauthorized access. With S3 Block Public Access, you can block public access to all of your objects at the bucket or account level. Amazon S3 also supports compliance programs such as PCI-DSS, HIPAA/HITECH, FedRAMP, the EU Data Protection Directive, and FISMA to help you meet regulatory requirements.

- Next, it makes it easy to manage data and access controls. It provides robust capabilities for managing access, cost, replication, and data protection. For example, S3 Access Points let applications that use a shared data set access it with permissions specific to each application.

- Moving on, it lets you query in place and process on demand. You can run big data analytics across your S3 objects with query-in-place services: use Amazon Athena to query S3 data with standard SQL expressions, and Amazon Redshift Spectrum to analyze data stored across your AWS data warehouses and S3 resources.

- Lastly, it is the most widely supported cloud storage service. You can store and protect your data in Amazon S3 by working with a partner from the AWS Partner Network (APN).

What are the Features of Amazon S3?

Amazon S3 has features that help you organize and manage data in ways that support specific use cases, enable cost efficiencies, enforce security, and meet compliance requirements.

1. Storage management and monitoring

- Firstly, Amazon S3 helps customers and industries organize their data in ways that add value to their businesses and teams. All objects are stored in S3 buckets and can be organized with shared names called prefixes.

- Secondly, you can add up to 10 key-value pairs, called S3 object tags, to each object. Tags can be created, updated, and deleted throughout an object's lifecycle.

- Thirdly, you can use an S3 Inventory report to keep track of objects and their respective tags, buckets, and prefixes.

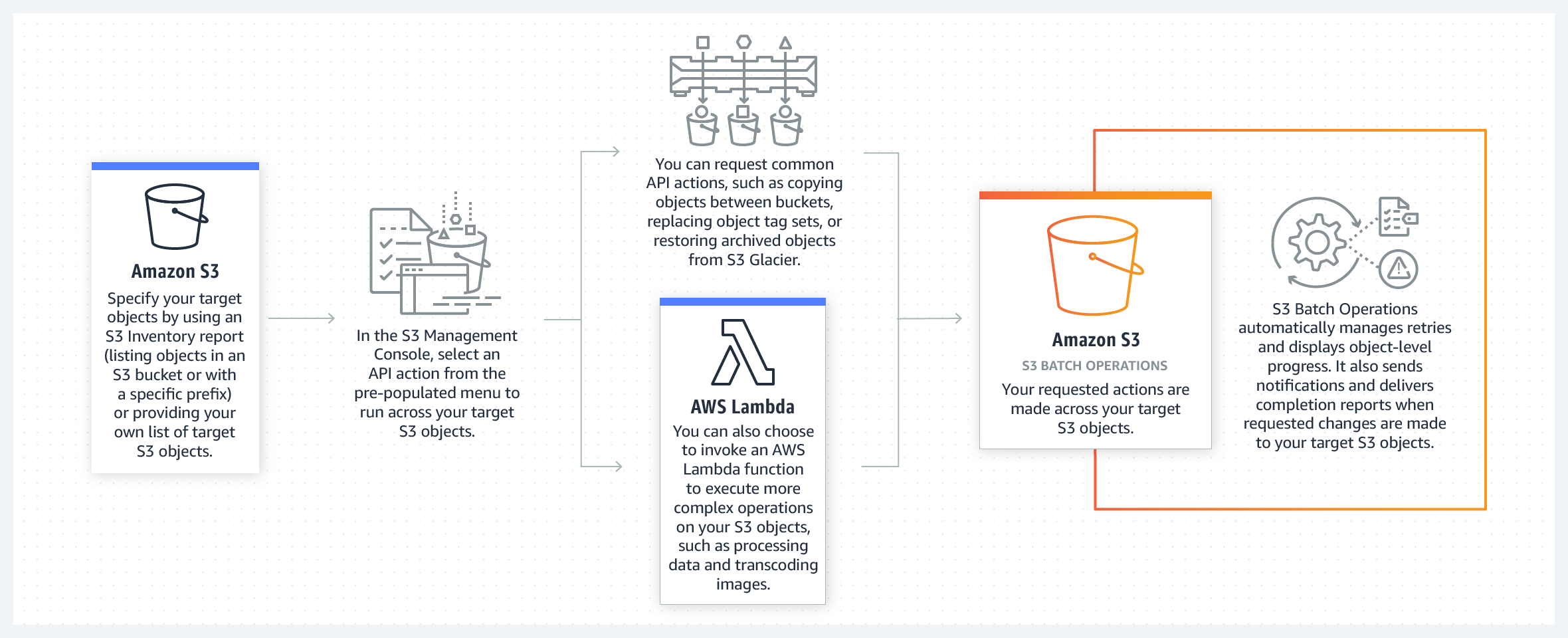

- Fourthly, S3 Batch Operations makes it simple to manage your data in Amazon S3 at any scale. With a single S3 API request or a few clicks in the Amazon S3 Management Console, you can use S3 Batch Operations to:

  - copy objects between buckets

  - replace object tag sets

  - modify access controls

  - restore archived objects from Amazon S3 Glacier

- Lastly, you can use S3 Batch Operations to run AWS Lambda functions across your objects to execute custom business logic, such as processing data or transcoding image files.
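Object tags are passed to S3 as a `TagSet` list of key-value pairs. The sketch below validates tags against the per-object limits (10 tags, keys up to 128 characters, values up to 256 characters) and then replaces an object's tag set. It assumes a boto3-style client with `put_object_tagging`; the helper names are hypothetical, and note that this call replaces the whole tag set rather than merging into it.

```python
from typing import Any, Dict, List

MAX_TAGS_PER_OBJECT = 10   # S3 limit on tags per object
MAX_KEY_LENGTH = 128       # maximum tag key length
MAX_VALUE_LENGTH = 256     # maximum tag value length


def build_tag_set(tags: Dict[str, str]) -> Dict[str, List[Dict[str, str]]]:
    """Hypothetical helper: validate tags and build the Tagging structure."""
    if len(tags) > MAX_TAGS_PER_OBJECT:
        raise ValueError(f"S3 allows at most {MAX_TAGS_PER_OBJECT} tags per object")
    tag_set = []
    for key, value in tags.items():
        if not key or len(key) > MAX_KEY_LENGTH:
            raise ValueError(f"invalid tag key: {key!r}")
        if len(value) > MAX_VALUE_LENGTH:
            raise ValueError(f"tag value too long for key {key!r}")
        tag_set.append({"Key": key, "Value": value})
    return {"TagSet": tag_set}


def tag_object(s3_client: Any, bucket: str, key: str, tags: Dict[str, str]) -> None:
    """Hypothetical helper: replace the tag set of an existing object."""
    s3_client.put_object_tagging(Bucket=bucket, Key=key, Tagging=build_tag_set(tags))
```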

2. Storage Analytics and Insights

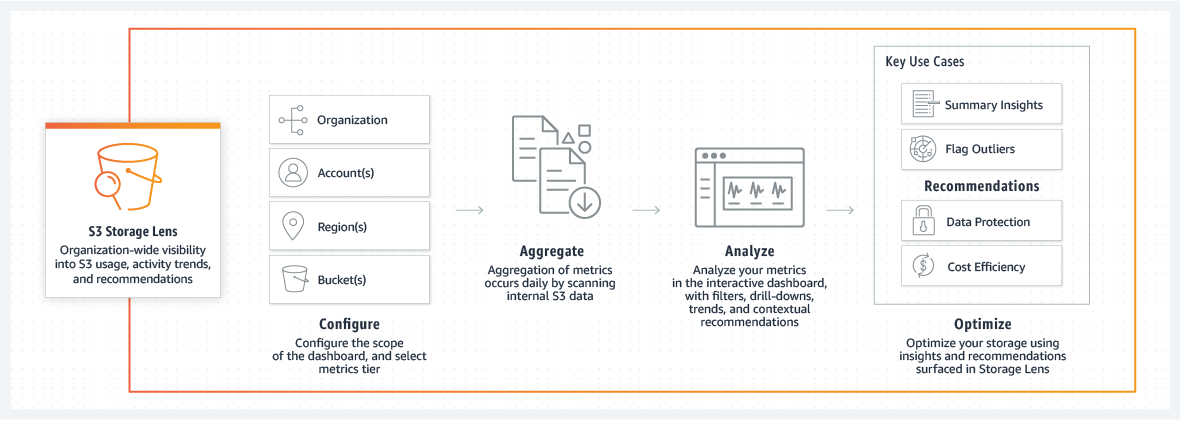

- S3 Storage Lens

S3 Storage Lens provides organization-wide visibility into object storage usage and activity trends, and makes actionable recommendations to improve cost-efficiency and apply data protection best practices. It is the first cloud storage analytics solution to provide a single view of object storage usage and activity across hundreds, or even thousands, of accounts in an organization, generating insights at the account, bucket, or even prefix level.

- S3 Storage Class Analysis

Amazon S3 Storage Class Analysis analyzes storage access patterns to help you decide when to transition the right data to the right storage class. It observes data access patterns to help you determine when to move less frequently accessed data to a lower-cost storage class. You can use the results to improve your S3 Lifecycle policies, and you can configure storage class analysis to analyze all the objects in a bucket.
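A storage class analysis is attached to a bucket as an analytics configuration. The following sketch builds one that covers either the whole bucket or a single prefix, optionally exporting the results as CSV to another bucket. It assumes the request shape of boto3's `put_bucket_analytics_configuration`; the helper names, configuration IDs, and bucket ARNs are hypothetical.

```python
from typing import Any, Dict, Optional


def storage_class_analysis_config(config_id: str,
                                  prefix: Optional[str] = None,
                                  export_bucket_arn: Optional[str] = None) -> Dict[str, Any]:
    """Hypothetical helper: build an AnalyticsConfiguration for Storage Class Analysis.

    Without a prefix, the analysis covers all objects in the bucket.
    """
    config: Dict[str, Any] = {"Id": config_id, "StorageClassAnalysis": {}}
    if prefix:
        # Restrict the analysis to objects that share this prefix.
        config["Filter"] = {"Prefix": prefix}
    if export_bucket_arn:
        # Export the analysis results as CSV files to another bucket.
        config["StorageClassAnalysis"]["DataExport"] = {
            "OutputSchemaVersion": "V_1",
            "Destination": {
                "S3BucketDestination": {"Format": "CSV", "Bucket": export_bucket_arn}
            },
        }
    return config


def enable_analysis(s3_client: Any, bucket: str, config: Dict[str, Any]) -> None:
    """Attach the configuration to a bucket (boto3-style call)."""
    s3_client.put_bucket_analytics_configuration(
        Bucket=bucket, Id=config["Id"], AnalyticsConfiguration=config
    )
```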

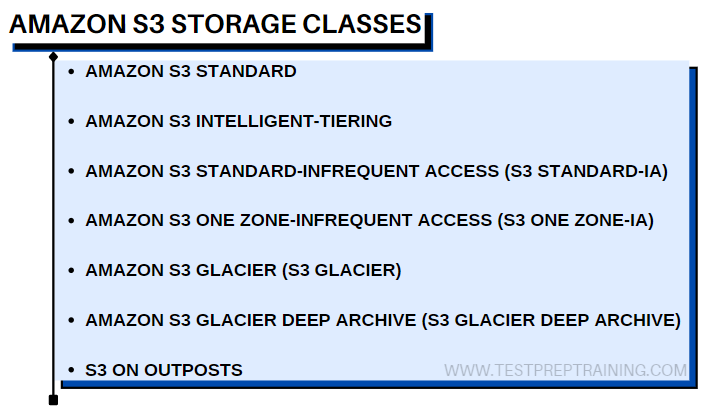

3. Storage classes

- Every S3 Storage Class supports a specific data access level at a corresponding cost or geographic location. For example, you can store mission-critical production data in S3 Standard for frequent access, save costs by storing infrequently accessed data in S3 Standard-IA or S3 One Zone-IA, and archive data at the lowest costs in the archival storage classes, S3 Glacier and S3 Glacier Deep Archive.

- If you have data residency requirements that cannot be met by an existing AWS Region, you can use the S3 Outposts storage class to store your S3 data on premises using S3 on Outposts.

- You can also store data with changing or unknown access patterns in S3 Intelligent-Tiering, which automatically moves your data between two low-latency access tiers, one optimized for frequent access and one for infrequent access, as access patterns change. When subsets of objects become rarely accessed over long periods of time, you can activate two archive access tiers designed for asynchronous access.
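The optional archive tiers of S3 Intelligent-Tiering are switched on per bucket with a configuration that says after how many days without access an object moves into each tier. This sketch assumes the request shape of boto3's `put_bucket_intelligent_tiering_configuration` and minimum thresholds of 90 days (Archive Access) and 180 days (Deep Archive Access); check the current S3 documentation for the exact limits. The helper names and configuration IDs are hypothetical.

```python
from typing import Any, Dict

# Assumed minimum day thresholds for the opt-in archive tiers.
MIN_ARCHIVE_DAYS = 90
MIN_DEEP_ARCHIVE_DAYS = 180


def intelligent_tiering_config(config_id: str, archive_days: int = 90,
                               deep_archive_days: int = 180) -> Dict[str, Any]:
    """Hypothetical helper: activate both archive access tiers."""
    if archive_days < MIN_ARCHIVE_DAYS:
        raise ValueError(f"Archive Access needs at least {MIN_ARCHIVE_DAYS} days")
    if deep_archive_days < MIN_DEEP_ARCHIVE_DAYS:
        raise ValueError(f"Deep Archive Access needs at least {MIN_DEEP_ARCHIVE_DAYS} days")
    return {
        "Id": config_id,
        "Status": "Enabled",
        "Tierings": [
            # Objects not accessed for this many days move to the archive tier.
            {"Days": archive_days, "AccessTier": "ARCHIVE_ACCESS"},
            {"Days": deep_archive_days, "AccessTier": "DEEP_ARCHIVE_ACCESS"},
        ],
    }


def apply_intelligent_tiering(s3_client: Any, bucket: str, config: Dict[str, Any]) -> None:
    """Attach the configuration to a bucket (boto3-style call)."""
    s3_client.put_bucket_intelligent_tiering_configuration(
        Bucket=bucket, Id=config["Id"], IntelligentTieringConfiguration=config
    )
```

This configuration only affects objects stored in the Intelligent-Tiering class, for example objects uploaded with `StorageClass="INTELLIGENT_TIERING"` in `put_object`.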

4. Access management and security

Access management

To keep data safe in Amazon S3, by default users can access only the S3 resources they create. You can grant access to other users by using one or a combination of the following access management features:

- Firstly, AWS Identity and Access Management (IAM) for creating users and managing their access

- Secondly, Access Control Lists (ACLs) for making individual objects accessible to authorized users

- Thirdly, Bucket policies for configuring permissions for all objects within a single S3 bucket

- Then, S3 Access Points for simplifying access management for shared data sets by creating access points with names and permissions specific to each application or set of applications

- Lastly, Query String Authentication for granting time-limited access to others with temporary URLs.
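Bucket policies are JSON documents in the IAM policy language. As an illustration, the sketch below builds a policy that lets a single IAM principal read objects under one prefix and attaches it with a boto3-style `put_bucket_policy` call, which expects the policy as a JSON string. The helper names, the statement ID, and any ARNs such as `arn:aws:iam::111122223333:role/reader` are hypothetical.

```python
import json
from typing import Any, Dict


def read_only_policy(bucket: str, principal_arn: str, prefix: str = "") -> Dict[str, Any]:
    """Hypothetical helper: let one IAM principal read objects under a prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowReadUnderPrefix",
                "Effect": "Allow",
                "Principal": {"AWS": principal_arn},
                "Action": "s3:GetObject",
                # Object ARNs take the form arn:aws:s3:::bucket/key.
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            }
        ],
    }


def attach_policy(s3_client: Any, bucket: str, policy: Dict[str, Any]) -> None:
    # put_bucket_policy takes the policy document as a JSON string.
    s3_client.put_bucket_policy(Bucket=bucket, Policy=json.dumps(policy))
```

For the time-limited access mentioned in the last bullet, boto3 clients also offer `generate_presigned_url("get_object", Params={"Bucket": ..., "Key": ...}, ExpiresIn=3600)`, which returns a temporary URL instead of changing the bucket policy.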

Security

- Amazon S3 offers flexible security features that block unauthorized users from accessing your data. These include VPC endpoints for connecting to S3 resources from your Amazon Virtual Private Cloud (Amazon VPC) and from on premises. Amazon S3 also supports S3 Block Public Access, a security control that ensures S3 buckets and objects do not have public access. With a few clicks in the Amazon S3 Management Console, you can apply the S3 Block Public Access settings to all buckets within your AWS account or to specific S3 buckets.

- After you apply the settings to an AWS account, any existing or new buckets and objects associated with that account inherit the settings that prevent public access. These settings override other S3 access permissions, so the account administrator can enforce “no public access”.

- S3 Block Public Access controls are auditable, provide a further layer of control, and use AWS Trusted Advisor bucket permission checks, AWS CloudTrail logs, and Amazon CloudWatch alarms.
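S3 Block Public Access consists of four settings that can be applied to a single bucket or to a whole account. The sketch below enables all four, using a boto3-style S3 client for the bucket level and an S3 Control client (whose `put_public_access_block` takes an `AccountId`) for the account level. The helper names and the account ID are hypothetical.

```python
from typing import Any, Dict


def block_all_public_access() -> Dict[str, bool]:
    """Hypothetical helper: the four Block Public Access settings, all enabled."""
    return {
        "BlockPublicAcls": True,        # reject requests that add public ACLs
        "IgnorePublicAcls": True,       # ignore any existing public ACLs
        "BlockPublicPolicy": True,      # reject bucket policies that grant public access
        "RestrictPublicBuckets": True,  # restrict access to buckets with public policies
    }


def block_bucket(s3_client: Any, bucket: str) -> None:
    """Apply the settings to one bucket (boto3 S3 client)."""
    s3_client.put_public_access_block(
        Bucket=bucket, PublicAccessBlockConfiguration=block_all_public_access()
    )


def block_account(s3control_client: Any, account_id: str) -> None:
    """Apply the settings account-wide (boto3 S3 Control client)."""
    s3control_client.put_public_access_block(
        AccountId=account_id, PublicAccessBlockConfiguration=block_all_public_access()
    )
```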

5. Data processing

- S3 Object Lambda

S3 Object Lambda lets you add your own code to S3 GET requests to modify and process data as it is returned to an application. It uses AWS Lambda functions to automatically process the output of a standard S3 GET request. AWS Lambda is a serverless compute service that runs customer-defined code without requiring you to manage the underlying compute resources. With just a few clicks in the AWS Management Console, you can configure a Lambda function and attach it to an S3 Object Lambda Access Point. From that point forward, S3 automatically calls your Lambda function to process any data retrieved through the S3 Object Lambda Access Point, returning a transformed result to the application. Because you write your own custom Lambda functions, you can tailor S3 Object Lambda's data transformation to your specific use case.
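Here is a minimal sketch of the Lambda side of this flow. The function receives a `getObjectContext` in its event containing a presigned `inputS3Url` for the original object plus an `outputRoute` and `outputToken`, and it returns the transformed bytes through the S3 client's `write_get_object_response`. The upper-casing transform and the helper names are hypothetical placeholders for your own logic; the fetch function and client are injected so the handler can be exercised locally.

```python
import urllib.request
from typing import Any, Callable, Dict


def transform(body: bytes) -> bytes:
    """Hypothetical transformation: upper-case the object's text content."""
    return body.upper()


def fetch_original(url: str) -> bytes:
    # inputS3Url is a presigned URL for the original, untransformed object.
    with urllib.request.urlopen(url) as response:
        return response.read()


def handle_object_lambda(event: Dict[str, Any], s3_client: Any,
                         fetch: Callable[[str], bytes] = fetch_original) -> Dict[str, int]:
    """Hypothetical handler body for an S3 Object Lambda Access Point."""
    ctx = event["getObjectContext"]
    original = fetch(ctx["inputS3Url"])
    # Send the transformed bytes back to the application that made the GET request.
    s3_client.write_get_object_response(
        Body=transform(original),
        RequestRoute=ctx["outputRoute"],
        RequestToken=ctx["outputToken"],
    )
    return {"statusCode": 200}
```

In a deployed function, the Lambda entry point would simply call `handle_object_lambda(event, boto3.client("s3"))`; keeping the client as a parameter is a design choice that makes the handler easy to test.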

6. Query in place

Amazon S3 has a built-in feature for querying data without first copying it and loading it into a separate analytics platform or data warehouse, so you can run big data analytics directly on your data stored in Amazon S3. S3 Select is an S3 feature designed to increase query performance by up to 400% and reduce query costs by as much as 80%.

Amazon S3 is also compatible with AWS analytics services such as:

- Firstly, Amazon Athena, for querying data in Amazon S3 without extracting it and loading it into a separate service or platform.

- Secondly, Amazon Redshift Spectrum, which runs SQL queries directly against data at rest in Amazon S3 and is well suited to complex queries and large data sets.
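To show what querying in place looks like, here is a sketch of an S3 Select request against a CSV object with a header row. It assumes boto3's `select_object_content`, whose response `Payload` is an event stream in which `Records` events carry chunks of the result; the helper names, bucket, key, and SQL are hypothetical. AWS has since stopped offering S3 Select to new customers, so for new workloads Amazon Athena is the more likely route.

```python
from typing import Any, Iterable


def collect_records(payload: Iterable[dict]) -> bytes:
    """Concatenate the result chunks from a select_object_content event stream."""
    chunks = []
    for event in payload:
        # Other event types (Stats, Progress, End) carry no result data.
        if "Records" in event:
            chunks.append(event["Records"]["Payload"])
    return b"".join(chunks)


def select_csv(s3_client: Any, bucket: str, key: str, sql: str) -> str:
    """Hypothetical helper: run SQL against a CSV object whose first row is a header."""
    response = s3_client.select_object_content(
        Bucket=bucket,
        Key=key,
        ExpressionType="SQL",
        Expression=sql,
        InputSerialization={"CSV": {"FileHeaderInfo": "USE"}},
        OutputSerialization={"CSV": {}},
    )
    return collect_records(response["Payload"]).decode("utf-8")
```

A query such as `SELECT s.city FROM S3Object s WHERE CAST(s.total AS INT) > 100` then returns only the matching rows, so less data leaves S3.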

7. Data transfer

AWS offers a portfolio of data transfer services so that you can choose the right solution for any data migration project:

- Firstly, hybrid cloud storage. AWS Storage Gateway is a hybrid cloud storage service that lets you connect and extend your on-premises applications to AWS Storage.

- Secondly, online data transfer. AWS DataSync makes it efficient to transfer hundreds of terabytes and millions of files into Amazon S3. DataSync automates or eliminates many manual tasks, including:

  - scripting copy jobs

  - scheduling

  - monitoring transfers

  - validating data

  - optimizing network utilization

- Then, offline data transfer. The AWS Snow Family is built for use in edge locations where network capacity is constrained or nonexistent, and it provides storage and compute capabilities in harsh environments. The AWS Snowball service, for example, uses portable storage and edge computing devices to collect, process, and migrate data.

8. Performance

- Firstly, Amazon S3 provides industry-leading performance for cloud object storage. It supports parallel requests, so you can scale your S3 performance by the factor of your compute cluster without making any customizations to your application.

- Secondly, performance scales per prefix, so you can use as many prefixes as you need in parallel to achieve the required throughput. Amazon S3 supports at least 3,500 requests per second to add data and 5,500 requests per second to retrieve data, per prefix.

- Lastly, you do not need to randomize object prefixes to achieve this request rate. You can use logical or sequential naming patterns for S3 objects without any performance implications.
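Because the request rates above apply per prefix, a quick calculation tells you how many prefixes a workload should spread across, and parallel requests then do the rest. For example, a target of 20,000 GET requests per second needs ceil(20,000 / 5,500) = 4 prefixes. The sketch below does that arithmetic and fetches several objects concurrently with a thread pool; it assumes a boto3-style client (`get_object`, whose response `Body` has `read()`), and the helper names are hypothetical.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Dict, List

GET_RPS_PER_PREFIX = 5500   # baseline GET/HEAD requests per second per prefix
PUT_RPS_PER_PREFIX = 3500   # baseline PUT/COPY/POST/DELETE requests per second per prefix


def prefixes_needed(target_rps: int, per_prefix_rps: int) -> int:
    """Smallest number of prefixes whose combined baseline rate meets target_rps."""
    return max(1, math.ceil(target_rps / per_prefix_rps))


def parallel_get(s3_client: Any, bucket: str, keys: List[str],
                 max_workers: int = 16) -> Dict[str, bytes]:
    """Hypothetical helper: download several objects concurrently."""
    def fetch(key: str):
        return key, s3_client.get_object(Bucket=bucket, Key=key)["Body"].read()

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(fetch, keys))
```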

How S3 features work

1. S3 Object Lambda

S3 Object Lambda lets you add your own code to process data retrieved from S3 before it is returned to an application.

2. S3 Storage Lens

S3 Storage Lens helps you gain organization-wide visibility into storage usage and activity trends, and receive actionable recommendations.

3. S3 Intelligent-Tiering

You can optimize storage costs using S3 Intelligent-Tiering.

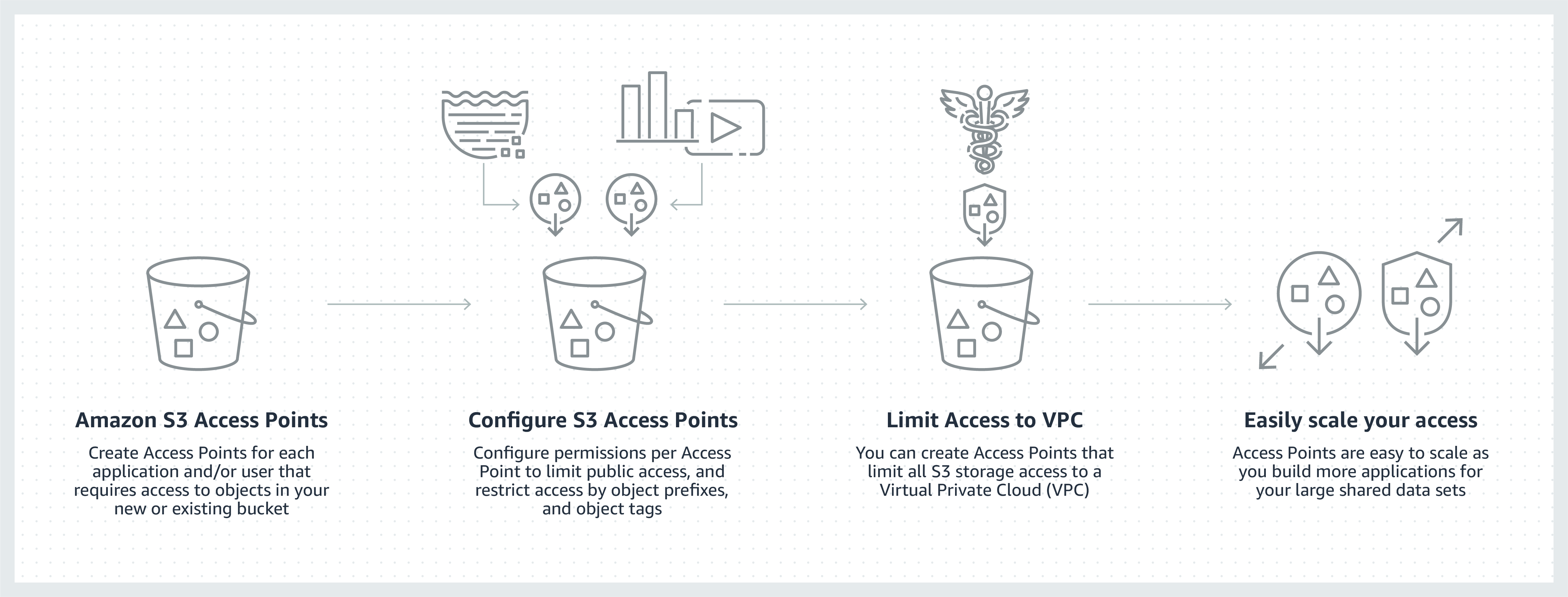

4. Amazon S3 Access Points

Amazon S3 Access Points help you easily manage access to shared data sets.

5. S3 Batch Operations

S3 Batch Operations helps you manage tens to billions of objects at scale.

6. S3 Block Public Access

With S3 Block Public Access, you can block all public access to your S3 data, now and in the future.

Amazon S3 Use cases

1. Backup and restore

Use Amazon S3 and other AWS services, such as S3 Glacier, Amazon EFS, and Amazon EBS, to build scalable, durable, and secure backup and restore solutions that augment or replace existing on-premises capabilities. AWS and APN partners can help you meet Recovery Time Objectives (RTO), Recovery Point Objectives (RPO), and compliance requirements. With AWS, you can back up data that is already in the AWS Cloud, or you can use AWS Storage Gateway, a hybrid storage service, to send backups of on-premises data to AWS.

2. Disaster recovery (DR)

Protect critical data, applications, and IT systems running in the AWS Cloud or in your on-premises environment without incurring the expense of a second physical site. With Amazon S3 storage, S3 Cross-Region Replication, and other AWS compute, networking, and database services, you can create DR architectures that recover quickly from outages caused by natural disasters, system failures, and human errors.
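Cross-Region Replication is configured on the source bucket with an IAM role that S3 assumes and one or more rules pointing at a destination bucket in another Region. The sketch below builds a single rule that replicates every object (an empty prefix filter) and applies it after enabling versioning, which replication requires on both buckets. It assumes the request shapes of boto3's `put_bucket_versioning` and `put_bucket_replication`; the helper names, role ARN, and bucket ARN are hypothetical.

```python
from typing import Any, Dict


def replication_config(role_arn: str, destination_bucket_arn: str,
                       rule_id: str = "replicate-all") -> Dict[str, Any]:
    """Hypothetical helper: one rule that replicates every object to another bucket."""
    return {
        "Role": role_arn,  # IAM role that S3 assumes to replicate objects
        "Rules": [
            {
                "ID": rule_id,
                "Priority": 1,
                "Filter": {"Prefix": ""},  # an empty prefix matches every object
                "Status": "Enabled",
                "DeleteMarkerReplication": {"Status": "Disabled"},
                "Destination": {"Bucket": destination_bucket_arn},
            }
        ],
    }


def enable_replication(s3_client: Any, source_bucket: str, config: Dict[str, Any]) -> None:
    # Replication needs versioning on the source bucket; the destination bucket
    # (often in another Region) must have versioning enabled separately.
    s3_client.put_bucket_versioning(
        Bucket=source_bucket, VersioningConfiguration={"Status": "Enabled"}
    )
    s3_client.put_bucket_replication(Bucket=source_bucket, ReplicationConfiguration=config)
```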

3. Archive

Archive data using S3 Glacier and S3 Glacier Deep Archive. These S3 Storage Classes retain objects long-term at the lowest rates. Create an S3 Lifecycle policy to archive objects throughout their lifecycles, or upload objects directly to the archival storage classes.

With S3 Object Lock, you can apply retention dates to objects to protect them from deletion and meet compliance requirements. S3 Glacier can restore archived objects in as little as 1 to 5 minutes with expedited retrievals, and in 3 to 5 hours with standard retrievals.
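An S3 Lifecycle policy that archives objects is a list of rules, each with a filter and a series of transitions. The sketch below moves objects under a prefix to S3 Standard-IA, then to S3 Glacier (API name `GLACIER`, that is, Glacier Flexible Retrieval), then to S3 Glacier Deep Archive. It assumes the request shape of boto3's `put_bucket_lifecycle_configuration`; the helper names and the 30/90/365-day defaults are illustrative choices, not recommendations. S3 enforces minimum durations for some transitions (for example, objects must be at least 30 days old before moving to Standard-IA), and the defaults here stay clear of those.

```python
from typing import Any, Dict


def archive_lifecycle(prefix: str, ia_days: int = 30, glacier_days: int = 90,
                      deep_archive_days: int = 365) -> Dict[str, Any]:
    """Hypothetical helper: tier objects under `prefix` down to archival storage."""
    if not ia_days < glacier_days < deep_archive_days:
        raise ValueError("transition days must increase from tier to tier")
    return {
        "Rules": [
            {
                "ID": f"archive-{prefix.rstrip('/') or 'all'}",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": ia_days, "StorageClass": "STANDARD_IA"},
                    {"Days": glacier_days, "StorageClass": "GLACIER"},
                    {"Days": deep_archive_days, "StorageClass": "DEEP_ARCHIVE"},
                ],
            }
        ]
    }


def apply_lifecycle(s3_client: Any, bucket: str, config: Dict[str, Any]) -> None:
    """Attach the lifecycle configuration to a bucket (boto3-style call)."""
    s3_client.put_bucket_lifecycle_configuration(Bucket=bucket, LifecycleConfiguration=config)
```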

4. Data lakes and big data analytics

Accelerate innovation by building a data lake on Amazon S3 and extracting valuable insights with query-in-place, analytics, and machine learning tools. Use S3 Access Points to configure access to your data, with specific permissions for each application or set of applications. You can also use AWS Lake Formation to create a data lake and to centrally define and enforce security, governance, and auditing policies.

Lake Formation collects data across your databases and S3 resources, moves it into a new data lake in Amazon S3, and cleans it using machine learning algorithms.

5. Hybrid cloud storage

Set up private connectivity between Amazon S3 and on premises with AWS PrivateLink: you can provision private endpoints in a VPC that allow direct access to S3 from on premises using private IPs from your VPC. AWS Storage Gateway lets you connect and extend your on-premises applications to AWS Storage while caching data locally for low-latency access. You can also use AWS DataSync to automate data transfers between on-premises storage, S3 on Outposts, and Amazon S3.

You can also use AWS Transfer Family to transfer files directly into and out of Amazon S3. This fully managed, simple, and seamless service enables secure file exchanges with third parties using SFTP, FTPS, and FTP.

6. Cloud-native applications

Use AWS services and Amazon S3 to build fast, cost-effective mobile and internet-based applications. Amazon S3 can store the development and production data shared by the microservices that make up cloud-native applications. With Amazon S3, you can upload any amount of data and access it anywhere, so you can deploy applications faster and reach more end users. Storing data in Amazon S3 also gives you access to the latest AWS developer tools, the S3 API, and AWS machine learning and analytics services to innovate and optimize your cloud-native applications.

Case studies: Top Companies

Georgia-Pacific

- Using Amazon S3, Georgia-Pacific built a central data lake that lets it efficiently ingest and analyze structured and unstructured data at scale.

Nasdaq

- Nasdaq stores up to seven years of data in Amazon S3 Glacier to meet industry regulation and compliance requirements. With AWS, the company can restore data when needed while optimizing its long-term storage costs.

Sysco

- To run analytics on its data and gain business insights, Sysco consolidates its data into a single data lake built on Amazon S3 and Amazon S3 Glacier.

Nielsen

- Using Amazon S3, Nielsen built a new, cloud-native local television rating platform capable of storing 30 petabytes of data. The platform also uses Amazon Redshift, AWS Lambda, and Amazon EMR.

So far, we have covered the overview, features, and workings of Amazon S3. But one question may be on your mind: “How do I get started with S3?” No worries! In the next section, we will go through the steps to get started with Amazon S3.

Getting started with Amazon S3

Firstly, set up and log in to your AWS account. You need an AWS account to use Amazon S3. If you don't have one, you can create one when you sign up for Amazon S3. There is no charge for creating an account.

Secondly, create a bucket. Every object in Amazon S3 is stored in a bucket, so you must create an S3 bucket before you can store data in Amazon S3.

To create a bucket:

- Firstly, sign in to the AWS Management Console and open the Amazon S3 console at https://console.aws.amazon.com/s3/.

- Secondly, choose Create bucket. The Create bucket page opens.

- Thirdly, in Bucket name, enter a DNS-compliant name for your bucket. The bucket name must:

  - be unique across all of Amazon S3

  - be between 3 and 63 characters long

  - not contain uppercase characters

  - start with a lowercase letter or number

  After you create the bucket, you cannot change its name.

- Next, in Region, select the AWS Region where you want the bucket to reside. Choose a Region close to you geographically to minimize latency and costs and to address regulatory requirements. Objects stored in a Region never leave it unless you explicitly transfer them to another Region.

- After that, under Bucket settings for Block Public Access, keep the values set to the defaults. By default, Amazon S3 blocks all public access to your buckets, and we recommend that you keep all Block Public Access settings enabled.

- Lastly, choose Create bucket.
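The same steps can be scripted. The sketch below checks a bucket name against a subset of the naming rules (length, allowed characters, first and last character, no adjacent periods, not shaped like an IP address) and then creates the bucket in a chosen Region. Global uniqueness cannot be checked locally; S3 reports a conflict at creation time. It assumes a boto3-style `create_bucket`, where the Region is passed as `LocationConstraint` except for `us-east-1`, the default location, which takes no location constraint. The helper names are hypothetical.

```python
import re
from typing import Any

# Lowercase letters, digits, dots, and hyphens; 3-63 characters;
# must start and end with a letter or digit.
_BUCKET_NAME_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")
_IP_ADDRESS_RE = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def is_valid_bucket_name(name: str) -> bool:
    """Hypothetical helper: check a subset of the S3 bucket naming rules."""
    if not _BUCKET_NAME_RE.fullmatch(name):
        return False
    if ".." in name:                    # adjacent periods are not allowed
        return False
    if _IP_ADDRESS_RE.fullmatch(name):  # names must not look like an IP address
        return False
    return True


def create_bucket(s3_client: Any, name: str, region: str) -> None:
    """Hypothetical helper: validate the name, then create the bucket in `region`."""
    if not is_valid_bucket_name(name):
        raise ValueError(f"invalid bucket name: {name!r}")
    if region == "us-east-1":
        # us-east-1 is the default location and is not passed as a LocationConstraint.
        s3_client.create_bucket(Bucket=name)
    else:
        s3_client.create_bucket(
            Bucket=name, CreateBucketConfiguration={"LocationConstraint": region}
        )
```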

Lastly, start building with AWS. Now that you have created a bucket, it's time to add an object to it. An object can be any kind of file: a text file, a photo, a video, and so on.

Final words

In this blog, we have covered the various services and features of Amazon S3, a storage service that lets you store and retrieve any amount of data from anywhere. Thanks to these features, many large companies have moved their data to S3. AWS also offers storage courses that teach you about highly available storage solutions built on Amazon S3. So, start exploring Amazon S3 with these resources and build your expertise!