Exam DP-203: Data Engineering on Microsoft Azure

Candidates who are proficient in data processing languages should take the DP-203: Data Engineering on Microsoft Azure exam. SQL, Python, or Scala are all options. Parallel processing and data architecture patterns must be recognisable to them. They must also be capable of integrating, converting, and combining data from a variety of structured and unstructured data systems into a framework that can be used to construct analytics solutions.

However, passing this exam will help candidates in becoming Microsoft Certified: Azure Data Engineer Associate.

Azure Data Engineer: Role and Responsibilities

- Firstly, Azure Data Engineers help stakeholders in understanding the data through exploration.

- Secondly, they have the skills to build and maintain secure and compliant data processing pipelines by using different tools and techniques.

- Thirdly, they have familiarity with Azure data services and languages for storing and producing datasets for analysis.

- Fourthly, they ensure that data pipelines and data stores are high-performing, efficient, organized, and reliable.

- Lastly, they handle unanticipated issues swiftly and minimize data loss. And, they are also responsible for designing, implementing, monitoring, and optimizing data platforms for meeting the data pipeline’s needs.

Exam Learning Path

Microsoft provides access to its learning path designed according to the exam. These learning paths consist of topics that contain various modules with details about the concept. Candidates can explore these modules to understand the concepts. For the Microsoft DP-203 exam, the modules include:

- Firstly, Azure for the Data Engineer

- Secondly, storing data in Azure

- Thirdly, Data integration at scale with Azure Data Factory or Azure Synapse Pipeline

- Next, using Azure Synapse Analytics for integrated Analytical Solutions and working with data warehouses

- Then, performing data engineering using Azure Synapse Apache Spark Pools

- After that, Hybrid Transactional and Analytical Processing Solutions working process with using Azure Synapse Analytics

- Next, Data engineering with Azure Databricks

- Them, large-Scale Data Processing with Azure Data Lake Storage Gen2

- Lastly, implementing a Data Streaming Solution with Azure Streaming Analytics

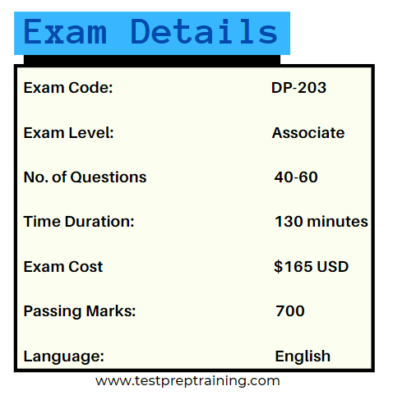

Microsoft DP-203 Exam Details

Microsoft DP-203 exam will have 40-60 questions that can be in a format like scenario-based single answer questions, multiple-choice questions, arranged in the correct sequence type questions, or drop type of questions. There will be a time limit of 130 minutes to complete the exam and the passing score is a minimum of 700. Further, the Microsoft DP-203 exam will cost $165 USD and the exam can be taken in only the English language.

Scheduling Exam

Microsoft DP-203 exam measures the ability to perform tasks like designing and implementing data storage with developing data processing. And monitoring and optimizing data storage and data processing. However, for scheduling the DP-203 exam, candidates can log in to their Microsoft account and fill in the details.

Microsoft DP-203 Exam Course Outline

Microsoft provides a course outline for the DP-203 exam that covers the major sections for getting better understanding during the preparation time. The topics are:

Topic 1: Design and Implement Data Storage (15-20%)

Implement a partition strategy

- Implement a partition strategy for files (Microsoft Documentation: Copy new files based on time partitioned file name using the Copy Data tool)

- Implement a partition strategy for analytical workloads (Microsoft Documentation: Best practices when using Delta Lake, Partitions in tabular models)

- Implement a partition strategy for streaming workloads

- Implement a partition strategy for Azure Synapse Analytics (Microsoft Documentation: Partitioning tables)

- identify when partitioning is needed in Azure Data Lake Storage Gen2

Design and implement the data exploration layer

- Create and execute queries by using a compute solution that leverages SQL serverless and Spark cluster (Microsoft Documentation: Azure Synapse SQL architecture, Azure Synapse Dedicated SQL Pool Connector for Apache Spark)

- Recommend and implement Azure Synapse Analytics database templates (Microsoft Documentation: Azure Synapse database templates, Lake database templates)

- Push new or updated data lineage to Microsoft Purview (Microsoft Documentation: Push Data Factory lineage data to Microsoft Purview)

- Browse and search metadata in Microsoft Purview Data Catalog

Topic 2: Develop Data Processing (40-45%)

Ingest and transform data

- Design and implement incremental loads

- transform data by using Apache Spark (Microsoft Documentation: Transform data in the cloud by using a Spark activity)

- Transform data by using Transact-SQL (T-SQL) in Azure Synapse Analytics (Microsoft Documentation: SQL Transformation)

- Ingest and transform data by using Azure Synapse Pipelines or Azure Data Factory (Microsoft Documentation: Pipelines and activities in Azure Data Factory and Azure Synapse Analytics)

- transform data by using Azure Stream Analytics (Microsoft Documentation: Transform data by using Azure Stream Analytics)

- cleanse data (Microsoft Documentation: Overview of Data Cleansing, Clean Missing Data module)

- Handle duplicate data (Microsoft Documentation: Handle duplicate data in Azure Data Explorer)

- Avoiding duplicate data by using Azure Stream Analytics Exactly Once Delivery

- Handle missing data

- Handle late-arriving data (Microsoft Documentation: Understand time handling in Azure Stream Analytics)

- split data (Microsoft Documentation: Split Data Overview, Split Data module)

- shred JSON

- encode and decode data (Microsoft Documentation: Encode and Decode SQL Server Identifiers)

- configure error handling for a transformation (Microsoft Documentation: Handle SQL truncation error rows in Data Factory, Troubleshoot mapping data flows in Azure Data Factory)

- normalize and denormalize data (Microsoft Documentation: Overview of Normalize Data module, What is Normalize Data?)

- perform data exploratory analysis (Microsoft Documentation: Query data in Azure Data Explorer Web UI)

Develop a batch processing solution

- Develop batch processing solutions by using Azure Data Lake Storage, Azure Databricks, Azure Synapse Analytics, and Azure Data Factory (Microsoft Documentation: Choose a batch processing technology in Azure, Batch processing)

- Use PolyBase to load data to a SQL pool (Microsoft Documentation: Design a PolyBase data loading strategy)

- Implement Azure Synapse Link and query the replicated data (Microsoft Documentation: Azure Synapse Link for Azure SQL Database)

- create data pipelines (Microsoft Documentation: Creating a pipeline, Build a data pipeline)

- scale resources (Microsoft Documentation: Create an automatic formula for scaling compute nodes)

- configure the batch size (Microsoft Documentation: Selecting VM size and image for compute nodes)

- Create tests for data pipelines (Microsoft Documentation: Run quality tests in your build pipeline by using Azure Pipelines)

- integrate Jupyter or Python notebooks into a data pipeline (Microsoft Documentation: Use Jupyter Notebooks in Azure Data Studio, Run Jupyter notebooks in your workspace)

- upsert data

- Reverse data to a previous state

- Configure exception handling (Microsoft Documentation: Azure Batch error handling and detection)

- configure batch retention (Microsoft Documentation: Azure Batch best practices)

- Read from and write to a delta lake (Microsoft Documentation: What is Delta Lake?)

Develop a stream processing solution

- Create a stream processing solution by using Stream Analytics and Azure Event Hubs (Microsoft Documentation: Stream processing with Azure Stream Analytics)

- process data by using Spark structured streaming (Microsoft Documentation: What is Structured Streaming? Apache Spark Structured Streaming)

- Create windowed aggregates (Microsoft Documentation: Stream Analytics windowing functions, Windowing functions)

- handle schema drift (Microsoft Documentation: Schema drift in mapping data flow)

- process time-series data (Microsoft Documentation: Time handling in Azure Stream Analytics, What is Time series solutions?)

- processing data across partitions (Microsoft Documentation: Data partitioning guidance, Data partitioning strategies)

- Process within one partition

- configure checkpoints and watermarking during processing (Microsoft Documentation: Checkpoint and replay concepts, Example of watermarks)

- scale resources (Microsoft Documentation: Streaming Units, Scale an Azure Stream Analytics job)

- Create tests for data pipelines

- optimize pipelines for analytical or transactional purposes (Microsoft Documentation: Query parallelization in Azure Stream Analytics, Optimize processing with Azure Stream Analytics using repartitioning)

- handle interruptions (Microsoft Documentation: Stream Analytics job reliability during service updates)

- Configure exception handling (Microsoft Documentation: Exceptions and Exception Handling)

- upsert data (Microsoft Documentation: Azure Stream Analytics output to Azure Cosmos DB)

- replay archived stream data (Microsoft Documentation: Checkpoint and replay concepts)

Manage batches and pipelines

- trigger batches (Microsoft Documentation: Trigger a Batch job using Azure Functions)

- handle failed batch loads (Microsoft Documentation: Check for pool and node errors)

- validate batch loads (Microsoft Documentation: Error checking for job and task)

- manage data pipelines in Data Factory or Azure Synapse Pipelines (Microsoft Documentation: Pipelines and activities in Azure Data Factory and Azure Synapse Analytics, What is Azure Data Factory?)

- schedule data pipelines in Data Factory or Synapse Pipelines (Microsoft Documentation: Pipelines and Activities in Azure Data Factory)

- implement version control for pipeline artifacts (Microsoft Documentation: Source control in Azure Data Factory)

- manage Spark jobs in a pipeline (Microsoft Documentation: Monitor a pipeline)

DP-203 Interview Questions

Topic 3: Secure, Monitor and Optimize Data Storage and Data Processing (30-35%)

Implement data security

- Implement data masking (Microsoft Documentation: Dynamic Data Masking)

- Encrypt data at rest and in motion (Microsoft Documentation: Azure Data Encryption at rest, Azure encryption overview)

- Implement row-level and column-level security (Microsoft Documentation: Row-Level Security, Column-level security)

- Implement Azure role-based access control (RBAC) (Microsoft Documentation: What is Azure role-based access control (Azure RBAC)?)

- Implement POSIX-like access control lists (ACLs) for Data Lake Storage Gen2 (Microsoft Documentation: Access control lists (ACLs) in Azure Data Lake Storage Gen2)

- Implement a data retention policy (Microsoft Documentation: Learn about retention policies and retention labels)

- Implement secure endpoints (private and public) (Microsoft Documentation: What is a private endpoint?)

- Implement resource tokens in Azure Databricks (Microsoft Documentation: Authentication for Azure Databricks automation)

- Load a DataFrame with sensitive information

- Write encrypted data to tables or Parquet files (Microsoft Documentation: Parquet format in Azure Data Factory and Azure Synapse Analytics)

- Manage sensitive information

Monitor data storage and data processing

- implement logging used by Azure Monitor (Microsoft Documentation: Overview of Azure Monitor Logs, Collecting custom logs with Log Analytics agent in Azure Monitor)

- configure monitoring services (Microsoft Documentation: Monitoring Azure resources with Azure Monitor, Define Enable VM insights)

- measure performance of data movement (Microsoft Documentation: Overview of Copy activity performance and scalability)

- monitor and update statistics about data across a system (Microsoft Documentation: Statistics in Synapse SQL, UPDATE STATISTICS)

- monitor data pipeline performance (Microsoft Documentation: Monitor and Alert Data Factory by using Azure Monitor)

- measure query performance (Microsoft Documentation: Query Performance Insight for Azure SQL Database)

- schedule and monitor pipeline tests (Microsoft Documentation: Monitor and manage Azure Data Factory pipelines by using the Azure portal and PowerShell)

- interpret Azure Monitor metrics and logs (Microsoft Documentation: Overview of Azure Monitor Metrics, Define Azure platform logs)

- Implement a pipeline alert strategy

Optimize and troubleshoot data storage and data processing

- compact small files (Microsoft Documentation: Explain Auto Optimize)

- handle skew in data (Microsoft Documentation: Resolve data-skew problems by using Azure Data Lake Tools for Visual Studio)

- Handle data spill

- optimize resource management

- tune queries by using indexers (Microsoft Documentation: Automatic tuning in Azure SQL Database and Azure SQL Managed Instance)

- tune queries by using cache (Microsoft Documentation: Performance tuning with a result set caching)

- troubleshoot a failed spark job (Microsoft Documentation: Troubleshoot Apache Spark by using Azure HDInsight, Troubleshoot a slow or failing job on an HDInsight cluster)

- troubleshoot a failed pipeline run, including activities executed in external services (Microsoft Documentation: Troubleshoot Azure Data Factory and Synapse pipelines)

For More: Check Exam DP-203: Data Engineering on Microsoft Azure FAQs

Exam Policies

Microsoft exam policies cover the exam-related details and information with providing the exam giving procedures. These exam policies consist of certain rules that have to be followed during exam time. However, some of the policies include:

Exam retake policy

- This states that candidates who will not be able to pass the exam for the first time must wait 24 hours before retaking the exam. During this time, they can go onto the certification dashboard and reschedule the exam. If this happens for the second time then, they have to wait for at least 14 days before retaking the exam. And, this 14-day waiting period is also inflicted between the third and fourth attempts and then, fourth and fifth attempts. However, candidates can only give any exam five times a year.

Exam reschedule and the cancellation policy

- Microsoft temporarily waives the reschedule and cancellation fee if candidates cancel their exams within 24 hours before the scheduled appointment. However, for rescheduling or canceling an appointment there is no charge if it is executed at least 6 business days prior to your appointment. But, if a candidate cancels or reschedules an exam within 5 business days of your registered exam time then, a fee will be applied.

DP-203 Interview Questions

Preparation Guide for Microsoft DP-203 Exam

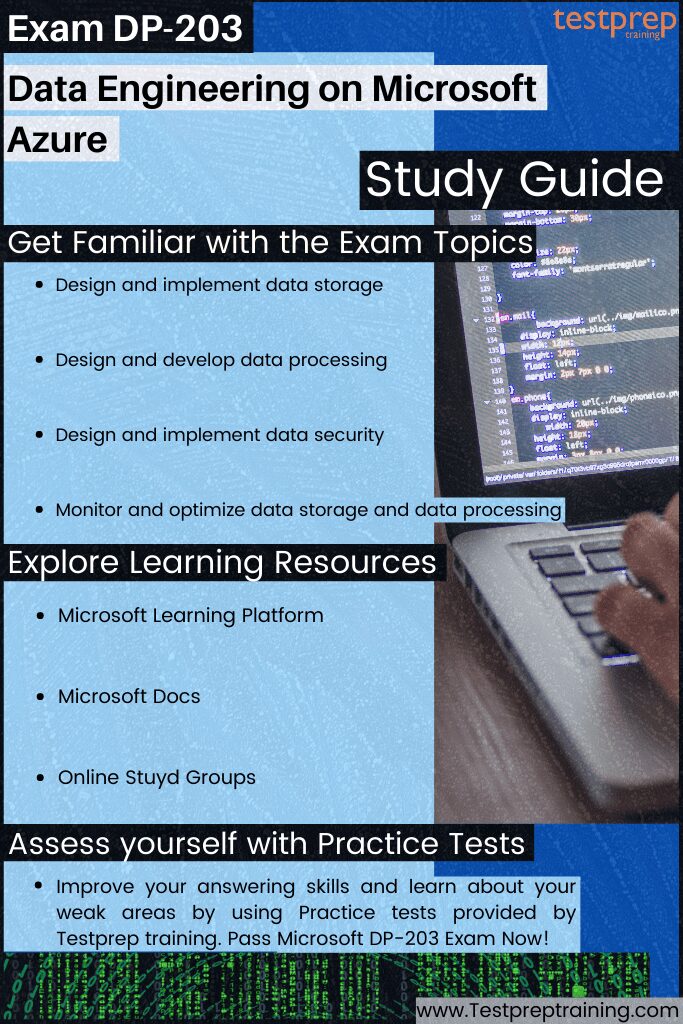

1. Getting Familiar with Exam objectives

For having a better preparation, Microsoft DP-203 exam objectives can be very helpful. As this will help to get familiar with the topics provided for the DP-203 exam. Candidates can go through the sections and subsections to learn about the pattern of the exam. However, for the DP-203 exam, the topics include:

- Firstly, designing and implementing data storage

- Secondly, designing and developing data processing

- Next, design and implement data security

- Lastly, monitoring and optimizing data storage and data processing

2. Microsoft Learning Platform

Microsoft offers learning platforms that cover various study resources to help candidates during exam preparation. For the DP-203 exam preparation, go through the Microsoft official website to get all the necessary information and the exam content outline.

3. Microsoft Docs

Microsoft documentation refers to the source of knowledge that works as a reference for all the topics in the DP-203 exam. This provides detailed information about the exam concepts by covering different scales of different Azure services. Moreover, this consists of modules that will help you gain a lot of knowledge about concepts according to the exam.

4. Online Study Groups

Candidates can take advantage of the online study groups during the exam preparation. That is to say, joining the study groups will help you to stay connected with the professionals who are already on this pathway. Moreover, you can discuss your query or the issue related to the exam in this group and even take the DP-203 exam study notes.

5. Practice Tests

By using DP-203 exam practice tests, you will know about your weak and strong areas. Moreover, you will be able to enhance your answering skills for manning time management thus saving a lot of time during the exam. However, the good way to start taking the DP-203 exam practice tests is after completing a full topic and then try the mock tests for that. As a result, your revision will get stronger and you will get a better understanding. So, find the best DP-203 practice exam tests and crack the exam.