The Migration Process: Google Professional Data Engineer (GCP)

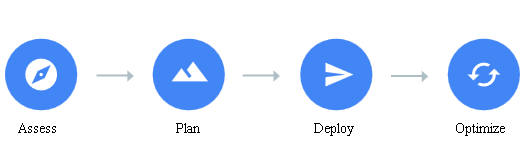

A migration is a journey that involves several phases, with multiple options for reaching the destination, as shown in the diagram.

There are four phases of migration:

- Assess

    - Assess and discover the existing environment

    - Understand the app and environment inventory

    - Identify app dependencies and requirements

    - Perform TCO calculations and app performance benchmarks

- Plan

    - Create the basic cloud infrastructure for workloads

    - Plan how to move the apps

    - Planning covers identity management, organization and project structure, networking, sorting apps, and a prioritized migration strategy

- Deploy

    - Design, implement, and execute the migration

    - Refine cloud resources as needed

- Optimize

    - Analyze and optimize cloud resource utilization

    - Reduce costs

    - Implement automation, ML, and AI services

Assess Phase

- Build an inventory of apps. Work with the teams responsible for each workload in the current environment.

- The inventory should include

- apps

- Dependencies of each app

- Services supporting app infrastructure

- Server configurations

- Network devices, firewalls, and other dedicated hardware.

- For each item gather

- Source code location

- Deployment method

- Network restrictions or security requirements.

- Licensing requirements

- Categorize apps

- Categorize the apps to decide which ones to migrate first

- Assess the complexity and risk involved in migrating each app

- A catalog matrix is used for this purpose
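As an illustration of how a catalog matrix can drive prioritization, the sketch below ranks apps so that low-complexity, low-risk apps are migrated first. The 1 to 5 scoring scale, the field names, and the sample apps are hypothetical and are not part of any Google tool.

```python
from dataclasses import dataclass


@dataclass
class AppEntry:
    """One row of a hypothetical catalog matrix."""
    name: str
    business_value: int  # 1 (low) to 5 (high), assumed scale
    complexity: int      # 1 (simple) to 5 (complex), assumed scale
    risk: int            # 1 (low) to 5 (high), assumed scale
    dependencies: tuple = ()


def migration_order(apps):
    """Sort apps: lowest combined complexity and risk first, then higher value."""
    return sorted(
        apps,
        key=lambda a: (a.complexity + a.risk, -a.business_value, a.name),
    )


# Sample data, invented for illustration only.
catalog = [
    AppEntry("billing", 5, 4, 5, ("orders-db",)),
    AppEntry("intranet-wiki", 2, 1, 1),
    AppEntry("reporting", 3, 2, 2, ("orders-db",)),
]

for app in migration_order(catalog):
    print(app.name, app.complexity + app.risk)
```

Starting with the simplest, least risky apps is one common choice; a team could just as well weight business value more heavily by changing the sort key.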

Transferring large datasets

Transferring large datasets involves several steps, such as:

- building the right team

- planning early

- testing transfer plan before implementing

Data transfer

- The process of moving data without transforming it

- It involves

- Making a transfer plan to decide transfer option and get approvals

- Coordinating the team that executes the transfer

- Choosing the right transfer tool based on resources, cost, and time

- Overcoming data transfer challenges, such as insufficient bandwidth, moving actively used datasets, protecting and monitoring the data during transfer, and ensuring that the transfer succeeds

- Other types of data transfer projects

- For ETL transformations, use Dataflow.

- To migrate a database and its related apps, consider Cloud Spanner.

- For virtual machine (VM) instance migration, use Migrate for Compute Engine.

Step 1: Assembling team

Planning a transfer typically requires personnel with the following roles and responsibilities:

- Storage, IT, and network admins to execute the transfer

- Data owners or governors and legal personnel to approve the transfer

Step 2: Collecting requirements and available resources

- Identify datasets to move.

- Use Data Catalog to organize data into logical groupings

- Work with teams to update these groupings.

- Identify datasets you can move.

- Check whether any regulatory, security, or other factors prohibit the transfer

- Remove sensitive data or reorganize data as needed, using Dataflow, Cloud Data Fusion, or Cloud Composer.

- For movable datasets, decide where to transfer each dataset.

- Select storage option to store data.

- Understand data access policies to maintain after migration.

- Any region-specific or geography-specific requirements

- The data structure in the destination

- Whether the transfer is ongoing or one-off

- For movable datasets, also list the following

- Time: when to transfer

- Cost: the budget available

- People: who will execute the transfer

- Bandwidth (for online transfers): how much bandwidth can be allocated to the transfer

Step 3: Evaluating transfer options

Data transfer options are selected based on the following factors:

- Cost

- Time

- Offline versus online transfer options

- Transfer tools and technologies

- Security

Cost:

It includes

- Networking costs

- Egress charges if any

- bandwidth charges for transfer

- Storage and operation costs for Cloud Storage during and after the transfer of data

- Personnel costs for support

Time:

- Time required for the transfer

- When to undertake the transfer

- Connection options for data transfer between a private data center and GCP

- A public internet connection by using a public API

- Direct Peering by using a public API

- Cloud Interconnect by using a private API
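To make the time factor concrete, the sketch below estimates how long an online transfer takes for a given dataset size and link speed. The function name and the 80% default link utilization are assumptions for illustration; real throughput depends on the connection option, ISP capacity, and competing workloads.

```python
def transfer_days(data_tb, bandwidth_mbps, utilization=0.8):
    """Estimate online transfer time in days.

    data_tb: dataset size in decimal terabytes (1 TB = 1e12 bytes).
    bandwidth_mbps: nominal link speed in megabits per second.
    utilization: assumed fraction of the link usable for the transfer.
    """
    if bandwidth_mbps <= 0:
        raise ValueError("bandwidth_mbps must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    bits = data_tb * 1e12 * 8
    seconds = bits / (bandwidth_mbps * 1e6 * utilization)
    return seconds / 86400


# 100 TB over a 1 Gbps link at 80% utilization.
print(round(transfer_days(100, 1000), 1))
```

Comparing this estimate with the project deadline is a simple way to decide whether an online transfer is feasible at all.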

Connecting with a public internet connection –

- Less predictable

- Dependent on ISP capacity

- Low cost

- Google offers peering arrangements if applicable

Connecting with Direct Peering –

- Access the GCP network with fewer network hops

- Direct Peering connects the ISP network and Google’s Edge Points of Presence (PoPs)

- A registered Autonomous System (AS) number must be set up, along with around-the-clock contact with a network operations center.

Connecting with Cloud Interconnect –

- Cloud Interconnect offers a direct connection to GCP through Cloud Interconnect service providers.

- No need to send data over the public internet

- More consistent throughput for large data transfers

- SLAs for network availability and performance

Online versus offline transfer –

- Decide whether to transfer data over a network (online) or by shipping storage hardware (offline).

Deciding among Google’s transfer options

Factors to choose a transfer option

| Where you’re moving data from | Scenario | Suggested products |
| --- | --- | --- |
| Another cloud provider (for example, Amazon Web Services or Microsoft Azure) to Google Cloud | Any | Storage Transfer Service |
| Cloud Storage to Cloud Storage (two different buckets) | Any | Storage Transfer Service |
| Private data center to Google Cloud | Enough bandwidth to meet the project deadline, for less than a few TB of data | gsutil |
| Private data center to Google Cloud | Enough bandwidth to meet the project deadline, for more than a few TB of data | Storage Transfer Service for on-premises data |
| Private data center to Google Cloud | Not enough bandwidth to meet the project deadline | Transfer Appliance |
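The decision table can be written as a small helper, which is handy for checking that every scenario maps to exactly one product. This is only a sketch: the source labels and the `small_transfer_tb` cutoff are hypothetical, since the table only says "a few TB".

```python
def suggest_transfer_product(source, data_tb=0.0, bandwidth_sufficient=True,
                             small_transfer_tb=1.0):
    """Map a transfer scenario to the product suggested in the table.

    source: "other_cloud", "cloud_storage", or "on_premises" (labels invented here).
    small_transfer_tb: assumed cutoff standing in for "a few TB".
    """
    if source in ("other_cloud", "cloud_storage"):
        return "Storage Transfer Service"
    if source != "on_premises":
        raise ValueError(f"unknown source: {source!r}")
    if not bandwidth_sufficient:
        return "Transfer Appliance"
    if data_tb < small_transfer_tb:
        return "gsutil"
    return "Storage Transfer Service for on-premises data"


print(suggest_transfer_product("on_premises", data_tb=0.5))
print(suggest_transfer_product("on_premises", data_tb=200, bandwidth_sufficient=False))
```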

gsutil

- Suitable for smaller transfers of on-premises data (less than a few TB)

- Included in the default path when using Cloud Shell.

- Provided by default with the Cloud SDK.

- Manages Cloud Storage buckets and objects.

- Functions provided:

- Copying data between the local file system and Cloud Storage

- Moving and renaming objects

- Performing rsync-style incremental syncs to a Cloud Storage bucket

- Use scenarios

- Transfers performed on an as-needed basis, or by users in command-line sessions.

- Transferring only a few files, very large files, or both.

- Consuming the output of a program, such as streaming output to Cloud Storage.

- Watching and syncing a directory that holds a relatively small number of files.

- To use gsutil, create a Cloud Storage bucket and copy data to it.

- For security, transfers use HTTPS.

- For large dataset transfers

- Use gsutil -m for multi-threaded transfers.

- Use parallel composite uploads for a single large file; gsutil splits the file into smaller chunks and uploads them in parallel to increase transfer speed.
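The options above can be combined on one command line. The sketch below only builds and prints the command rather than running it; the helper name, the local path, the bucket name, and the 150M threshold are placeholders chosen for illustration. `-m` and `-o GSUtil:parallel_composite_upload_threshold=...` are top-level gsutil options, so they go before `cp`.

```python
import shlex


def gsutil_cp_command(source, bucket, parallel=False, composite_threshold=None):
    """Build a gsutil copy command as an argument list (hypothetical helper)."""
    cmd = ["gsutil"]
    if composite_threshold:
        # Files larger than this size are uploaded as parallel composite uploads.
        cmd += ["-o", f"GSUtil:parallel_composite_upload_threshold={composite_threshold}"]
    if parallel:
        # -m copies many files concurrently.
        cmd.append("-m")
    cmd += ["cp", "-r", source, f"gs://{bucket}/"]
    return cmd


# Placeholder path and bucket name.
command = gsutil_cp_command("/data/exports", "example-bucket",
                            parallel=True, composite_threshold="150M")
print(shlex.join(command))
```

Passing the resulting list to `subprocess.run(command, check=True)` would execute the transfer on a machine where gsutil is installed and authenticated.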

Storage Transfer Service

- for large transfers of on-premises data

- Designed for large-scale transfers (up to petabytes of data or billions of files).

- supports full copies or incremental copies,

- Offers graphical user interface

- Usage scenarios

- Sufficient bandwidth is available to move the data volumes.

- Supporting a large number of internal users who may find a command-line tool like gsutil difficult to use.

- Error reporting and a record of the data moved are needed.

- The impact of transfers on other workloads must be limited.

- Recurring transfers must run on a schedule.

- Install agents on-premises to use Storage Transfer Service for on-premises data.

- Agents run in Docker containers, which you can run directly or orchestrate with Kubernetes.

- After setup, start transfers by providing

- a source directory

- destination bucket

- time or schedule

- Storage Transfer Service recursively crawls subdirectories and files in the source directory and creates objects with a corresponding name in Cloud Storage

- Automatically retries the transfer when it encounters transient errors

- You can monitor the number of files moved and the overall transfer speed

- After the transfer, a tab-delimited (TSV) file lists all files transferred and any error messages

- Best Practices

- Use an identical agent setup on every machine.

- More agents generally mean more speed, so deploy several agents

- Bandwidth caps can protect other workloads

- Plan time for reviewing errors.

- Set up Cloud Monitoring for long-running transfers.

Transfer Appliance –

- Used for larger transfers when network bandwidth is limited or costly

- Usage scenarios:

- data at a remote location with limited / no bandwidth.

- Required bandwidth is not available

- Involves receiving and shipping back the hardware

- It is Google-owned hardware.

- Available only in specific countries.

- Factors for choosing it are cost and speed.

- Request an appliance in the Cloud Console, detailing the data to transfer

- The approximate turnaround time for an appliance to be shipped, loaded with data, shipped back, and rehydrated on Google Cloud is 50 days.

- The total cost of the process for the 480 TB device is less than $3,000.

Storage Transfer Service for cloud-to-cloud transfers –

- Storage Transfer Service is a fully managed and highly scalable data transfer service

- Automates transfers from other public clouds into Cloud Storage.

- supports transfers into Cloud Storage from Amazon S3 and HTTP.

- For Amazon S3,

- An access key and the S3 bucket details are needed

- Daily copies of any modified objects are also supported

- Transfers from Cloud Storage to Amazon S3 are not supported.

- For HTTP, a list of public URLs in a specified format is needed

- A script is needed to supply the size of each file in bytes, along with the Base64-encoded MD5 hash of the file contents.
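The script mentioned above could look like the sketch below, which emits one tab-separated row per file: the URL, the size in bytes, and the Base64-encoded MD5 hash. It assumes the URL list starts with a `TsvHttpData-1.0` header line and that rows are sorted by URL, and it assumes a local copy of each file is available for hashing. The function names, the example URL, and the sample file are illustrative.

```python
import base64
import hashlib
import os
import tempfile


def url_list_row(url, local_path):
    """Return one TSV row: URL, size in bytes, Base64-encoded MD5."""
    md5 = hashlib.md5()
    with open(local_path, "rb") as handle:
        # Hash in 1 MiB chunks so large files are not loaded into memory.
        for chunk in iter(lambda: handle.read(1024 * 1024), b""):
            md5.update(chunk)
    size = os.path.getsize(local_path)
    digest = base64.b64encode(md5.digest()).decode("ascii")
    return f"{url}\t{size}\t{digest}"


def build_url_list(entries):
    """entries: iterable of (public_url, local_copy_path) pairs."""
    rows = [url_list_row(url, path) for url, path in sorted(entries)]
    return "\n".join(["TsvHttpData-1.0"] + rows) + "\n"


# Demo with a throwaway file; the URL is a placeholder.
with tempfile.TemporaryDirectory() as tmp:
    sample = os.path.join(tmp, "sample.txt")
    with open(sample, "wb") as handle:
        handle.write(b"hello")
    demo_list = build_url_list([("https://example.com/sample.txt", sample)])

print(demo_list, end="")
```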

Security

- Primary focus during transfer

- different levels of security offered by GCP

- consider protection of

- data at rest (authorization and access to the source and destination storage system),

- data in transit,

- access to the transfer product.

Security offered by product.

| Product | Data at rest | Data in transit | Access to transfer product |
| --- | --- | --- | --- |
| Transfer Appliance | All data is encrypted. | Protected with keys managed by the customer. | Anyone can order an appliance, but to use it they need access to the data source. |
| gsutil | Access keys required to access Cloud Storage, which is encrypted at rest. | Data is sent over HTTPS and encrypted in transit. | Anyone can download and run gsutil. They must have permissions to buckets and local files in order to move data. |
| Storage Transfer Service for on-premises data | Access keys required to access Cloud Storage, which is encrypted at rest. The agent process can access local files as OS permissions allow. | Data is sent over HTTPS and encrypted in transit. | You must have object editor permissions to access Cloud Storage buckets. |
| Storage Transfer Service | Access keys required for non-Google Cloud resources (for example, Amazon S3). Access keys are required to access Cloud Storage, which is encrypted at rest. | Data is sent over HTTPS and encrypted in transit. | You must have Cloud IAM permissions for the service account to access the source and object editor permissions for any Cloud Storage buckets. |

Step 4: Preparing for transfer

Steps involved are

- Pricing and ROI estimation.

- Functional testing: confirm that the product can be set up and that network connectivity works.

- Confirm that you can install and operate the transfer.

- List the issues that could block data movement.

- List operational needs, such as training.

- Performance testing: run a transfer on a large sample of data to confirm speed and fix bottlenecks.

Step 5: Ensuring the integrity of transfer

- Enable versioning and backup on the destination to limit the damage from accidental deletes.

- Validate data before removing the source data.
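One way to validate before deleting the source is to compare a manifest of source checksums with a manifest of destination checksums. The sketch below assumes both manifests are already available as dictionaries mapping object names to checksum strings; how they are produced (for example, from local hashing and from bucket listings) is left out, and all names and values are hypothetical.

```python
def compare_manifests(source, destination):
    """Return (missing, mismatched) object names, each sorted.

    source, destination: dicts mapping object name -> checksum string.
    """
    missing = sorted(name for name in source if name not in destination)
    mismatched = sorted(
        name for name in source
        if name in destination and source[name] != destination[name]
    )
    return missing, mismatched


def safe_to_delete_source(source, destination):
    """True only if every source object exists at the destination with the same checksum."""
    missing, mismatched = compare_manifests(source, destination)
    return not missing and not mismatched


# Invented manifests for illustration.
src = {"a.csv": "md5-1", "b.csv": "md5-2", "c.csv": "md5-3"}
dst = {"a.csv": "md5-1", "b.csv": "md5-X"}

print(compare_manifests(src, dst))
print(safe_to_delete_source(src, dst))
```

Extra objects at the destination are ignored here on purpose, since they do not put the source data at risk.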