SnowPro Advanced: Data Engineer

The SnowPro Advanced: Data Engineer certification validates your knowledge and skills in applying comprehensive data engineering principles with Snowflake, including integrating data from APIs and on-premises sources. In addition to transforming, replicating, and sharing data across platforms, the exam covers building end-to-end near-real-time data streams. The exam is intended for candidates who want to become cloud data engineers.

Recommended Knowledge and Experience

- 2+ years of data engineering experience, including practical experience using Snowflake for data engineering tasks.

- Candidates should have a working knowledge of RESTful APIs, SQL, semi-structured datasets, and cloud-native concepts.

- Programming experience is a plus.

Exam Format

- Exam Version: DEA-C01

- Total Number of Questions: 65

- Question Types: Multiple Select, Multiple Choice

- Time Limit: 115 minutes

- Languages: English

- Registration Fee: $375 USD

- Passing Score: 750 or higher (scaled scoring from 0 to 1000)

- Unscored Content: Exams may include unscored items to gather statistical information. These items are not identified on the form and do not affect your score, and additional time is factored in to account for this content.

- Prerequisites: SnowPro Core Certified

- Delivery Options: Online Proctoring and Onsite Testing Centers

For more details, see the SnowPro Advanced: Data Engineer FAQs.

Exam Outline

The SnowPro Advanced: Data Engineer exam covers the following domains:

1.0 Domain: Data Movement

1.1 Given a data set, load data into Snowflake.

- Outline considerations for data loading

- Define data loading features and potential impact

1.2 Ingest data of various formats through the mechanics of Snowflake.

- Required data formats

- Outline Stages

1.3 Troubleshoot data ingestion.

1.4 Design, build, and troubleshoot continuous data pipelines.

- Design a data pipeline that enforces uniqueness on data that is not inherently unique

- Stages

- Tasks

- Streams

- Snowpipe

- Auto-ingest as compared to the REST API
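Snowflake does not enforce PRIMARY KEY or UNIQUE constraints on standard tables (only NOT NULL is enforced), so a pipeline that needs unique keys usually enforces uniqueness itself, for example with a MERGE statement or a QUALIFY ROW_NUMBER() filter. The plain-Python sketch below models that logic outside Snowflake: it keeps only the latest record per key from an incoming batch, then upserts the result into a target keyed by ID. The record fields (`id`, `updated_at`, `value`) and the function names are hypothetical, and the code is an illustration of the idea rather than Snowflake code.

```python
from typing import Dict, List, TypedDict


class Record(TypedDict):
    id: int
    updated_at: int  # hypothetical change timestamp; higher means newer
    value: str


def dedupe_latest(batch: List[Record]) -> Dict[int, Record]:
    """Keep the newest record per id, similar to filtering on ROW_NUMBER() = 1."""
    latest: Dict[int, Record] = {}
    for rec in batch:
        current = latest.get(rec["id"])
        # On a timestamp tie, the first record seen is kept.
        if current is None or rec["updated_at"] > current["updated_at"]:
            latest[rec["id"]] = rec
    return latest


def merge_into(target: Dict[int, Record], batch: List[Record]) -> Dict[int, Record]:
    """Upsert the deduplicated batch into the target, MERGE-style."""
    for key, rec in dedupe_latest(batch).items():
        existing = target.get(key)
        # "When matched" and newer: update. "When not matched": insert.
        if existing is None or rec["updated_at"] > existing["updated_at"]:
            target[key] = rec
    return target


if __name__ == "__main__":
    target: Dict[int, Record] = {1: {"id": 1, "updated_at": 1, "value": "a"}}
    batch: List[Record] = [
        {"id": 1, "updated_at": 3, "value": "a-v3"},
        {"id": 1, "updated_at": 2, "value": "a-v2"},  # older duplicate is dropped
        {"id": 2, "updated_at": 1, "value": "b"},
    ]
    merge_into(target, batch)
    print(sorted((k, v["value"]) for k, v in target.items()))
```

Deduplicating the batch before the upsert matters because a MERGE whose source contains several rows for the same target key can produce nondeterministic results.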

1.5 Analyze and differentiate types of data pipelines.

- Understand Snowpark architecture (client vs. server)

- Create and deploy UDFs and Stored Procedures using Snowpark

- Design and use the Snowflake SQL API

1.6 Install, configure, and use connectors to connect to Snowflake.

1.7 Design and build data-sharing solutions.

- Implement a data share

- Create a secure view

- Implement row-level filtering

1.8 Outline when to use External Tables and define how they work.

- Partitioning external tables

- Materialized views

- Partitioned data unloading

2.0 Domain: Performance Optimization

2.1 Troubleshoot underperforming queries.

- Identify underperforming queries

- Outline telemetry around the operation

- Increase efficiency

- Identify the root cause

2.2 Given a scenario, configure a solution for the best performance.

- Scale out as compared to scale in

- Clustering as compared to increasing warehouse size

- Query complexity

- Micro-partitions and the impact of clustering

- Materialized views

- Search optimization

2.3 Outline and use caching features.

2.4 Monitor continuous data pipelines.

- Snowpipe

- Stages

- Tasks

- Streams

3.0 Domain: Storage and Data Protection

3.1 Implement data recovery features in Snowflake.

- Time Travel

- Fail-safe

3.2 Outline the impact of Streams on Time Travel.

3.3 Use System Functions to analyze Micro-partitions.

- Clustering depth

- Clustering keys

3.4 Use Time Travel and Cloning to create new development environments.

- Back up databases

- Test changes before deployment

- Roll back changes

4.0 Domain: Security

4.1 Outline Snowflake security principles.

- Authentication methods (Single Sign-On (SSO), key pair authentication, username/password, multi-factor authentication (MFA))

- Role-Based Access Control (RBAC)

- Column-level security and how data masking works with RBAC to secure sensitive data

4.2 Outline the system-defined roles and when they should be applied.

- The purpose of each of the system-defined roles including best practices used in each case

- The primary differences between SECURITYADMIN and USERADMIN roles

- The difference between the purpose and usage of the USERADMIN/SECURITYADMIN roles and SYSADMIN

4.3 Manage Data Governance.

- Explain the options available to support column-level security including Dynamic Data Masking and External Tokenization

- Explain the options available to support row-level security using Snowflake Row Access Policies

- Use DDL required to manage Dynamic Data Masking and Row Access Policies

- Use methods and best practices for creating and applying masking policies on data

- Use methods and best practices for Object Tagging
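In Snowflake, a row access policy returns a BOOLEAN that decides whether each row is visible, and a masking policy returns either the real or a masked column value, typically based on conditions such as the current role. The plain-Python sketch below models that evaluation logic only; the roles, the role-to-region mapping table, and the column names are hypothetical, and no Snowflake DDL or API is involved.

```python
from typing import Dict, List, Set

Row = Dict[str, str]

# Hypothetical mapping table: which regions each role may see.
REGION_ACCESS: Dict[str, Set[str]] = {
    "SALES_EU": {"EU"},
    "SALES_US": {"US"},
    "ANALYST_GLOBAL": {"EU", "US"},
}

# Hypothetical set of roles allowed to see unmasked email addresses.
UNMASKED_ROLES: Set[str] = {"ANALYST_GLOBAL"}


def row_access_policy(role: str, row: Row) -> bool:
    """Return True if the row is visible to the role (row-level security)."""
    return row["region"] in REGION_ACCESS.get(role, set())


def email_mask(role: str, value: str) -> str:
    """Return the real value for authorized roles, otherwise a masked string."""
    return value if role in UNMASKED_ROLES else "*****"


def query(role: str, table: List[Row]) -> List[Row]:
    """This sketch filters rows first, then masks the email column."""
    visible = [r for r in table if row_access_policy(role, r)]
    return [{**r, "email": email_mask(role, r["email"])} for r in visible]


if __name__ == "__main__":
    customers = [
        {"name": "Ana", "region": "EU", "email": "ana@example.com"},
        {"name": "Bo", "region": "US", "email": "bo@example.com"},
    ]
    print(query("SALES_EU", customers))
    # [{'name': 'Ana', 'region': 'EU', 'email': '*****'}]
```

Unknown roles see no rows at all, which mirrors the usual best practice of defaulting to deny when a role is not listed in the mapping table.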

5.0 Domain: Data Transformation

5.1 Define User-Defined Functions (UDFs) and outline how to use them.

- Secure UDFs

- SQL UDFs

- JavaScript UDFs

- Returning a table value compared to a scalar value

5.2 Define and create External Functions.

- Secure external functions

5.3 Design, build, and leverage Stored Procedures.

- Transaction management

5.4 Handle and transform semi-structured data.

- Traverse and transform semi-structured data to structured data

- Transform structured to semi-structured data
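In Snowflake, nested JSON stored in a VARIANT column is commonly traversed with path notation and LATERAL FLATTEN, and rows can be turned back into semi-structured values with functions such as OBJECT_CONSTRUCT and ARRAY_AGG. The plain-Python sketch below mimics both directions on a hypothetical order document; the field names are illustrative, and the code does not call Snowflake.

```python
import json
from typing import Any, Dict, List


def flatten_orders(doc: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Explode the nested items array into one flat row per item (FLATTEN-style)."""
    return [
        {
            "order_id": doc["order_id"],
            "customer": doc["customer"]["name"],  # traverse a nested object path
            "sku": item["sku"],
            "qty": item["qty"],
        }
        for item in doc["items"]
    ]


def nest_orders(rows: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Group flat rows back into one nested document per order (ARRAY_AGG-style)."""
    grouped: Dict[Any, Dict[str, Any]] = {}
    for r in rows:
        doc = grouped.setdefault(
            r["order_id"],
            {"order_id": r["order_id"], "customer": {"name": r["customer"]}, "items": []},
        )
        doc["items"].append({"sku": r["sku"], "qty": r["qty"]})
    return list(grouped.values())


if __name__ == "__main__":
    raw = (
        '{"order_id": 7, "customer": {"name": "Ana"}, '
        '"items": [{"sku": "A1", "qty": 2}, {"sku": "B2", "qty": 1}]}'
    )
    rows = flatten_orders(json.loads(raw))
    print(rows)
    print(nest_orders(rows) == [json.loads(raw)])  # True: the round trip restores the document
```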

5.5 Use Snowpark for data transformation.

- Query and filter data using the Snowpark library

- Perform data transformations using Snowpark (e.g., aggregations)

- Join Snowpark DataFrames

Preparation Guide for SnowPro Advanced: Data Engineer Exam

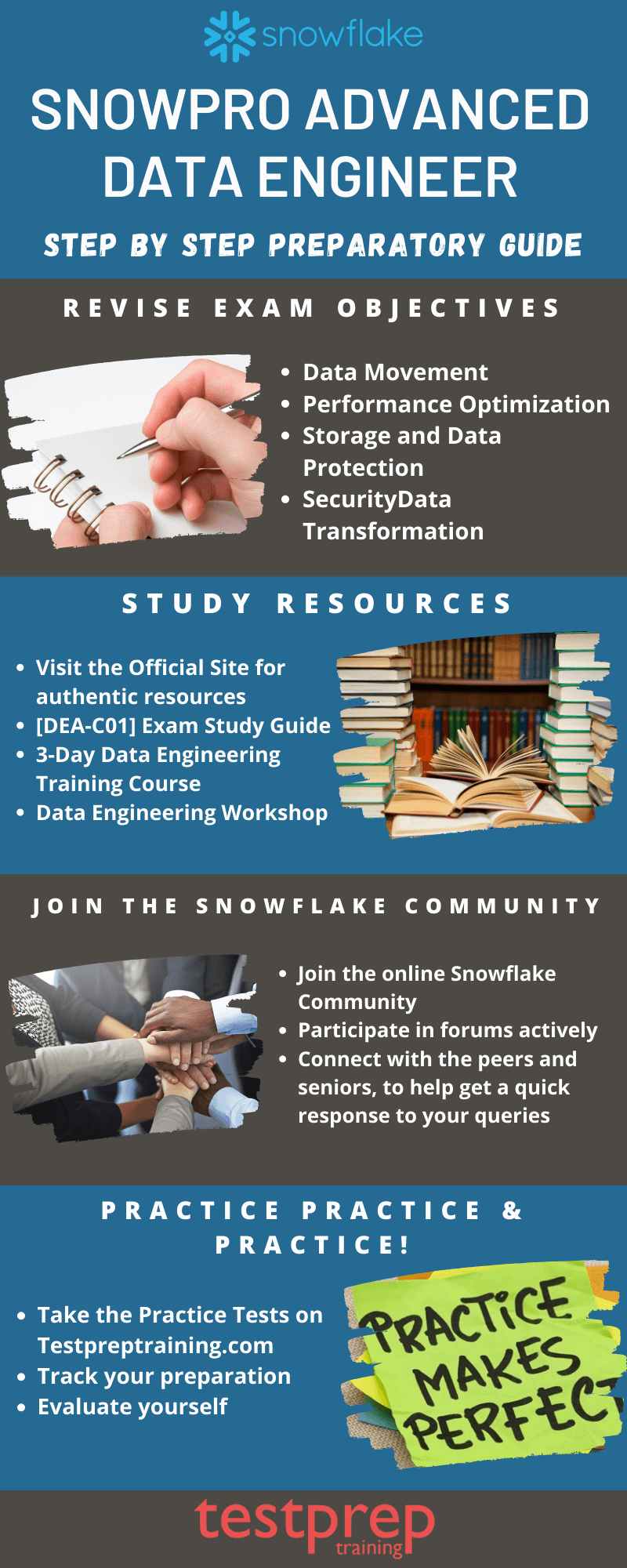

[DEA-C01] Exam Study Guide

The study guide contains a detailed breakdown of the exam domains, weightings, and objectives, along with links to the Snowflake documentation for each topic and additional resources (videos, documents, blogs, and exercises) to help you prepare for the SnowPro Advanced: Data Engineer certification exam. Note, however, that the study guide does not cover all of the content on the exam and cannot guarantee success.

Official Training

Designed for key stakeholders who access and query datasets for analytic tasks and build data pipelines into Snowflake, this 3-day course covers key Snowflake concepts, features, considerations, and best practices. These stakeholders are typically Snowflake database application developers and data engineers. The course includes lectures, demos, labs, and discussions.

Badge 5: Data Engineering Workshop

In the Data Engineering Workshop (DNGW), participants explore the role of data engineers and their contribution to data-driven organizations, along with date and time data types, tasks, pipes, and MERGE statements. The workshop is highly interactive, combining reflection questions, hands-on lab work, and automated lab checks. It has a light-hearted tone, is scenario-driven, and is rich in metaphors.

Join the Snowflake Community

With the Snowflake Community, you can learn more about the Snowflake Cloud Data Platform, submit and manage support cases, interact with peers, hear best practices from Snowflake experts, attend in-person meetups and events, share your knowledge, and stay connected to everyone using Snowflake.

Evaluate yourself with Practice Tests

Taking SnowPro Advanced: Data Engineer practice exams helps you identify your strong and weak areas so that you can focus your study where it is most needed. Practice also improves your answering speed, which matters when you have 115 minutes for 65 questions. Start practicing now!