Service tiers in the DTU-based purchase model for Sizing

AZ-304: Microsoft Azure Architect Design is retired. AZ-305 replacement is available.

Here, we'll learn about the service tiers in the DTU-based purchase model. The tiers are differentiated by a range of compute sizes, each with a fixed amount of included storage, a fixed retention period for backups, and a fixed price. All service tiers in the DTU-based purchase model provide the flexibility to change compute sizes with minimal downtime.

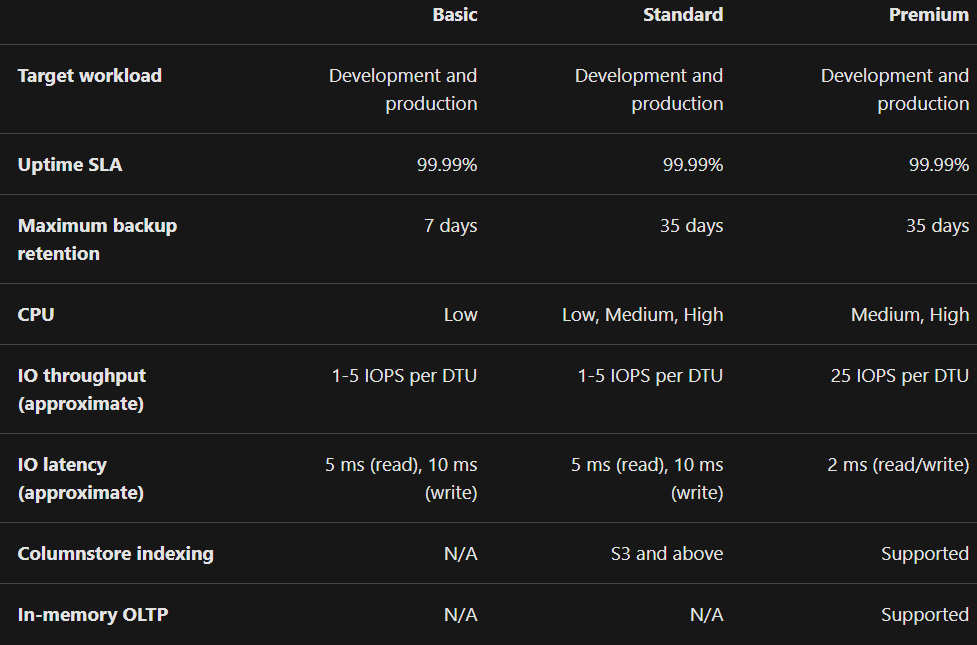

Comparing the DTU-based service tiers

Choosing a service tier depends on business continuity, storage, and performance requirements.

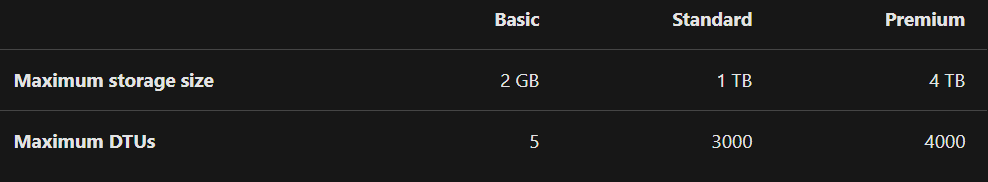

Single database DTU and storage limits

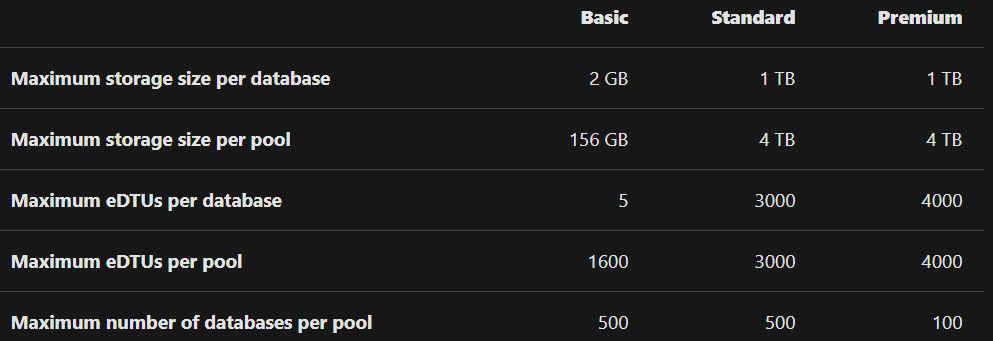

Compute sizes are expressed in Database Transaction Units (DTUs) for single databases and in elastic Database Transaction Units (eDTUs) for elastic pools.

Elastic pool eDTU, storage, and pooled database limits

DTU Benchmark

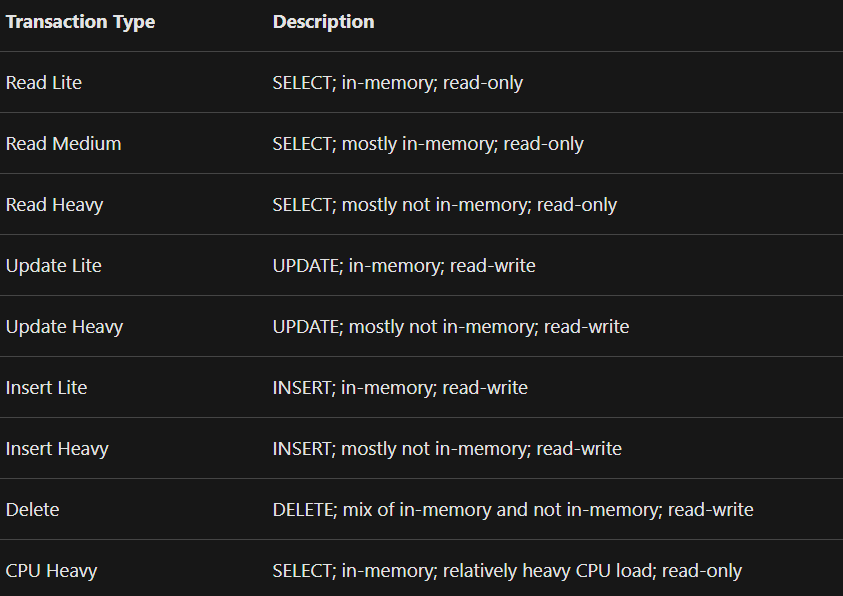

The physical characteristics (CPU, memory, IO) associated with each DTU measure are calibrated using a benchmark that simulates a real-world database workload.

Correlating benchmark results to real world database performance

All benchmarks are representative and indicative only. The transaction rates achieved with the benchmark application will not be the same as those achieved with other applications. The benchmark comprises a collection of different transaction types run against a schema containing a range of tables and data types. Its goal is to provide a reasonable guide to the relative performance of a database when scaling up or down between compute sizes.

Schema

The schema has enough variety and complexity to support a broad range of operations. The benchmark runs against a database of six tables, which fall into three categories: fixed-size, scaling, and growing. There are two fixed-size tables, three scaling tables, and one growing table. Fixed-size tables have a constant number of rows. Scaling tables have a cardinality that is proportional to database performance. The growing table is sized like a scaling table on initial load, but its cardinality then changes over the course of the benchmark run as rows are inserted and deleted.

The schema also includes a mix of data types, including integer, numeric, character, and date/time. It has primary and secondary keys but no foreign keys.

Transactions

The workload consists of nine transaction types, each designed to highlight a particular set of system characteristics in the database engine and system hardware.

Users and pacing

The benchmark workload is driven by a tool that submits transactions across a set of connections to simulate the behavior of a number of concurrent users. Although all of the connections and transactions are machine generated, for simplicity we refer to these connections as “users.” Each user operates independently of all other users, but all users perform the same cycle of steps shown below:

- Establish a database connection.

- Select a transaction at random (from a weighted distribution).

- Perform the selected transaction and measure the response time.

- Wait for a pacing delay, then repeat from the selection step until signaled to exit.

- Close the database connection.

- Exit.
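The cycle above can be sketched in Python. This is a minimal illustration, not the actual benchmark driver: `FakeConnection`, `run_user`, and the transaction names and weights passed in are all hypothetical, and the sketch assumes each user repeats the select, perform, and wait steps a fixed number of times before closing the connection.

```python
import random
import time


class FakeConnection:
    """Hypothetical stand-in for a real database connection."""

    def __init__(self):
        self.open = True

    def execute(self, transaction_name):
        # A real driver would run the transaction against the database here.
        return transaction_name

    def close(self):
        self.open = False


def run_user(weights, iterations, pacing_delay_s,
             connect=FakeConnection, sleep=time.sleep, rng=None):
    """Run one simulated user's cycle; return (name, response_time_s) pairs."""
    rng = rng or random.Random()
    names = list(weights)
    conn = connect()                               # establish a connection
    samples = []
    try:
        for _ in range(iterations):
            # Weighted random choice of the next transaction type.
            name = rng.choices(names, weights=[weights[n] for n in names])[0]
            start = time.perf_counter()
            conn.execute(name)                     # perform the transaction
            samples.append((name, time.perf_counter() - start))
            sleep(pacing_delay_s)                  # pacing delay
    finally:
        conn.close()                               # close the connection
    return samples                                 # exit
```

Passing `sleep` and `rng` as parameters keeps the sketch easy to test: a no-op sleep and a seeded `random.Random` make a run fast and repeatable.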

Scaling rules

The number of users is determined by the database size, measured in scale-factor units: there is one user for every five scale-factor units. Because of the pacing delay, one user can generate at most one transaction per second, on average.
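The arithmetic behind this rule can be written out as a short sketch. The function names are hypothetical, and rounding down for scale factors that are not a multiple of five is an assumption made here for illustration.

```python
def users_for_scale_factor(scale_factor, units_per_user=5):
    """Number of simulated users: one user per five scale-factor units.

    Floor division is an assumption for scale factors not divisible by five.
    """
    return scale_factor // units_per_user


def max_transactions_per_second(scale_factor, max_tps_per_user=1.0):
    """Upper bound on throughput: each user averages at most one transaction per second."""
    return users_for_scale_factor(scale_factor) * max_tps_per_user


print(users_for_scale_factor(500))       # 100
print(max_transactions_per_second(500))  # 100.0
```

Under these rules, a database with a scale factor of 500 has 100 users and so tops out at about 100 transactions per second. Driving a higher rate needs more users, which in turn means a larger database.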

Measurement duration

A valid benchmark run requires a steady-state measurement duration of at least one hour.

Metrics

The key metrics in the benchmark are throughput and response time.

- Throughput is the essential performance measure in the benchmark. It is reported in transactions per unit of time, counting all transaction types.

- Response time is a measure of performance predictability. The response time constraint varies with the class of service, with higher classes of service having a more stringent response time requirement.
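As a rough sketch, both metrics can be computed from recorded response times. The helper names are hypothetical. The sketch also assumes the response time constraint takes the form "at least a given fraction of transactions finish within a time limit"; the limit and fraction used in the example are illustrative placeholders, not values for any service tier.

```python
def throughput(transaction_count, duration_s, unit_s=1.0):
    """Transactions per unit of time, counting all transaction types."""
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    return transaction_count / duration_s * unit_s


def fraction_within(response_times_s, limit_s):
    """Fraction of transactions whose response time is at or below limit_s."""
    if not response_times_s:
        raise ValueError("no response times recorded")
    within = sum(1 for t in response_times_s if t <= limit_s)
    return within / len(response_times_s)


def meets_response_time(response_times_s, limit_s, required_fraction):
    """True if enough transactions finished within the limit (assumed constraint form)."""
    return fraction_within(response_times_s, limit_s) >= required_fraction


times = [0.1, 0.2, 0.3, 0.4, 2.0]            # illustrative samples, in seconds
print(throughput(7200, 3600))                # 2.0 transactions per second
print(throughput(7200, 3600, unit_s=60))     # 120.0 transactions per minute
print(meets_response_time(times, 0.5, 0.8))  # True: 4 of 5 finished within 0.5 s
```

A stricter class of service would correspond to a smaller `limit_s`, a larger `required_fraction`, or both.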

Reference: Microsoft Documentation