Process and analyze Google Professional Data Engineer GCP

- Raw data usually needs processing and transformation before it can be analyzed

- The processing framework can analyze data directly or prepare the data for downstream analysis

- Steps usually involved

- Processing: Data from source is cleansed, normalized, and processed

- Analysis: Processed data is stored for querying and exploration.

- Understanding: Based on analytical results, data is used to train and test automated machine-learning models.
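The first two steps above can be illustrated with a minimal, self-contained sketch. It uses plain Python on made-up records rather than any GCP service; the field names (`user`, `latency_ms`) and the helper names `process` and `analyze` are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical raw records, as they might arrive from a source system.
RAW = [
    {"user": " Alice ", "latency_ms": "120"},
    {"user": "bob", "latency_ms": "80"},
    {"user": "ALICE", "latency_ms": "100"},
    {"user": "bob", "latency_ms": None},  # incomplete record
]


def process(records):
    """Processing: drop incomplete records and normalize the rest."""
    cleaned = []
    for rec in records:
        if rec.get("latency_ms") is None:
            continue  # cleanse: skip records with missing values
        cleaned.append({
            "user": rec["user"].strip().lower(),   # normalize names
            "latency_ms": int(rec["latency_ms"]),  # normalize types
        })
    return cleaned


def analyze(records):
    """Analysis: aggregate the processed data so it can be queried."""
    by_user = defaultdict(list)
    for rec in records:
        by_user[rec["user"]].append(rec["latency_ms"])
    return {user: mean(values) for user, values in by_user.items()}


summary = analyze(process(RAW))
print(summary)
```

In a real pipeline the aggregated output would typically land in an analytical store, and could later serve as input for training and testing a model.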

Processing large-scale data

- Large-scale processing typically reads data from sources such as Cloud Storage, Bigtable, or Cloud SQL

- When the data is too large to fit on a single machine, frameworks manage distributed compute clusters to process it

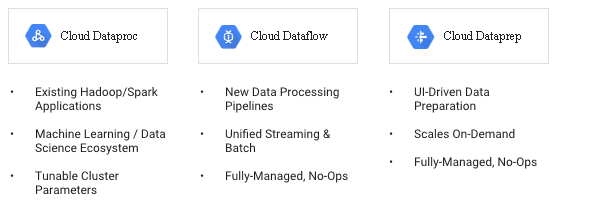

Dataproc

- A managed Apache Hadoop and Apache Spark service

- Hadoop and Spark are popular and supported by Dataproc

- No need to provision or manage infrastructure yourself to run Hadoop or Spark jobs in Dataproc

- Existing Hadoop or Spark deployments can be migrated to Dataproc

- Dataproc automates cluster creation and simplifies configuration and management of the cluster

- Has built-in monitoring and utilization reports

- Use cases.

- Log processing

- Reporting

- On-demand Spark clusters

- Machine learning

- Simplifies operational activities like installing software or resizing a cluster.

- Jobs can read data from and write results to Cloud Storage, Bigtable, BigQuery, or HDFS, so data can be stored and checkpointed outside the cluster
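The on-demand cluster use case can be sketched as a create, submit, delete lifecycle driven by the `gcloud` CLI. This is a minimal sketch that only builds and prints the commands; the cluster name, region, and job file path are hypothetical placeholders, and the helper `dataproc_commands` is not part of any library.

```python
import shlex


def dataproc_commands(cluster, region, job_file, num_workers=2):
    """Return gcloud commands for a create -> submit -> delete lifecycle."""
    create = [
        "gcloud", "dataproc", "clusters", "create", cluster,
        f"--region={region}", f"--num-workers={num_workers}",
    ]
    submit = [
        "gcloud", "dataproc", "jobs", "submit", "pyspark", job_file,
        f"--cluster={cluster}", f"--region={region}",
    ]
    delete = [
        "gcloud", "dataproc", "clusters", "delete", cluster,
        f"--region={region}", "--quiet",
    ]
    return [create, submit, delete]


# Hypothetical names; nothing is executed here, the commands are only printed.
commands = dataproc_commands("demo-cluster", "us-central1", "gs://my-bucket/job.py")
for cmd in commands:
    print(shlex.join(cmd))
```

To actually run the lifecycle, each list could be passed to `subprocess.run(cmd, check=True)` on a machine with an authenticated `gcloud` installation. Because the job keeps its input and output in Cloud Storage, deleting the cluster afterwards does not lose data.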

Dataflow

- A serverless, fully managed batch and stream processing service

- Designed to simplify big data for both streaming and batch workloads.

- No need to specify a cluster size or manage capacity

- Resources are created on demand, autoscaled, and parallelized

- A true zero-ops service

- Straggler workers are handled by constantly monitoring, identifying, and rescheduling work, including splits, to idle workers across the cluster.

- Use cases.

- MapReduce replacement

- User analytics

- Data science

- ETL

- Log processing

- The Dataflow SDK has been released as the open-source project Apache Beam

- Apache Beam pipelines can also run on Apache Spark and Apache Flink.
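As an illustration of the log-processing use case, here is a minimal Apache Beam sketch that counts requests per HTTP status code. The log format, the regular expression, and the function names are assumptions made for this example; the Beam imports are deferred into `run` so the parsing logic can be exercised without Beam installed.

```python
import re

# Matches the HTTP status code in a hypothetical access-log line such as:
# 203.0.113.7 - - [01/Jan/2024:00:00:01 +0000] "GET /index.html HTTP/1.1" 200 512
STATUS_RE = re.compile(r'" (\d{3}) ')


def extract_status(line):
    """Return a list with one (status, 1) pair, or [] if the line is malformed."""
    match = STATUS_RE.search(line)
    return [(match.group(1), 1)] if match else []


def run(input_path, output_prefix, pipeline_args=None):
    """Count requests per HTTP status with an Apache Beam pipeline."""
    # Imported lazily so the parsing logic above can be tested without Beam.
    import apache_beam as beam
    from apache_beam.options.pipeline_options import PipelineOptions

    options = PipelineOptions(pipeline_args)
    with beam.Pipeline(options=options) as pipeline:
        (
            pipeline
            | "Read" >> beam.io.ReadFromText(input_path)
            | "Parse" >> beam.FlatMap(extract_status)
            | "Count" >> beam.CombinePerKey(sum)
            | "Format" >> beam.MapTuple(lambda status, count: f"{status},{count}")
            | "Write" >> beam.io.WriteToText(output_prefix)
        )
```

Without extra arguments the pipeline runs locally. Passing options such as `--runner=DataflowRunner` together with a project, region, and temp location in `pipeline_args` would submit the same code to Dataflow, which is the portability the Beam model is meant to provide.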

Dataprep by Trifacta

- A service for visually exploring, cleaning, and preparing data for analysis.

- Use Dataprep through a browser-based UI, without writing code.

- Automatically deploys and manages the resources required.

- Transform data in CSV, JSON, or relational-table formats.

- It uses Dataflow to scale automatically and can handle terabyte-scale datasets.

- Can export clean data to BigQuery

- Manage user access and data security with Cloud IAM

- Use cases

- Machine Learning: You can clean training data for fine-tuning ML models.

- Analytics: You can transform raw data so that it can be ingested into data warehousing tools such as BigQuery.

Analyzing and querying data

Tools for analyzing and querying processed data are listed below.

BigQuery

- A managed data warehouse service

- Supports ad-hoc SQL queries and complex schemas.

- Use standard SQL queries or BI and visualization tools

- It is a highly scalable, highly distributed, low-cost OLAP analytics data warehouse

- Can achieve a scan rate of over 1 TB/sec.

- To start, create a dataset in a project, load data into a table, and execute a query.

- Load data from Pub/Sub and Dataflow, from Cloud Storage, or from the output of Dataflow or Dataproc jobs. CSV, Avro, and JSON data formats can also be imported

- Supports nested and repeated items in JSON.

- All data is encrypted, both at rest and in transit.

- Covered under Google’s compliance programs

- Access to data is controlled by ACLs.

- Billing is based on two components: queries and storage.

- Queries are billed under one of two pricing models: on-demand or flat-rate.

- On-demand pricing: charged according to the terabytes processed by each query.

- Flat-rate pricing: consistent query capacity with a simpler cost model.

- BigQuery automates infrastructure maintenance windows and data vacuuming.

- The query plan explanation can be examined to improve query design

- Data stored in a columnar format, optimized for large-scale aggregations and data processing.

- Built-in support for time-series partitioning of data.

- Run queries using standard SQL, with support for querying nested and repeated data.

- Built-in functions and operators are also provided.

- Use cases

- User analysis

- Device and operational metrics

- Business intelligence
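The points above about nested and repeated data and on-demand pricing can be sketched together. The table `my-project.sales.orders`, its repeated `items` column, and the price passed to `on_demand_cost` are all hypothetical; the current price per unit of data processed should be taken from the BigQuery pricing page. The client call is deferred into `dry_run_bytes` because it needs the `google-cloud-bigquery` package and credentials.

```python
# Hypothetical table with a repeated RECORD column `items`.
QUERY = """
SELECT
  o.order_id,
  item.sku,
  item.quantity
FROM `my-project.sales.orders` AS o,
  UNNEST(o.items) AS item
WHERE o.order_date >= DATE '2024-01-01'
"""

TIB = 2 ** 40  # bytes in one tebibyte


def on_demand_cost(bytes_processed, price_per_tib):
    """Estimate on-demand query cost from bytes scanned and a price per TiB."""
    return bytes_processed / TIB * price_per_tib


def dry_run_bytes(sql):
    """Ask BigQuery how many bytes a query would scan, without running it."""
    # Imported lazily: requires the google-cloud-bigquery package and credentials.
    from google.cloud import bigquery

    client = bigquery.Client()
    config = bigquery.QueryJobConfig(dry_run=True, use_query_cache=False)
    job = client.query(sql, job_config=config)
    return job.total_bytes_processed


# Illustrative numbers only: 512 GiB scanned at an assumed price of 5.00 per TiB.
print(on_demand_cost(512 * 2 ** 30, price_per_tib=5.0))
```

Because storage is columnar, selecting only the needed columns (as the query does) reduces the bytes scanned, and a dry run is a cheap way to check that before paying for the query.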

Understanding data with machine learning

- Machine learning is now crucial in the analysis phase of the data lifecycle.

- It is used to augment processed results

- It can suggest data-collection optimizations

- It can predict outcomes in data sets.

- Use cases.

- Product recommendations

- Prediction

- Automated assistants

- Sentiment analysis

- ML Options

- Task-specific machine learning APIs

- Custom machine learning

The Vision API

- Used to analyze and understand the content of an image

- Uses pre-trained neural networks.

- Can classify images, detect individual objects and faces, and recognize printed words.

- Can detect inappropriate content.

- API is accessible through REST endpoints.

- Send images directly in the request, or upload them to Cloud Storage and send a link to each image. A request can contain a single image or multiple images

- Each request selects which feature annotations to detect

- Feature detection includes labels, text, faces, landmarks, logos, safe search, and image properties (like dominant colors).

- The response contains metadata about each selected feature-type annotation for each image supplied in the original request.

- Integrate with other GCP services
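The request structure described above can be sketched by building the JSON body for the `images:annotate` REST method. This is a minimal sketch that only constructs the payload; the bucket path, the placeholder image bytes, and the helper `image_request` are assumptions for illustration.

```python
import base64
import json

ANNOTATE_URL = "https://vision.googleapis.com/v1/images:annotate"


def image_request(features, gcs_uri=None, image_bytes=None, max_results=5):
    """Build one entry of an images:annotate request."""
    if gcs_uri is not None:
        image = {"source": {"imageUri": gcs_uri}}  # image stored in Cloud Storage
    else:
        # Inline images are sent as base64-encoded text.
        image = {"content": base64.b64encode(image_bytes).decode("ascii")}
    return {
        "image": image,
        "features": [{"type": f, "maxResults": max_results} for f in features],
    }


# A single request body can carry several images (hypothetical bucket and bytes).
body = {
    "requests": [
        image_request(["LABEL_DETECTION", "SAFE_SEARCH_DETECTION"],
                      gcs_uri="gs://my-bucket/photo.jpg"),
        image_request(["TEXT_DETECTION"], image_bytes=b"\x89PNG..."),
    ]
}
print(json.dumps(body, indent=2))
```

The body would be sent as an authenticated POST to `ANNOTATE_URL`; the response then holds one entry per image, each with the annotations for the feature types that were requested.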

Speech-to-Text

- Used to analyze audio and convert it to text.

- Recognizes more than 80 languages and variants

- Powered by deep-learning neural-network algorithms which constantly evolve and improve.

- Use cases

- Real-time speech-to-text

- Batch analysis

Natural Language API

- Used to analyze and reveal the structure and meaning of text.

- Can extract information about people, places, events, sentiment of the input text, etc.

- Results can be used to filter inappropriate content, classify content by topic, or build relationships among the extracted entities

- Combine it with the Vision API's OCR capabilities or with Speech-to-Text to analyze text extracted from images or audio

- API is available through REST endpoints.

- Send text directly to the service, or upload to Cloud Storage and send text file link in request.
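The two ways of supplying text can be sketched as request bodies for the REST API. This is a minimal sketch that only builds the URL and payload; the sample sentence, the Cloud Storage path used in the follow-up note, and the helper `language_request` are hypothetical.

```python
import json

BASE_URL = "https://language.googleapis.com/v1/documents"


def language_request(method, text=None, gcs_uri=None):
    """Return (url, body) for a Natural Language API call.

    `method` is an API method name such as "analyzeSentiment" or
    "analyzeEntities".
    """
    document = {"type": "PLAIN_TEXT"}
    if gcs_uri is not None:
        document["gcsContentUri"] = gcs_uri  # text file in Cloud Storage
    else:
        document["content"] = text  # text sent inline
    return f"{BASE_URL}:{method}", {"document": document, "encodingType": "UTF8"}


url, body = language_request("analyzeSentiment", text="The new release is great.")
print(url)
print(json.dumps(body))
```

Calling `language_request("analyzeEntities", gcs_uri="gs://my-bucket/review.txt")` would produce the Cloud Storage variant of the same request.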

Cloud Translation

- Used to translate between more than 90 different languages.

- It automatically detects the input language, with high accuracy.

- Provides real-time translation for web and mobile apps

- Supports batched requests for analytical workloads.

- Available through REST endpoints.

- Integrate the API with GCP services
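Batched requests and automatic language detection can be sketched with the request body of the v2 REST endpoint. This is a minimal sketch that only builds the payload; the sample strings and the helper `translate_request` are made up for illustration.

```python
import json

TRANSLATE_URL = "https://translation.googleapis.com/language/translate/v2"


def translate_request(texts, target, source=None):
    """Build a Translation API (v2) request body for one or more strings."""
    body = {"q": list(texts), "target": target, "format": "text"}
    if source is not None:
        body["source"] = source  # omit to let the service detect the language
    return body


# Batched request: several strings translated in one call.
body = translate_request(["Bonjour", "Guten Morgen"], target="en")
print(json.dumps(body, ensure_ascii=False))
```

Leaving out `source` relies on the service's language detection, which suits mixed-language input; setting it explicitly skips detection when the input language is already known.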

Video Intelligence API: Video search and discovery

- Used to search, discover, and extract metadata from videos.

- Has an easy-to-use REST API

- Can detect entities (nouns) in video content

- Can also locate where those entities occur in scenes within the video.

- Can annotate videos with frame-level and video-level metadata.

- Supports common video formats, like MOV, MPEG4, MP4, and AVI.

- Create a JSON request file with the location of the video and annotation types to perform, and submit to the API endpoint.

- Use cases

- Extract insights from videos

- Video catalog search
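The JSON request file mentioned above can be sketched as follows. The Cloud Storage path is a hypothetical placeholder, the chosen annotation types are just examples, and the helper `write_request_file` is not part of any library; the file is written to a temporary directory.

```python
import json
import os
import tempfile

ANNOTATE_URL = "https://videointelligence.googleapis.com/v1/videos:annotate"


def write_request_file(path, input_uri, features):
    """Write a videos:annotate request naming the video and annotation types."""
    request = {"inputUri": input_uri, "features": list(features)}
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(request, handle, indent=2)
    return request


# Hypothetical Cloud Storage location; the file is written to a temp directory.
request_path = os.path.join(tempfile.mkdtemp(), "request.json")
request = write_request_file(
    request_path,
    "gs://my-bucket/clip.mp4",
    ["LABEL_DETECTION", "SHOT_CHANGE_DETECTION"],
)
print(request_path)
```

The file would then be sent as an authenticated POST to `ANNOTATE_URL`. Annotation runs as a long-running operation, so the initial response identifies an operation to poll rather than returning the annotations directly.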

AI Platform

- A managed machine learning platform

- Used to run custom machine learning models at scale.

- Create models using the TensorFlow framework, and use AI Platform to manage preprocessing, training, and prediction.

- It is integrated with Dataflow for data pre-processing

- Can access data stored in Cloud Storage or BigQuery.

- Also works with Cloud Load Balancing

- Develop and test TensorFlow models using Datalab and Jupyter notebooks

- Models are completely portable.

- AI Platform workflow

- Preprocessing: Prepares the training, evaluation, and test data

- Graph building: Converts the supplied TensorFlow model into an AI Platform model with operations for training, evaluation, and prediction.

- Training: Continuously iterates and evaluates the model according to submitted parameters.

- Prediction: Uses the model to perform computations in batches or on demand
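The preprocessing, training, and prediction stages can be illustrated with a plain-Python stand-in. This is not AI Platform or TensorFlow code: it fits a one-variable linear model by gradient descent on synthetic data, the graph-building stage has no counterpart here, and every function name and parameter value is an assumption chosen for the example.

```python
import random


def split(rows, seed=0):
    """Preprocessing: shuffle and split rows into train/eval/test sets (60/20/20)."""
    rows = rows[:]
    random.Random(seed).shuffle(rows)
    a, b = int(len(rows) * 0.6), int(len(rows) * 0.8)
    return rows[:a], rows[a:b], rows[b:]


def predict(model, x):
    """Prediction: apply the model y = w * x + b to one input."""
    w, b = model
    return w * x + b


def mse(model, rows):
    """Evaluation metric: mean squared error over (x, y) rows."""
    return sum((predict(model, x) - y) ** 2 for x, y in rows) / len(rows)


def train(train_rows, eval_rows, steps=2000, learning_rate=0.5):
    """Training: iterate gradient descent, evaluating periodically."""
    w, b = 0.0, 0.0
    n = len(train_rows)
    for step in range(steps):
        grad_w = sum((predict((w, b), x) - y) * x for x, y in train_rows) / n
        grad_b = sum((predict((w, b), x) - y) for x, y in train_rows) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        if step % 500 == 0:
            print(f"step {step}: eval mse = {mse((w, b), eval_rows):.6f}")
    return w, b


# Synthetic data following y = 2x + 1, for illustration only.
data = [(i / 20, 2 * (i / 20) + 1) for i in range(20)]
train_rows, eval_rows, test_rows = split(data)
model = train(train_rows, eval_rows)
print("test mse:", mse(model, test_rows))
print("on-demand prediction:", predict(model, 0.5))
print("batch prediction:", [predict(model, x) for x, _ in test_rows])
```

Keeping the evaluation and test sets separate from the training set mirrors the workflow above: evaluation data guides the training iterations, while test data is only used once the model is final.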

General-purpose machine learning

- Beyond AI Platform, other high-scale ML tools such as Mahout and MLlib can be deployed on GCP

- These tools offer ML algorithms for clustering, classification, collaborative filtering, and more.

- Use Dataproc to deploy managed Hadoop and Spark clusters, then run and scale ML workloads on them
