Enabling containers to use Azure Virtual Network capabilities

In this tutorial, we will learn how containers can use Azure Virtual Network capabilities.

The Azure Virtual Network container network interface (CNI) plug-in is installed in an Azure virtual machine. The plug-in assigns IP addresses from a virtual network to containers brought up in the virtual machine, attaching them to the virtual network and connecting them directly to other containers and virtual network resources. The plug-in doesn’t rely on overlay networks or routes for connectivity, and it provides the same network performance as virtual machines.

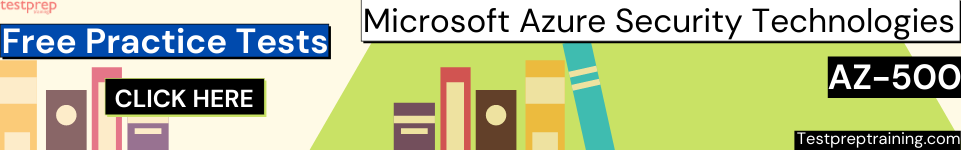

At a high level, the plug-in provides the following capabilities:

- Firstly, every Pod, which can consist of one or more containers, is assigned an IP address from the virtual network.

- Secondly, Pods can connect to peered virtual networks, and to on-premises networks over ExpressRoute or a site-to-site VPN.

- Thirdly, Pods can access services such as Azure Storage and Azure SQL Database that are protected by virtual network service endpoints.

- Fourthly, network security groups and routes can be applied directly to Pods.

- Next, Pods can be placed directly behind an Azure internal or public Load Balancer, just like virtual machines.

- Lastly, the plug-in works seamlessly with Kubernetes resources such as Services, Ingress controllers, and Kube DNS.
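Because every Pod receives its own address from the virtual network, the subnet has to hold one address per node plus one per Pod the node may run. The sketch below does that arithmetic. It assumes each Pod slot is pre-allocated as a secondary IP on the node’s network interface (as the section on connecting Pods describes) and that Azure’s five reserved addresses per subnet are the only overhead; the function names are hypothetical.

```python
import ipaddress

# Azure reserves five addresses in every subnet (network address, default
# gateway, two for Azure DNS, and broadcast); they can't go to VMs or Pods.
AZURE_RESERVED_PER_SUBNET = 5


def usable_addresses(subnet_cidr: str) -> int:
    """Addresses in the subnet that Azure can hand out to network interfaces."""
    subnet = ipaddress.ip_network(subnet_cidr)
    return subnet.num_addresses - AZURE_RESERVED_PER_SUBNET


def addresses_needed(node_count: int, max_pods_per_node: int) -> int:
    """One primary address per node plus one secondary address per Pod slot.

    Assumption: every Pod slot is reserved up front as a secondary IP.
    """
    return node_count * (1 + max_pods_per_node)


def subnet_fits(subnet_cidr: str, node_count: int, max_pods_per_node: int) -> bool:
    """True if the subnet has enough usable addresses for the planned cluster."""
    return addresses_needed(node_count, max_pods_per_node) <= usable_addresses(subnet_cidr)


# 3 nodes running up to 30 Pods each need 3 * 31 = 93 addresses.
print(usable_addresses("10.240.0.0/24"))                                  # 251
print(subnet_fits("10.240.0.0/24", node_count=3, max_pods_per_node=30))  # True
print(subnet_fits("10.240.0.0/26", node_count=3, max_pods_per_node=30))  # False
```

The /26 fails because it offers only 64 - 5 = 59 usable addresses, which is why subnets for this plug-in are usually sized well above the node count alone.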

The picture below explains how the plug-in provides Azure Virtual Network capabilities to Pods:

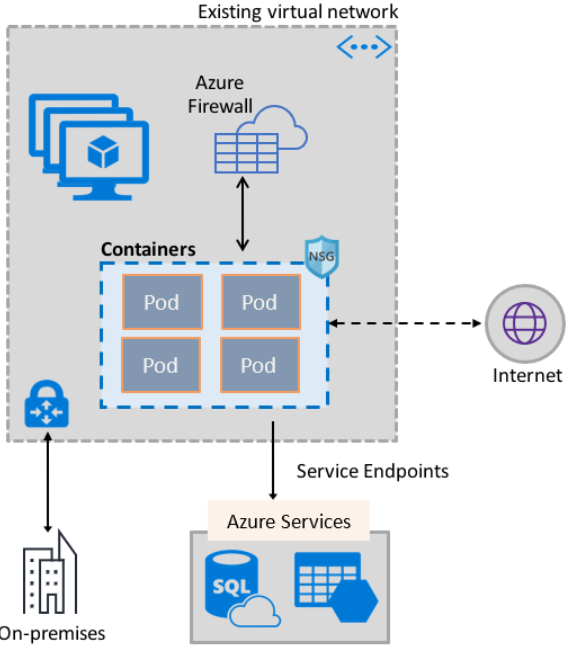

Connecting Pods to a virtual network

Pods are brought up in a virtual machine that is part of a virtual network. A pool of IP addresses for the Pods is configured as secondary addresses on the virtual machine’s network interface. Azure CNI sets up basic network connectivity for Pods and manages the use of the IP addresses in the pool. When a Pod comes up in the virtual machine, Azure CNI assigns an available IP address from the pool and connects the Pod to a software bridge in the virtual machine. When the Pod terminates, its IP address is returned to the pool.
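The pool bookkeeping described above can be sketched as a small allocator. This is a hypothetical, simplified model (the class and method names are illustrative, not Azure CNI’s own code): it only tracks which secondary addresses are free and which Pod holds which, and leaves out the software-bridge wiring.

```python
import ipaddress


class SecondaryIpPool:
    """Hypothetical model of the secondary IP addresses on a VM's network
    interface, handed out to Pods as they start and reclaimed as they stop."""

    def __init__(self, secondary_ips):
        # Addresses not currently assigned to any Pod.
        self._free = [ipaddress.ip_address(ip) for ip in secondary_ips]
        # Pod name -> assigned address.
        self._assigned = {}

    def allocate(self, pod_name):
        """Assign an available address when a Pod comes up."""
        if pod_name in self._assigned:
            raise ValueError(f"{pod_name} already has an address")
        if not self._free:
            raise RuntimeError("IP pool exhausted: no secondary addresses left")
        ip = self._free.pop(0)
        self._assigned[pod_name] = ip
        return ip

    def release(self, pod_name):
        """Return the Pod's address to the pool when the Pod terminates."""
        ip = self._assigned.pop(pod_name)
        self._free.append(ip)
        return ip

    @property
    def free_count(self):
        return len(self._free)


pool = SecondaryIpPool(["10.240.0.5", "10.240.0.6", "10.240.0.7"])
web = pool.allocate("web-1")   # 10.240.0.5
api = pool.allocate("api-1")   # 10.240.0.6
pool.release("web-1")          # 10.240.0.5 goes back to the pool
print(pool.free_count)         # 2
```

Raising an error on exhaustion mirrors the practical limit: a node can run only as many Pods as it has secondary addresses.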

The picture below explains how Pods connect to a virtual network:

Internet access

To enable Pods to access the internet, the plug-in configures iptables rules that apply network address translation (NAT) to internet-bound traffic from Pods. The source IP address of each such packet is translated to the primary IP address on the virtual machine’s network interface. Windows virtual machines automatically source NAT (SNAT) traffic destined to IP addresses outside the subnet the virtual machine is in.
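The translation decision can be sketched in a few lines. This models only the outcome, not the iptables rules themselves, and the function name is hypothetical. It assumes "internet-bound" means any destination outside a set of local prefixes: on Linux, the virtual network’s address space; on Windows, per the text above, the VM’s own subnet.

```python
import ipaddress


def source_after_nat(pod_ip, dest_ip, node_primary_ip, local_ranges):
    """Return the source address a Pod's packet carries after leaving the VM.

    local_ranges: prefixes reached without NAT (assumed: the VNet address
    space on Linux, or the VM's subnet on Windows).
    """
    dest = ipaddress.ip_address(dest_ip)
    if any(dest in ipaddress.ip_network(r) for r in local_ranges):
        # In-network traffic keeps the Pod's own virtual network IP.
        return ipaddress.ip_address(pod_ip)
    # Everything else is source-NATed to the NIC's primary IP address.
    return ipaddress.ip_address(node_primary_ip)


vnet = ["10.240.0.0/16"]
print(source_after_nat("10.240.0.5", "10.240.1.20", "10.240.0.4", vnet))   # 10.240.0.5
print(source_after_nat("10.240.0.5", "203.0.113.10", "10.240.0.4", vnet))  # 10.240.0.4
# Windows-style: only the VM's /24 subnet counts as local.
print(source_after_nat("10.240.0.5", "10.240.1.20", "10.240.0.4", ["10.240.0.0/24"]))  # 10.240.0.4
```

Keeping in-network traffic un-NATed is what lets other virtual network resources see, and filter on, each Pod’s real address.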

Using the plug-in

The plug-in can be used in several ways to provide basic virtual network attachment for Pods or Docker containers:

- Firstly, in Azure Kubernetes Service (AKS). The plug-in is integrated into AKS and can be used by choosing the Advanced Networking option.

- Secondly, with AKS-Engine, a tool that generates an Azure Resource Manager template for deploying a Kubernetes cluster in Azure.

- Thirdly, in your own Kubernetes cluster in Azure. The plug-in can provide basic networking for Pods in Kubernetes clusters that you deploy yourself, without relying on AKS or tools like AKS-Engine.

- Lastly, for virtual network attachment of Docker containers in Azure. The plug-in is useful when you don’t want to create a Kubernetes cluster but would like to create Docker containers with virtual network attachments in virtual machines.
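When you deploy the plug-in yourself (the last two options), the container runtime needs a CNI network configuration that points at it. The sketch below builds one as a Python dict. The field values are modeled on the sample in Microsoft’s deployment documentation (`azure-vnet` plug-in in bridge mode with `azure-vnet-ipam`, chained with the standard `portmap` plug-in); treat them as an assumption and check them against the plug-in release you install. The helper name and its defaults are hypothetical.

```python
import json


def azure_cni_conflist(network_name="azure", bridge="azure0"):
    """Build a CNI network configuration list for the Azure VNet plug-in.

    Assumption: field names/values follow Microsoft's published sample;
    verify them against your plug-in version.
    """
    return {
        "cniVersion": "0.3.0",
        "name": network_name,                    # any unique network name
        "plugins": [
            {
                "type": "azure-vnet",            # the Azure VNet CNI plug-in
                "mode": "bridge",                # Pods attach via a software bridge
                "bridge": bridge,
                "ipam": {"type": "azure-vnet-ipam"},  # allocates from the IP pool
            },
            {
                "type": "portmap",               # standard CNI port-mapping plug-in
                "capabilities": {"portMappings": True},
                "snat": True,
            },
        ],
    }


# CNI runtimes conventionally read such files from /etc/cni/net.d/.
print(json.dumps(azure_cni_conflist(), indent=2))
```

Generating the file from code rather than hand-editing JSON makes it easy to template the network and bridge names across many VMs.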

Reference: Microsoft Documentation