Explain the Durability and Availability Characteristics for the Collection Approach

AWS Big Data Exam updated to AWS Certified Data Analytics Specialty.

Amazon Kinesis Data Streams manages the infrastructure, storage, networking, and configuration needed to stream your data at the level of your data throughput. You do not have to worry about provisioning, deployment, or ongoing maintenance of hardware, software, or other services for your data streams. In addition, Amazon Kinesis Data Streams synchronously replicates data across three Availability Zones, providing high availability and data durability.

The main practical concern is how Kinesis performs in production. When evaluating its performance, keep in mind that durability is central to the design. When you put a record into a stream, that record is synchronously replicated to three different Availability Zones in the region, which helps guarantee that you can read it back out on the other side. This level of reliability has a performance cost, so comparing Kinesis to a single-node system such as Redis would not be meaningful.

Amazon ensures the availability of a Kinesis stream by writing the stream data to three Availability Zones in a region. So, if one Availability Zone goes down, the data is still reachable from the other Availability Zones in that region. But if the Kinesis service goes down in the whole region, the stream becomes unavailable. To guard against major disasters like this, the data sent to a stream can be replicated to another stream in a different region. Unfortunately, Amazon does not provide a configuration option for multi-region replication of a stream, so you have to build the replication yourself.

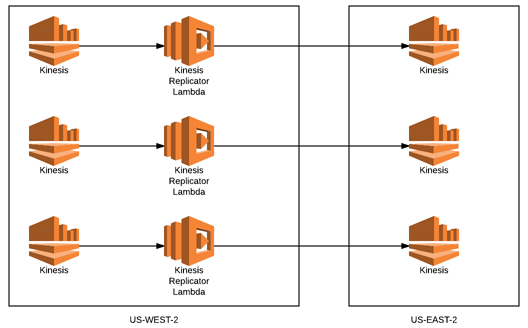

Multi-region replication of a stream can be done in the following ways:

- Using a KCL application as the replicator: In this solution, an application built on the Kinesis Client Library (KCL) is implemented and deployed to an EC2 instance. The application reads recent records from the source stream, filters the records that need to be replicated, and writes them to the stream in the backup region.

- Using a Lambda function as the replicator: In this solution, a Lambda function reads the data from the stream and writes it to the backup stream in another region. To get the data from the stream, a Kinesis trigger is defined with a given batch size. The Lambda service polls the stream about once per second and, when new data is available, fetches the new records and invokes the function with them. If fewer new records than the batch size are available, the function receives all of them; otherwise, it receives at most the batch size. After the records are received and filtered, they are written to the stream in the backup region. Note that because Amazon Kinesis is a stream-based trigger, Lambda by default runs one invocation per shard at a time, so the number of function invocations running in parallel equals the number of shards in the stream.
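The Lambda-based replicator described above could be sketched roughly as follows. This is a minimal illustration, not a production implementation: the stream name, the region, the `should_replicate` filter, and the helper names are all hypothetical, and the backup-region client is passed in so the logic can be exercised without AWS access. It relies on the Kinesis event shape that Lambda delivers (base64-encoded `data` plus `partitionKey`) and on the `put_records` limit of 500 records per call.

```python
import base64
import os

# Hypothetical defaults; in practice these would come from the function's configuration.
BACKUP_STREAM = os.environ.get("BACKUP_STREAM", "backup-stream")
BACKUP_REGION = os.environ.get("BACKUP_REGION", "us-west-2")
MAX_PUT_RECORDS = 500  # PutRecords accepts at most 500 records per call


def should_replicate(payload: bytes) -> bool:
    """Placeholder filter (an assumption): replicate every non-empty payload."""
    return len(payload) > 0


def to_put_entries(event: dict) -> list:
    """Decode the records in a Kinesis-triggered event into PutRecords entries."""
    entries = []
    for record in event.get("Records", []):
        kinesis = record["kinesis"]
        payload = base64.b64decode(kinesis["data"])
        if should_replicate(payload):
            # Reusing the partition key keeps related records together in the backup stream.
            entries.append({"Data": payload, "PartitionKey": kinesis["partitionKey"]})
    return entries


def replicate(entries: list, client, stream_name: str = BACKUP_STREAM) -> int:
    """Write entries to the backup stream in chunks; return the number of failed records."""
    failed = 0
    for start in range(0, len(entries), MAX_PUT_RECORDS):
        chunk = entries[start:start + MAX_PUT_RECORDS]
        response = client.put_records(StreamName=stream_name, Records=chunk)
        failed += response.get("FailedRecordCount", 0)
    return failed


def handler(event, context):
    """Lambda entry point: forward the incoming batch to the backup region."""
    import boto3  # imported here so the helpers above can run without boto3 installed

    client = boto3.client("kinesis", region_name=BACKUP_REGION)
    entries = to_put_entries(event)
    failed = replicate(entries, client)
    if failed:
        # Raising makes Lambda retry the batch, which may write duplicates downstream.
        raise RuntimeError(f"{failed} records were not replicated")
    return {"replicated": len(entries)}
```

Because a failed batch is retried as a whole in this sketch, consumers of the backup stream should tolerate duplicate records. A KCL-based replicator would follow the same read, filter, and write pattern inside its record processor.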

Monitoring the replication is an important part of the implementation. The delay introduced by replication must be observed at the replicator. This delay must be kept small, because any records not yet replicated when a disaster strikes are lost. The replication process as a whole also needs to be monitored. To do this, a test stream can be used: a Lambda function writes artificial records to this stream in the primary region and checks whether these records can be read from the backup region. This verifies that the whole Kinesis replication process works as expected.
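The two monitoring ideas above, measuring delay at the replicator and sending artificial test records, might look like the following sketch. The function names and the canary payload format are assumptions made for illustration. The lag calculation uses the `approximateArrivalTimestamp` field (epoch seconds) that Lambda includes with each Kinesis record, and the canary check expects payloads that have already been read from the backup stream by some other means.

```python
import json
import time
import uuid


def max_replication_lag_seconds(event: dict, now: float = None) -> float:
    """Age of the oldest record in the batch, measured when the replicator sees it."""
    now = time.time() if now is None else now
    arrivals = [
        record["kinesis"]["approximateArrivalTimestamp"]
        for record in event.get("Records", [])
    ]
    return max((now - arrived for arrived in arrivals), default=0.0)


def make_canary(now: float = None) -> bytes:
    """Build an artificial test record (hypothetical format) for the primary test stream."""
    now = time.time() if now is None else now
    return json.dumps({"canary_id": str(uuid.uuid4()), "sent_at": now}).encode()


def canary_arrived(canary: bytes, backup_payloads) -> bool:
    """Check whether the canary shows up among payloads read from the backup stream."""
    wanted = json.loads(canary)["canary_id"]
    for payload in backup_payloads:
        try:
            body = json.loads(payload)
        except ValueError:
            continue  # not JSON, so it cannot be one of our canaries
        if isinstance(body, dict) and body.get("canary_id") == wanted:
            return True
    return False
```

The lag value could be published as a custom metric with an alarm threshold, and a canary that does not arrive within the expected delay would indicate that replication is broken end to end.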

All in all, multi-region replication of Kinesis streams reduces data loss during region-wide disasters and increases your availability.
