Determine How to Design and Architect the Analytical Solution

Analyze the Business Problem

Look at the business problem objectively

- Identify whether it is a big data problem or not.

- Sheer volume or cost may not be the deciding factor.

- Multiple criteria, such as velocity, variety, challenges with the current system, and the time taken for processing, should be considered as well.

Some Common Use Cases:

- Data Archival/Data Offload – Offloading data that would otherwise be archived to tapes, so that huge amounts of data spanning years can be kept as active data at a very low cost.

- Process Offload – Offload jobs that consume expensive MIPS cycles or extensive CPU cycles on the current systems.

- Data Lake Implementation – Helps store and process massive amounts of data.

- Unstructured Data Processing – Provides the capability to store and process any amount of unstructured data natively.

- Data Warehouse Modernization – Integrate the capabilities of Big Data and data warehouse to increase operational efficiency.

Capacity Planning

Capacity planning plays a pivotal role in hardware and infrastructure sizing. Important factors to be considered are:

- Data volume for one-time historical load

- Daily data ingestion volume

- Retention period of data

- HDFS replication factor based on criticality of data

- Time period for which the cluster is sized (typically 6 months to 1 year), after which the cluster is scaled horizontally based on requirements

- Multi-datacenter deployment
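To make these factors concrete, here is a minimal Python sketch of a storage and node-count estimate. The formula (historical load plus retained daily ingestion, multiplied by the replication factor and the number of datacenters, plus headroom for temporary data) is a common rule of thumb, not a prescribed method. The function name is hypothetical, and the 25% temporary-space fraction, the 40 TB of usable disk per node and the assumption of one full copy per datacenter are illustrative values to replace with your own. The default replication factor of 3 matches the HDFS default.

```python
import math

def estimate_cluster_size(historical_tb, daily_ingest_tb, retention_days,
                          sizing_period_days, replication_factor=3,
                          datacenters=1, temp_space_fraction=0.25,
                          usable_tb_per_node=40.0):
    """Return (raw storage in TB, total data nodes) for one sizing period.

    temp_space_fraction and usable_tb_per_node are illustrative assumptions.
    """
    # Only the last `retention_days` of daily loads are still on the cluster.
    retained_days = min(retention_days, sizing_period_days)
    logical_tb = historical_tb + daily_ingest_tb * retained_days
    # HDFS keeps `replication_factor` copies; a full copy per datacenter is assumed.
    replicated_tb = logical_tb * replication_factor * datacenters
    # Keep part of each disk free for intermediate and temporary data.
    raw_tb = replicated_tb / (1 - temp_space_fraction)
    # Node count across all datacenters, rounded up to whole machines.
    nodes = math.ceil(raw_tb / usable_tb_per_node)
    return raw_tb, nodes

raw_tb, nodes = estimate_cluster_size(historical_tb=100, daily_ingest_tb=1,
                                      retention_days=365, sizing_period_days=365)
print(f"{raw_tb:.0f} TB raw, {nodes} nodes")  # → 1860 TB raw, 47 nodes
```

Compression is left out for simplicity; if data is stored compressed, `logical_tb` would be divided by the expected compression ratio.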

Hindsight, Insight, or Foresight

Hindsight, insight, and foresight are three questions that come to mind when dealing with data: to know what happened, to understand why it happened, and to predict what will happen. Hindsight is possible with aggregations and applied statistics. You can aggregate data by different groups and compare those results using statistical techniques, such as confidence intervals and statistical tests. A key component is data visualization, which shows related data in context [2].
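As a small illustration of hindsight, the Python sketch below aggregates values by group and attaches a confidence interval to each group mean. It uses a normal approximation for the interval (a Student's t critical value would be more appropriate for small samples), and the function name and sales figures are made up for illustration.

```python
import math
import statistics
from collections import defaultdict

def group_summary(records, confidence=0.95):
    """Aggregate (group, value) pairs into (mean, lower, upper) per group.

    The interval uses a normal approximation, not a t distribution.
    """
    groups = defaultdict(list)
    for group, value in records:
        groups[group].append(value)

    # Two-sided critical value, about 1.96 for 95% confidence.
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    summary = {}
    for group, values in groups.items():
        mean = statistics.fmean(values)
        if len(values) > 1:
            half_width = z * statistics.stdev(values) / math.sqrt(len(values))
        else:
            half_width = math.inf  # one observation gives no spread estimate
        summary[group] = (mean, mean - half_width, mean + half_width)
    return summary

# Illustrative data only.
sales = [("north", 120), ("north", 135), ("north", 128),
         ("south", 90), ("south", 101), ("south", 95)]
summary = group_summary(sales)
for region, (mean, low, high) in summary.items():
    print(f"{region}: mean={mean:.1f}, 95% CI=({low:.1f}, {high:.1f})")
```

Non-overlapping intervals, as here, suggest a real difference between the groups; a formal statistical test would confirm it.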

Insight and foresight require machine learning and data mining. This includes finding patterns, modeling current behavior, predicting future outcomes, and detecting anomalies. Refer to data science and machine learning tools (e.g., R, Apache Spark MLlib, WSO2 Machine Learner, GraphLab) for a deeper understanding.
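Of the tasks listed, anomaly detection is the easiest to sketch. The example below uses a plain z-score rule, a basic statistical technique that is far simpler than the machine learning tools named above; the function name and the default threshold of 3 standard deviations are illustrative choices.

```python
import statistics

def zscore_anomalies(values, threshold=3.0):
    """Return indices of values more than `threshold` standard deviations from the mean."""
    mean = statistics.fmean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        return []  # all values identical: nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mean) / stdev > threshold]

readings = [10.0] * 20 + [100.0]
print(zscore_anomalies(readings))  # → [20]
```

Because the outlier itself inflates the mean and standard deviation, this rule can miss anomalies in small or heavily contaminated samples; median-based variants are a common, more robust alternative.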

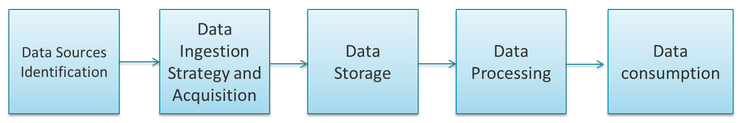

Steps in designing and architecting an analytical solution.

Source Profiling

- Most important step in deciding the architecture.

- It involves:

  - Identifying the different source systems

  - Categorizing them based on their nature and type

Important considerations

- Identify the internal and external source systems

- Make a high-level assumption about the amount of data ingested from each source

- Identify the mechanism used to get data – push or pull

- Determine the type of data source – database, file, web service, stream, etc.

- Determine the type of data – structured, semi-structured or unstructured
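One way to record the outcome of source profiling is a small catalogue with one entry per source that captures the considerations above. The Python sketch below uses hypothetical source names, enum values and volume estimates; grouping by an attribute gives a quick view of, for example, how many structured versus semi-structured sources must be handled.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum

class Origin(Enum):
    INTERNAL = "internal"
    EXTERNAL = "external"

class Mechanism(Enum):
    PUSH = "push"
    PULL = "pull"

class SourceType(Enum):
    DATABASE = "database"
    FILE = "file"
    WEB_SERVICE = "web service"
    STREAM = "stream"

class DataType(Enum):
    STRUCTURED = "structured"
    SEMI_STRUCTURED = "semi-structured"
    UNSTRUCTURED = "unstructured"

@dataclass(frozen=True)
class SourceProfile:
    name: str
    origin: Origin
    mechanism: Mechanism
    source_type: SourceType
    data_type: DataType
    est_daily_gb: float  # high-level volume assumption, not a measurement

def group_by(profiles, attribute):
    """Group source names by one profile attribute, e.g. "data_type"."""
    groups = defaultdict(list)
    for profile in profiles:
        groups[getattr(profile, attribute)].append(profile.name)
    return dict(groups)

# Hypothetical sources for illustration.
sources = [
    SourceProfile("crm_db", Origin.INTERNAL, Mechanism.PULL,
                  SourceType.DATABASE, DataType.STRUCTURED, 50.0),
    SourceProfile("clickstream", Origin.INTERNAL, Mechanism.PUSH,
                  SourceType.STREAM, DataType.SEMI_STRUCTURED, 400.0),
    SourceProfile("partner_feed", Origin.EXTERNAL, Mechanism.PULL,
                  SourceType.FILE, DataType.STRUCTURED, 5.0),
]
for data_type, names in group_by(sources, "data_type").items():
    print(data_type.value, names)
total_daily_gb = sum(s.est_daily_gb for s in sources)
print(f"estimated daily ingestion: {total_daily_gb} GB")
```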

Ingestion Strategy and Acquisition

Important considerations

- Determine the frequency at which data would be ingested from each source

- Is there a need to change the semantics of the data (append, replace, etc.)?

- Is there any data validation or transformation required before ingestion (pre-processing)?

- Segregate the data sources based on mode of ingestion – batch or real-time
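The append and replace semantics and the pre-ingestion validation step can be illustrated with a minimal in-memory sketch. Here a Python list stands in for the target table, and the `validate` rule (a non-null `id` and a non-negative numeric `amount`) is a hypothetical example of pre-processing. Rejected records are returned rather than silently dropped so they can be logged or quarantined.

```python
from enum import Enum

class WriteMode(Enum):
    APPEND = "append"    # add new records to what is already stored
    REPLACE = "replace"  # overwrite the stored data with the new batch

def validate(record):
    """Hypothetical pre-processing rule: non-null id, non-negative numeric amount."""
    amount = record.get("amount")
    return (record.get("id") is not None
            and isinstance(amount, (int, float))
            and amount >= 0)

def ingest(target, batch, mode):
    """Validate a batch, apply it to `target` with the given semantics, return rejects."""
    valid = [r for r in batch if validate(r)]
    rejected = [r for r in batch if not validate(r)]
    if mode is WriteMode.REPLACE:
        target.clear()
    target.extend(valid)
    return rejected

table = [{"id": 1, "amount": 10}]
rejected = ingest(table, [{"id": 2, "amount": 5}, {"id": None, "amount": 3}],
                  WriteMode.APPEND)
print(len(table), len(rejected))  # → 2 1
ingest(table, [{"id": 3, "amount": 7}], WriteMode.REPLACE)
print([r["id"] for r in table])  # → [3]
```

Note that this version of REPLACE clears the target even when every record in the new batch fails validation; a production pipeline might refuse to replace with an empty batch.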

Storage

Storage requirements

- Able to store large amounts of data

- Able to store any type of data

- Able to scale on a need basis

- Able to provide the required number of IOPS (input/output operations per second)

Two types of analytical requirements

- Synchronous – Data is analyzed in real time or near real time, so the storage should be optimized for low latency.

- Asynchronous – Data is captured, recorded and analyzed in batch.

Important considerations

- Type of data (Historical or Incremental)

- Format of data (structured, semi-structured, or unstructured)

- Compression requirements

- Frequency of incoming data

- Query pattern on the data

- Consumers of the data
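Compression requirements usually involve a trade-off between storage saved and CPU spent. The sketch below measures that trade-off on sample data with Python's standard `zlib` module, used here only as a stand-in for codecs common on Hadoop clusters such as gzip or Snappy; the JSON sample is synthetic and the function name is hypothetical.

```python
import json
import zlib

def compression_report(payload, levels=(1, 6, 9)):
    """Return {level: (compressed_bytes, ratio)} for each zlib compression level."""
    report = {}
    for level in levels:
        compressed = zlib.compress(payload, level)
        report[level] = (len(compressed), len(payload) / len(compressed))
    return report

# Synthetic semi-structured sample: repetitive JSON log lines.
sample = "\n".join(
    json.dumps({"event": "page_view", "user_id": i % 100, "page": "/home"})
    for i in range(10_000)
).encode("utf-8")

report = compression_report(sample)
for level, (size, ratio) in report.items():
    print(f"level {level}: {size} bytes, ratio {ratio:.1f}x")
```

Higher levels typically give smaller output at a higher CPU cost. On Hadoop, splittability also matters: gzip files cannot be split across parallel tasks, whereas bzip2 files can.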

Processing

- Earlier, data was held in memory (RAM), but because of the volume it is now stored across multiple disks.

- Processing is now moved closer to the data to reduce network I/O.

- The processing methodology is driven by business requirements.

- Based on the SLA, it can be categorized into:

  - Batch

  - Real-time

  - Hybrid
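Moving processing closer to the data means scheduling each task on a node that already holds a replica of its input block, and only falling back to a remote node when no local slot is free. The greedy function below is a hypothetical, simplified illustration of that idea; real schedulers such as YARN and Spark also consider rack locality and may briefly wait for a local slot to free up.

```python
def schedule_tasks(block_replicas, free_slots):
    """Assign one task per block, preferring nodes that store a replica.

    block_replicas: {block_id: [node, ...]}  where each block's replicas live
    free_slots:     {node: int}              remaining task slots per node
    Returns {block_id: (node, is_local)}.
    """
    slots = dict(free_slots)  # work on a copy
    assignment = {}
    for block, replicas in block_replicas.items():
        local = next((n for n in replicas if slots.get(n, 0) > 0), None)
        if local is not None:
            node, is_local = local, True
        else:
            # No local slot: any node with capacity, but input crosses the network.
            candidates = [n for n, free in slots.items() if free > 0]
            if not candidates:
                raise RuntimeError(f"no free slots for block {block}")
            node, is_local = candidates[0], False
        slots[node] -= 1
        assignment[block] = (node, is_local)
    return assignment

# Hypothetical cluster layout.
replicas = {"b1": ["node1", "node2"], "b2": ["node2", "node3"], "b3": ["node1", "node3"]}
slots = {"node1": 1, "node2": 1, "node3": 0, "node4": 1}
result = schedule_tasks(replicas, slots)
print(result)  # → {'b1': ('node1', True), 'b2': ('node2', True), 'b3': ('node4', False)}
```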

Batch Processing –

- Collecting the input for a specified interval of time

- Running transformations on it in a scheduled way

- Historical data load is a typical batch operation

- Technologies used: MapReduce, Hive, Pig
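The map, shuffle and reduce steps of a batch job can be simulated in a single Python process. The sketch below counts words in one collected batch of log lines; it illustrates the MapReduce model rather than the Hadoop API, and the function names and log lines are hypothetical. Hive and Pig can compile queries and scripts into jobs of this shape.

```python
from collections import defaultdict
from itertools import chain

def map_phase(line):
    # Emit (key, 1) for every word, like a MapReduce mapper.
    return [(word.lower(), 1) for word in line.split()]

def shuffle(pairs):
    # Group values by key, as the framework does between map and reduce.
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped

def reduce_phase(key, values):
    # Combine all values for one key.
    return key, sum(values)

def run_batch(lines):
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())

# One batch: input collected over an interval, processed in a single scheduled run.
batch = ["error disk full", "info job started", "error network timeout"]
counts = run_batch(batch)
print(counts["error"])  # → 2
```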

Real-time Processing –

- Involves running transformations as soon as data is acquired.

- Technologies used: Impala, Spark, Spark SQL, Tez, Apache Drill
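Unlike batch processing, real-time processing updates its result as each event arrives. The sketch below keeps a sliding-window average (for example, of request latency) and assumes events arrive in timestamp order; the class name, the 60-second window and the sample events are illustrative. Stream processing engines add handling for late or out-of-order events and fault tolerance, which this sketch omits.

```python
from collections import deque

class SlidingWindowAverage:
    """Average of the values seen in the last `window_seconds`, updated per event."""

    def __init__(self, window_seconds):
        self.window_seconds = window_seconds
        self.events = deque()  # (timestamp, value), oldest first
        self.total = 0.0

    def add(self, timestamp, value):
        self.events.append((timestamp, value))
        self.total += value
        # Evict events that have fallen out of the window.
        while self.events and self.events[0][0] <= timestamp - self.window_seconds:
            _, old_value = self.events.popleft()
            self.total -= old_value
        return self.total / len(self.events)

window = SlidingWindowAverage(window_seconds=60)
events = [(0, 100), (30, 200), (61, 300)]  # (seconds, latency in ms)
results = [window.add(ts, latency) for ts, latency in events]
print(results)  # → [100.0, 150.0, 250.0]
```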

Hybrid Processing –

- Combination of batch and real-time processing needs.

- Example – lambda architecture.
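In a lambda architecture, a batch layer periodically recomputes views from an append-only master dataset, a speed layer covers data that arrived since the last batch run, and queries merge both. The single-process sketch below applies this to simple event counts; the class and method names are hypothetical. In a real deployment the batch run happens concurrently with ingestion, so the speed layer would only discard data that the new batch view already covers.

```python
from collections import Counter

class LambdaCounts:
    def __init__(self):
        self.master = []             # immutable, append-only master dataset
        self.batch_view = Counter()  # recomputed from the master dataset
        self.speed_view = Counter()  # incremental counts since the last batch run

    def ingest(self, key):
        self.master.append(key)
        self.speed_view[key] += 1

    def run_batch(self):
        # Recompute the batch view from scratch, then reset the speed layer.
        self.batch_view = Counter(self.master)
        self.speed_view.clear()

    def query(self, key):
        # Merge the batch and real-time views at query time.
        return self.batch_view[key] + self.speed_view[key]

counts = LambdaCounts()
for event in ["click", "view", "click"]:
    counts.ingest(event)
counts.run_batch()       # batch view now holds click=2, view=1
counts.ingest("click")   # newer event, only in the speed view so far
print(counts.query("click"))  # → 3
```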

Consuming Data

- Involves consuming the output provided by the processing layer.

- Different users consume data in different formats.

Data consumption forms

- Export Datasets – Covers requirements to generate datasets for third parties. Datasets are generated using Hive export, or taken directly from HDFS for big data applications.

- Reporting and visualization – Reporting and visualization tools can connect to Hadoop or to a database service.

- Data Exploration – Data scientists build models and perform deep exploration in a sandbox environment. The sandbox can be a separate cluster, or a separate schema within the same cluster, that contains a subset of the actual data.

- Ad hoc Querying – Ad hoc or interactive querying can be supported using Hive, Impala, or Spark SQL.
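For the data exploration sandbox, one way to build a subset of the actual data is hash-based sampling: a record is included when a hash of its key falls below the sampling fraction. This keeps the same records in the sandbox across refreshes, and a smaller sample is always contained in a larger one. The sketch below uses SHA-256 because Python's built-in `hash()` of strings is randomized per process; the function name, the integer customer IDs and the 1% fraction are illustrative.

```python
import hashlib

def in_sample(record_key, fraction):
    """Deterministically decide whether a record belongs to the sandbox sample."""
    digest = hashlib.sha256(str(record_key).encode("utf-8")).digest()
    # Map the first 6 bytes (48 bits, exact in a float) to a number in [0, 1).
    bucket = int.from_bytes(digest[:6], "big") / 2**48
    return bucket < fraction

customer_ids = range(100_000)
sandbox = [cid for cid in customer_ids if in_sample(cid, 0.01)]
print(len(sandbox))  # close to 1% of the keys
```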

Important considerations

- Dynamics of use case: A number of scenarios need to be considered while designing the architecture:

  - Form and frequency of data

  - Type of data

  - Type of processing and analytics required

- Myriad of technologies: Multiple technologies offer similar features and claim to be better than the others.
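A weighted scoring matrix is one simple way to compare competing technologies against the dynamics of a specific use case. In the sketch below, the criteria, weights, 1 to 5 scores and option names are all hypothetical placeholders; in practice they would come from the use case requirements and from proof-of-concept testing.

```python
def rank_technologies(weights, scores):
    """Rank options by weighted score.

    weights: {criterion: weight}
    scores:  {option: {criterion: score}}; a missing score counts as 0
    Returns a list of (option, total) pairs, highest total first.
    """
    totals = {
        option: sum(weight * option_scores.get(criterion, 0)
                    for criterion, weight in weights.items())
        for option, option_scores in scores.items()
    }
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)

# Hypothetical criteria, weights and scores.
weights = {"latency": 0.5, "team_skills": 0.3, "maturity": 0.2}
scores = {
    "option_a": {"latency": 5, "team_skills": 2, "maturity": 3},
    "option_b": {"latency": 3, "team_skills": 5, "maturity": 4},
}
ranking = rank_technologies(weights, scores)
for option, total in ranking:
    print(f"{option}: {total:.2f}")
```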