Determine AWS Data Access and Retrieval Patterns

The AWS Big Data exam has been updated to the AWS Certified Data Analytics Specialty exam.

AWS Data Access Selection

The right AWS storage service for data access depends on your requirements:

| If You Need | Consider Using |
| --- | --- |
| Persistent local storage for Amazon EC2, for relational and NoSQL databases, data warehousing, enterprise applications, Big Data processing, or backup and recovery | Amazon Elastic Block Store (Amazon EBS) |
| A simple, scalable, elastic file system for Linux-based workloads for use with AWS Cloud services and on-premises resources. It is built to scale on demand to petabytes without disrupting applications, growing and shrinking automatically as you add and remove files, so your applications have the storage they need when they need it. | Amazon Elastic File System (Amazon EFS) |
| A fully managed file system that is optimized for compute-intensive workloads, such as high performance computing, machine learning, and media data processing workflows, and is seamlessly integrated with Amazon S3 | Amazon FSx for Lustre |
| A fully managed native Microsoft Windows file system built on Windows Server so you can easily move your Windows-based applications that require file storage to AWS, including full support for the SMB protocol and Windows NTFS, Active Directory (AD) integration, and Distributed File System (DFS). | Amazon FSx for Windows File Server |
| A scalable, durable platform to make data accessible from any Internet location, for user-generated content, active archive, serverless computing, Big Data storage or backup and recovery | Amazon Simple Storage Service (Amazon S3) |
| Highly affordable long-term storage that can replace tape for archive and regulatory compliance | Amazon S3 Glacier |
| A hybrid storage cloud augmenting your on-premises environment with Amazon cloud storage, for bursting, tiering or migration | AWS Storage Gateway |
| A portfolio of services to help simplify and accelerate moving data of all types and sizes into and out of the AWS cloud | Cloud Data Migration Services |
| A fully managed backup service that makes it easy to centralize and automate the backup of data across AWS services in the cloud as well as on premises using the AWS Storage Gateway. | AWS Backup |

Data Design Patterns

In data modeling, the following terminology is used:

- 1:1 modeling: One-to-one relationship modeling using a partition key as the primary key.

- 1:M modeling: One-to-many relationship modeling using a partition key and a sort key as the primary key.

- N:M modeling: Many-to-many relationship modeling using a partition key and a sort key as the primary key with a table and a global secondary index.

One-to-one (data model)

A one-to-one relationship is a type of cardinality that describes the relationship between two entities A and B in which one element of A may be linked to only one element of B, and vice versa. In mathematical terms, there is a bijective function from A to B. For example, consider A to be the set of all humans and B to be the set of all human brains. Any individual from A can and must have only one brain from B, and any human brain in B can and must belong to only one individual from A.

In a relational database, a one-to-one relationship exists when one row in a table may be linked with only one row in another table and vice versa. It is important to note that a one-to-one relationship is not a property of the data, but rather of the relationship itself. A list of mothers and their children may happen to describe mothers with only one child, in which case one row of the mothers table will refer to only one row of the children table and vice versa, but the relationship itself is not one-to-one, because mothers may have more than one child, thus forming a one-to-many relationship.

One-to-many

A one-to-many relationship is a type of cardinality that refers to the relationship between two entities A and B (see also entity–relationship model) in which an element of A may be linked to many elements of B, but an element of B is linked to only one element of A. For instance, think of A as books and B as pages. A book can have many pages, but a page can only be in one book.

In a relational database, a one-to-many relationship exists when one row in table A may be linked with many rows in table B, but one row in table B is linked to only one row in table A. It is important to note that a one-to-many relationship is not a property of the data, but rather of the relationship itself. A list of authors and their books may happen to describe books with only one author, in which case one row of the books table will refer to only one row of the authors table, but the relationship itself is not one-to-many, because books may have more than one author, forming a many-to-many relationship.

The opposite of one-to-many is many-to-one.

Many-to-many

A many-to-many relationship is a type of cardinality that refers to the relationship between two entities A and B in which an instance of A may be linked to many instances of B, and vice versa.

For example, think of A as Authors, and B as Books. An Author can write several Books, and a Book can be written by several Authors.

In a relational database management system, such relationships are usually implemented by means of an associative table (also known as join table, junction table or cross-reference table), say, AB with two one-to-many relationships A -> AB and B -> AB. In this case the logical primary key for AB is formed from the two foreign keys (i.e. copies of the primary keys of A and B).
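The associative-table approach described above can be sketched with Python's built-in `sqlite3` module. This is a minimal illustration, not a production schema: the table names (`authors`, `books`, `author_book`), the sample rows, and the helper functions are all assumptions made for the example.

```python
import sqlite3

# In-memory database so the example leaves nothing behind.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE authors (author_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE books   (book_id   INTEGER PRIMARY KEY, title TEXT NOT NULL);
-- Associative (junction) table: its primary key is the pair of foreign keys.
CREATE TABLE author_book (
    author_id INTEGER NOT NULL REFERENCES authors(author_id),
    book_id   INTEGER NOT NULL REFERENCES books(book_id),
    PRIMARY KEY (author_id, book_id)
);
""")

# Hypothetical sample data: "Keys" has two authors, Ann wrote two books.
conn.executemany("INSERT INTO authors VALUES (?, ?)", [(1, "Ann"), (2, "Bob")])
conn.executemany("INSERT INTO books VALUES (?, ?)", [(10, "Keys"), (20, "Indexes")])
conn.executemany("INSERT INTO author_book VALUES (?, ?)", [(1, 10), (2, 10), (1, 20)])


def authors_of(title):
    """Return the names of all authors linked to the given book title."""
    rows = conn.execute(
        """SELECT a.name FROM authors a
           JOIN author_book ab ON ab.author_id = a.author_id
           JOIN books b ON b.book_id = ab.book_id
           WHERE b.title = ? ORDER BY a.name""",
        (title,),
    )
    return [row[0] for row in rows]


def books_by(name):
    """Return the titles of all books linked to the given author name."""
    rows = conn.execute(
        """SELECT b.title FROM books b
           JOIN author_book ab ON ab.book_id = b.book_id
           JOIN authors a ON a.author_id = ab.author_id
           WHERE a.name = ? ORDER BY b.title""",
        (name,),
    )
    return [row[0] for row in rows]
```

Each side of the relationship is a plain one-to-many join against `author_book`, which is why the same junction table answers both "who wrote this book" and "what did this author write".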

AWS Data Access – Amazon S3

Amazon S3 has a simple web services interface that you can use to store and retrieve any amount of data, at any time, from anywhere on the web. Access control defines who can access objects and buckets within Amazon S3, and the type of access (e.g., READ and WRITE). Authentication verifies the identity of a user who is trying to access Amazon Web Services (AWS).

Amazon S3 Concepts

Buckets – A bucket is a container for objects stored in Amazon S3. Every object is contained in a bucket. For example, if the object named photos/puppy.jpg is stored in the johnsmith bucket, then it is addressable using the URL http://johnsmith.s3.amazonaws.com/photos/puppy.jpg. Buckets serve several purposes: they organize the Amazon S3 namespace at the highest level, they identify the account responsible for storage and data transfer charges, they play a role in access control, and they serve as the unit of aggregation for usage reporting. You can configure buckets so that they are created in a specific region. You can also configure a bucket so that every time an object is added to it, Amazon S3 generates a unique version ID and assigns it to the object.

The following are the rules for naming S3 buckets in all AWS Regions:

- Bucket names must be unique across all existing bucket names in Amazon S3.

- Bucket names must comply with DNS naming conventions.

- Bucket names must be at least 3 and no more than 63 characters long.

- Bucket names must not contain uppercase characters or underscores.

- Bucket names must start with a lowercase letter or number.

- Bucket names must be a series of one or more labels. Adjacent labels are separated by a single period (.). Bucket names can contain lowercase letters, numbers, and hyphens. Each label must start and end with a lowercase letter or a number.

- Bucket names must not be formatted as an IP address (for example, 192.168.5.4).

- When you use virtual hosted–style buckets with Secure Sockets Layer (SSL), the SSL wildcard certificate only matches buckets that don’t contain periods. To work around this, use HTTP or write your own certificate verification logic. We recommend that you do not use periods (“.”) in bucket names when using virtual hosted–style buckets.
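Most of the naming rules above can be checked locally before you attempt to create a bucket. The following is a rough sketch of such a check; the function name `is_valid_bucket_name` is hypothetical, and global uniqueness cannot be verified offline, so that rule is deliberately left out.

```python
import re

# One label: lowercase letters, digits and hyphens, starting and ending
# with a lowercase letter or digit.
LABEL = re.compile(r"[a-z0-9]([a-z0-9-]*[a-z0-9])?")
# Four dot-separated groups of digits, i.e. something shaped like an IP address.
IP_LIKE = re.compile(r"[0-9]{1,3}(\.[0-9]{1,3}){3}")


def is_valid_bucket_name(name: str) -> bool:
    """Check the locally verifiable bucket naming rules listed above."""
    if not 3 <= len(name) <= 63:
        return False
    if IP_LIKE.fullmatch(name):
        return False
    # Splitting on "." yields an empty label for leading, trailing or
    # doubled periods, and an empty label never matches LABEL.
    return all(LABEL.fullmatch(label) for label in name.split("."))
```

Uppercase letters and underscores are rejected implicitly because neither is in the character class used for labels.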

Objects – Objects are the fundamental entities stored in Amazon S3. Objects consist of object data and metadata. The data portion is opaque to Amazon S3. The metadata is a set of name-value pairs that describe the object. These include some default metadata, such as the date last modified, and standard HTTP metadata, such as Content-Type. You can also specify custom metadata at the time the object is stored. An object is uniquely identified within a bucket by a key (name) and a version ID.

Keys – A key is the unique identifier for an object within a bucket. Every object in a bucket has exactly one key. Because the combination of a bucket, key, and version ID uniquely identify each object, Amazon S3 can be thought of as a basic data map between “bucket + key + version” and the object itself. Every object in Amazon S3 can be uniquely addressed through the combination of the web service endpoint, bucket name, key, and optionally, a version. For example, in the URL http://doc.s3.amazonaws.com/2006-03-01/AmazonS3.wsdl, “doc” is the name of the bucket and “2006-03-01/AmazonS3.wsdl” is the key.
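To make the bucket and key addressing concrete, here is a small sketch that splits a virtual hosted–style URL of the form used in the examples above into its bucket and key. The function name is hypothetical, and the sketch handles only the `<bucket>.s3.amazonaws.com` host form shown in the text; it does not decode percent-encoded keys or handle version IDs.

```python
from urllib.parse import urlparse

S3_HOST_SUFFIX = ".s3.amazonaws.com"


def split_virtual_hosted_url(url: str):
    """Return (bucket, key) for a URL like http://<bucket>.s3.amazonaws.com/<key>."""
    parsed = urlparse(url)
    host = parsed.hostname or ""
    if not host.endswith(S3_HOST_SUFFIX):
        raise ValueError(f"not a virtual hosted-style S3 URL: {url}")
    bucket = host[: -len(S3_HOST_SUFFIX)]
    key = parsed.path.lstrip("/")  # the key is everything after the first slash
    return bucket, key
```

For the URL in the text, the bucket is `doc` and the key is `2006-03-01/AmazonS3.wsdl`; the slash inside the key is part of the name, not a directory separator.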

Regions – You can choose the geographical region where Amazon S3 will store the buckets you create. You might choose a region to optimize latency, minimize costs, or address regulatory requirements. Objects stored in a region never leave the region unless you explicitly transfer them to another region. For example, objects stored in the EU (Ireland) region never leave it.

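Amazon S3 Data Consistency Model – Since December 2020, Amazon S3 delivers strong read-after-write consistency for all PUT and DELETE requests in all regions, so the eventual-consistency behaviors described in this section apply only to the earlier model. Under that earlier model, Amazon S3 provided read-after-write consistency for PUTs of new objects in all regions with one caveat: if you made a HEAD or GET request to the key name (to find out whether the object exists) before creating the object, Amazon S3 provided only eventual consistency for the subsequent read.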

Amazon S3 offers eventual consistency for overwrite PUTS and DELETES in all regions.

Updates to a single key are atomic. For example, if you PUT to an existing key, a subsequent read might return the old data or the updated data, but it will never return corrupted or partial data.

Amazon S3 achieves high availability by replicating data across multiple servers within Amazon’s data centers. If a PUT request is successful, your data is safely stored. However, information about the changes must replicate across Amazon S3, which can take some time, and so you might observe the following behaviors:

- A process writes a new object to Amazon S3 and immediately lists keys within its bucket. Until the change is fully propagated, the object might not appear in the list.

- A process replaces an existing object and immediately attempts to read it. Until the change is fully propagated, Amazon S3 might return the prior data.

- A process deletes an existing object and immediately attempts to read it. Until the deletion is fully propagated, Amazon S3 might return the deleted data.

- A process deletes an existing object and immediately lists keys within its bucket. Until the deletion is fully propagated, Amazon S3 might list the deleted object.

The following table describes the characteristics of eventually consistent read and consistent read.

| Eventually Consistent Read | Consistent Read |
| --- | --- |
| Stale reads possible | No stale reads |
| Lowest read latency | Potential higher read latency |
| Highest read throughput | Potential lower read throughput |
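The trade-off in the table above can be illustrated with a toy model. This is only a sketch under simplifying assumptions: a single primary copy, a single replica, and an explicit `propagate()` step standing in for replication delay. It is not how Amazon S3 or any other AWS service is implemented, and the class and method names are invented for the example.

```python
class ReplicatedStore:
    """Toy key-value store with one primary copy and one lagging replica."""

    def __init__(self):
        self.primary = {}
        self.replica = {}
        self.pending = []  # writes accepted by the primary but not yet replicated

    def write(self, key, value):
        # A write succeeds as soon as the primary has it.
        self.primary[key] = value
        self.pending.append((key, value))

    def propagate(self):
        # Stand-in for the time replication takes: apply queued writes in order.
        for key, value in self.pending:
            self.replica[key] = value
        self.pending.clear()

    def read(self, key, consistent=False):
        # A consistent read goes to the primary; an eventually consistent
        # read goes to the replica and may therefore return stale data.
        source = self.primary if consistent else self.replica
        return source.get(key)
```

Until `propagate()` runs, an eventually consistent read returns the previous value (or nothing for a brand-new key), while a consistent read always reflects the latest successful write.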

Concurrent Applications

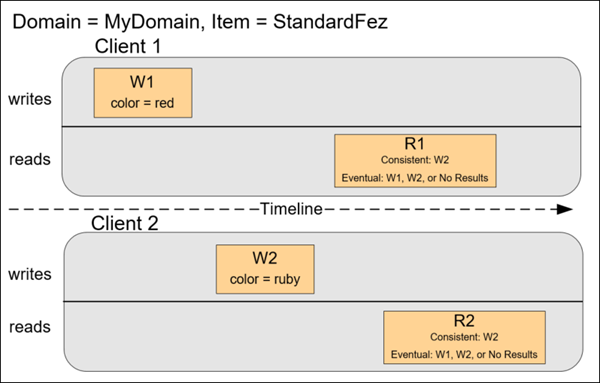

In this example, both W1 (write 1) and W2 (write 2) complete before the start of R1 (read 1) and R2 (read 2). For a consistent read, R1 and R2 both return color = ruby. For an eventually consistent read, R1 and R2 might return color = red or color = ruby depending on the amount of time that has elapsed.

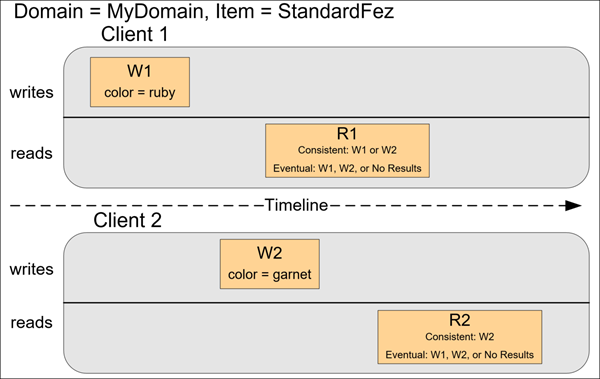

In the next example, W2 does not complete before the start of R1. Therefore, R1 might return color = ruby or color = garnet for either a consistent read or an eventually consistent read. Also, depending on the amount of time that has elapsed, an eventually consistent read might return no results.

For a consistent read, R2 returns color = garnet. For an eventually consistent read, R2 might return color = ruby or color = garnet depending on the amount of time that has elapsed.

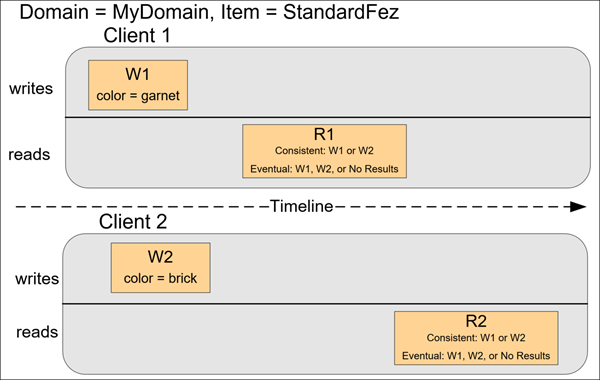

In the last example, Client 2 performs W2 before Amazon S3 returns a success for W1, so the outcome of the final value is unknown (color = garnet or color = brick). Any subsequent reads (consistent read or eventually consistent) might return either value. Also, depending on the amount of time that has elapsed, an eventually consistent read might return no results.

Bucket Policies

Bucket policies provide centralized access control to buckets and objects based on a variety of conditions, including Amazon S3 operations, requesters, resources, and aspects of the request (e.g., IP address). The policies are expressed in the access policy language and enable centralized management of permissions. The permissions attached to a bucket apply to all of the objects in that bucket.

Individuals as well as companies can use bucket policies. When companies register with Amazon S3 they create an account. Thereafter, the company becomes synonymous with the account. Accounts are financially responsible for the Amazon resources they (and their employees) create. Accounts have the power to grant bucket policy permissions and assign employees permissions based on a variety of conditions. For example, an account could create a policy that gives a user write access:

- To a particular S3 bucket

- From an account’s corporate network

- During business hours

An account can grant one user limited read and write access, but allow another to create and delete buckets as well. An account could allow several field offices to store their daily reports in a single bucket, allowing each office to write only to a certain set of names (e.g., “Nevada/*” or “Utah/*”) and only from the office’s IP address range.

Unlike access control lists (ACLs), which can add (grant) permissions only on individual objects, policies can either add or deny permissions across all (or a subset) of objects within a bucket. With one request an account can set the permissions of any number of objects in a bucket. An account can use wildcards (similar to regular expression operators) on Amazon resource names (ARNs) and other values, so that an account can control access to groups of objects that begin with a common prefix or end with a given extension such as .html.

Only the bucket owner is allowed to associate a policy with a bucket. Policies, written in the access policy language, allow or deny requests based on:

- Amazon S3 bucket operations (such as PUT ?acl), and object operations (such as PUT Object, or GET Object)

- Requester

- Conditions specified in the policy

An account can control access based on specific Amazon S3 operations, such as GetObject, GetObjectVersion, DeleteObject, or DeleteBucket.

The conditions can be such things as IP addresses, IP address ranges in CIDR notation, dates, user agents, HTTP referrer and transports (HTTP and HTTPS).
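As an illustration of the field-office scenario described earlier, the sketch below builds a bucket policy document as a Python dictionary and serializes it to JSON. The bucket name, account ID, user name, and IP range are placeholders invented for the example; only the policy element names (`Version`, `Statement`, `Effect`, `Principal`, `Action`, `Resource`, `Condition`) come from the IAM policy language.

```python
import json

BUCKET = "example-reports-bucket"   # hypothetical bucket name
ACCOUNT_ID = "111122223333"         # placeholder AWS account ID
OFFICE_CIDR = "203.0.113.0/24"      # documentation-only IP range

policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "NevadaOfficeWrites",
            "Effect": "Allow",
            # Requester: a single (hypothetical) IAM user.
            "Principal": {"AWS": f"arn:aws:iam::{ACCOUNT_ID}:user/nevada-office"},
            # Operation: writing objects only.
            "Action": "s3:PutObject",
            # Resource: the wildcard limits writes to keys under "Nevada/".
            "Resource": f"arn:aws:s3:::{BUCKET}/Nevada/*",
            # Condition: requests must come from the office's IP range.
            "Condition": {"IpAddress": {"aws:SourceIp": OFFICE_CIDR}},
        }
    ],
}

policy_json = json.dumps(policy, indent=2)
```

The resulting `policy_json` string is what the bucket owner would attach to the bucket; a second statement with a `Utah/*` resource and a different IP range would cover another office.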

Storage Tiers

Amazon S3 Storage Classes

The following table compares the storage classes.

| Storage Class | Designed for | Durability (designed for) | Availability (designed for) | Availability Zones | Min storage duration | Min billable object size | Other Considerations |
| --- | --- | --- | --- | --- | --- | --- | --- |
| STANDARD | Frequently accessed data | 99.999999999% | 99.99% | >= 3 | None | None | None |
| STANDARD_IA | Long-lived, infrequently accessed data | 99.999999999% | 99.9% | >= 3 | 30 days | 128 KB | Per GB retrieval fees apply. |
| INTELLIGENT_TIERING | Long-lived data with changing or unknown access patterns | 99.999999999% | 99.9% | >= 3 | 30 days | None | Monitoring and automation fees per object apply. No retrieval fees. |
| ONEZONE_IA | Long-lived, infrequently accessed, non-critical data | 99.999999999% | 99.5% | 1 | 30 days | 128 KB | Per GB retrieval fees apply. Not resilient to the loss of the Availability Zone. |
| GLACIER | Long-term data archiving with retrieval times ranging from minutes to hours | 99.999999999% | 99.99% (after you restore objects) | >= 3 | 90 days | None | Per GB retrieval fees apply. You must first restore archived objects before you can access them. For more information, see Restoring Archived Objects. |
| DEEP_ARCHIVE | Archiving rarely accessed data with a default retrieval time of 12 hours | 99.999999999% | 99.99% (after you restore objects) | >= 3 | 180 days | None | Per GB retrieval fees apply. You must first restore archived objects before you can access them. For more information, see Restoring Archived Objects. |
| RRS (Not recommended) | Frequently accessed, non-critical data | 99.99% | 99.99% | >= 3 | None | None | None |

All of the storage classes except for ONEZONE_IA are designed to be resilient to simultaneous complete data loss in a single Availability Zone and partial loss in another Availability Zone.

Operations

The following are the most common operations you’ll execute through the API.

Common Operations

- Create a Bucket – Create and name your own bucket in which to store your objects.

- Write an Object – Store data by creating or overwriting an object. When you write an object, you specify a unique key in the namespace of your bucket. This is also a good time to specify any access control you want on the object.

- Read an Object – Read data back. You can download the data via HTTP or BitTorrent.

- Delete an Object – Delete some of your data.

- List Keys – List the keys contained in one of your buckets. You can filter the key list based on a prefix.

Encryption

Server-side encryption is about data encryption at rest: Amazon S3 encrypts your data at the object level as it writes it to disks in its data centers and decrypts it for you when you access it. As long as you authenticate your request and you have access permissions, there is no difference in the way you access encrypted or unencrypted objects. For example, if you share your objects using a presigned URL, that URL works the same way for both encrypted and unencrypted objects.

You can’t apply different types of server-side encryption to the same object simultaneously.

You have three mutually exclusive options depending on how you choose to manage the encryption keys:

- Use Server-Side Encryption with Amazon S3-Managed Keys (SSE-S3) – Each object is encrypted with a unique key. As an additional safeguard, Amazon S3 encrypts the key itself with a master key that it regularly rotates. Amazon S3 server-side encryption uses one of the strongest block ciphers available, 256-bit Advanced Encryption Standard (AES-256), to encrypt your data.

- Use Server-Side Encryption with AWS KMS-Managed Keys (SSE-KMS) – Similar to SSE-S3, but with some additional benefits along with some additional charges for using this service. There are separate permissions for the use of an envelope key (that is, a key that protects your data’s encryption key), which provides added protection against unauthorized access to your objects in Amazon S3. SSE-KMS also provides you with an audit trail of when your key was used and by whom. Additionally, you have the option to create and manage encryption keys yourself, or use a default key that is unique to you, the service you’re using, and the Region you’re working in.

- Use Server-Side Encryption with Customer-Provided Keys (SSE-C) – You manage the encryption keys and Amazon S3 manages the encryption, as it writes to disks, and decryption, when you access your objects.
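With SSE-C, the key travels with each request. As a rough sketch, the snippet below prepares the three request headers that the Amazon S3 REST API defines for SSE-C, using only the standard library. The helper name `sse_c_headers` is hypothetical, and the sketch stops at building the headers; signing and sending the request, and storing the key safely, are out of scope.

```python
import base64
import hashlib
import os


def sse_c_headers(key: bytes) -> dict:
    """Build the SSE-C request headers for a 256-bit customer-provided key."""
    if len(key) != 32:
        raise ValueError("SSE-C expects a 256-bit (32-byte) key")
    return {
        "x-amz-server-side-encryption-customer-algorithm": "AES256",
        # The key itself, base64-encoded.
        "x-amz-server-side-encryption-customer-key":
            base64.b64encode(key).decode("ascii"),
        # Base64-encoded MD5 digest of the key, used as an integrity check.
        "x-amz-server-side-encryption-customer-key-MD5":
            base64.b64encode(hashlib.md5(key).digest()).decode("ascii"),
    }


# Example: a freshly generated random key (you are responsible for keeping it).
example_headers = sse_c_headers(os.urandom(32))
```

Because you manage the key, the same headers have to accompany every later read of the object; losing the key means losing access to the data.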

Client-side encryption – Client-side encryption is the act of encrypting data before sending it to Amazon S3. To enable client-side encryption, you have the following options:

- Use an AWS KMS-managed customer master key.

- Use a client-side master key.

The following AWS SDKs support client-side encryption:

- AWS SDK for .NET

- AWS SDK for Go

- AWS SDK for Java

- AWS SDK for PHP

- AWS SDK for Ruby

- AWS SDK for C++

S3 Logging

To track requests for access to your bucket, you can enable server access logging. Each access log record provides details about a single access request, such as the requester, bucket name, request time, request action, response status, and an error code, if relevant.

To enable access logging, you must do the following:

- Turn on the log delivery by adding logging configuration on the bucket for which you want Amazon S3 to deliver access logs. We refer to this bucket as the source bucket.

- Grant the Amazon S3 Log Delivery group write permission on the bucket where you want the access logs saved. We refer to this bucket as the target bucket.

To turn on log delivery, you provide the following logging configuration information:

- The name of the target bucket where you want Amazon S3 to save the access logs as objects. You can have logs delivered to any bucket that you own that is in the same Region as the source bucket, including the source bucket itself.

- We recommend that you save access logs in a different bucket so that you can easily manage the logs. If you choose to save access logs in the source bucket, we recommend that you specify a prefix for all log object keys so that the object names begin with a common string and the log objects are easier to identify.

- When your source bucket and target bucket are the same bucket, additional logs are created for the logs that are written to the bucket. This behavior might not be ideal for your use case because it could result in a small increase in your storage billing. In addition, the extra logs about logs might make it harder to find the log that you’re looking for.

Amazon S3 uses the following object key format for the log objects it uploads in the target bucket:

TargetPrefixYYYY-mm-DD-HH-MM-SS-UniqueString

In the key, YYYY, mm, DD, HH, MM, and SS are the digits of the year, month, day, hour, minute, and seconds (respectively) when the log file was delivered. A log file delivered at a specific time can contain records written at any point before that time. There is no way to know whether all log records for a certain time interval have been delivered or not. The UniqueString component of the key is there to prevent overwriting of files. It has no meaning, and log processing software should ignore it.
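A log-processing script can recover the prefix and delivery time from a key in this format while ignoring the unique string, as the text recommends. The sketch below assumes that `UniqueString` contains no hyphens and that the sample key is representative; both are assumptions for the example, and the function name is invented.

```python
import re
from datetime import datetime

# <prefix><YYYY-mm-DD-HH-MM-SS>-<UniqueString>
LOG_KEY = re.compile(
    r"(?P<prefix>.*)"
    r"(?P<ts>\d{4}-\d{2}-\d{2}-\d{2}-\d{2}-\d{2})"
    r"-(?P<unique>[^-]+)"
)


def parse_log_key(key: str):
    """Return (target_prefix, delivery_time) for an access log object key."""
    match = LOG_KEY.fullmatch(key)
    if match is None:
        raise ValueError(f"not an access log key: {key}")
    delivered = datetime.strptime(match.group("ts"), "%Y-%m-%d-%H-%M-%S")
    # The unique string only prevents overwrites, so it is dropped here.
    return match.group("prefix"), delivered
```

Keep in mind that the timestamp is the delivery time of the file, so the records inside may describe requests made earlier.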

AWS Data Access – Amazon S3 Glacier

Glacier is an extremely low-cost storage service that provides durable storage with security features for data archiving and backup. With Glacier, customers can store their data cost effectively for months, years, or even decades. Glacier enables customers to offload the administrative burdens of operating and scaling storage to AWS, so they don’t have to worry about capacity planning, hardware provisioning, data replication, hardware failure detection and recovery, or time-consuming hardware migrations.

Amazon S3 Glacier Data Model – The Amazon S3 Glacier (Glacier) data model core concepts include vaults and archives. Glacier is a REST-based web service. In terms of REST, vaults and archives are the resources. In addition, the Glacier data model includes job and notification-configuration resources. These resources complement the core resources.

Vault – In Glacier, a vault is a container for storing archives. When you create a vault, you specify a name and choose an AWS Region where you want to create the vault. Each vault resource has a unique address. The general form is:

https://<region-specific endpoint>/<account-id>/vaults/<vaultname>

You can store an unlimited number of archives in a vault. Depending on your business or application needs, you can store these archives in one vault or multiple vaults.

Glacier supports various vault operations. Note that vault operations are Region specific. For example, when you create a vault, you create it in a specific Region. When you request a vault list, you request it from a specific AWS Region, and the resulting list only includes vaults created in that specific Region.

Archive – An archive can be any data such as a photo, video, or document and is a base unit of storage in Glacier. Each archive has a unique ID and an optional description. Note that you can only specify the optional description during the upload of an archive. Glacier assigns the archive an ID, which is unique in the AWS Region in which it is stored. Each archive has a unique address. The general form is as follows:

https://<region-specific endpoint>/<account-id>/vaults/<vault-name>/archives/<archive-id>

Job – Glacier jobs can perform a select query on an archive, retrieve an archive, or get an inventory of a vault. When performing a query on an archive, you initiate a job providing a SQL query and list of Glacier archive objects. Glacier Select runs the query in place and writes the output results to Amazon S3.

Retrieving an archive and vault inventory (list of archives) are asynchronous operations in Glacier in which you first initiate a job, and then download the job output after Glacier completes the job.

Notification Configuration – Because jobs take time to complete, Glacier supports a notification mechanism to notify you when a job is complete. You can configure a vault to send notification to an Amazon Simple Notification Service (Amazon SNS) topic when jobs complete. You can specify one SNS topic per vault in the notification configuration. Glacier stores the notification configuration as a JSON document.

Amazon S3 Glacier (Glacier) supports a set of operations. Among all the supported operations, only the following operations are asynchronous:

- Retrieving an archive

- Retrieving a vault inventory (list of archives)

These operations require you to first initiate a job and then download the job output.

Archive Retrieval Options – You can specify one of the following when initiating a job to retrieve an archive, based on your access time and cost requirements.

- Expedited – Expedited retrievals allow you to quickly access your data when occasional urgent requests for a subset of archives are required. For all but the largest archives (250 MB+), data accessed using Expedited retrievals is typically made available within 1–5 minutes. Provisioned Capacity ensures that retrieval capacity for Expedited retrievals is available when you need it.

- Standard – Standard retrievals allow you to access any of your archives within several hours. Standard retrievals typically complete within 3–5 hours. This is the default option for retrieval requests that do not specify the retrieval option.

- Bulk – Bulk retrievals are Glacier’s lowest-cost retrieval option, which you can use to retrieve large amounts, even petabytes, of data inexpensively in a day. Bulk retrievals typically complete within 5–12 hours.
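One way to reason about these options is to pick the cheapest tier whose typical completion time fits a deadline. The sketch below uses the upper end of the typical ranges quoted above. It assumes the cost order Bulk, then Standard, then Expedited (the text only states that Bulk is the lowest-cost option), and it ignores the 250 MB+ caveat for Expedited retrievals. The function name is hypothetical.

```python
# Upper end of the typical completion time for each tier, in minutes.
TYPICAL_MAX_MINUTES = {
    "Bulk": 12 * 60,      # 5-12 hours
    "Standard": 5 * 60,   # 3-5 hours
    "Expedited": 5,       # 1-5 minutes
}

# Assumed order from cheapest to most expensive.
CHEAPEST_FIRST = ("Bulk", "Standard", "Expedited")


def pick_retrieval_tier(deadline_minutes: int):
    """Return the cheapest tier that typically finishes within the deadline."""
    for tier in CHEAPEST_FIRST:
        if TYPICAL_MAX_MINUTES[tier] <= deadline_minutes:
            return tier
    return None  # no tier typically completes this quickly
```

These are typical times rather than guarantees, so a real workflow would still wait for the job-completion notification instead of relying on the estimate.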

Amazon S3 Glacier Vault – Creating a vault adds a vault to the set of vaults in your account. An AWS account can create up to 1,000 vaults per region.

When you create a vault, you must provide a vault name. The following are the vault naming requirements:

- Names can be between 1 and 255 characters long.

- Allowed characters are a–z, A–Z, 0–9, ‘_’ (underscore), ‘-’ (hyphen), and ‘.’ (period).

Vault names must be unique within an account and the region in which the vault is being created. That is, an account can create vaults with the same name in different regions but not in the same region.
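The naming requirements and the vault address form given earlier can be combined in a short sketch. The function names are hypothetical, the endpoint and account ID in the usage line are placeholders, and per-Region uniqueness can only be checked by the service itself.

```python
import re

# 1 to 255 characters drawn from a-z, A-Z, 0-9, underscore, hyphen, period.
VAULT_NAME = re.compile(r"[A-Za-z0-9_.-]{1,255}")


def is_valid_vault_name(name: str) -> bool:
    """Check the length and character rules for a vault name."""
    return VAULT_NAME.fullmatch(name) is not None


def vault_url(endpoint: str, account_id: str, vault_name: str) -> str:
    """Build https://<region-specific endpoint>/<account-id>/vaults/<vaultname>."""
    if not is_valid_vault_name(vault_name):
        raise ValueError(f"invalid vault name: {vault_name!r}")
    return f"https://{endpoint}/{account_id}/vaults/{vault_name}"


# Placeholder endpoint and account ID, for illustration only.
example_url = vault_url("glacier.us-west-2.amazonaws.com", "111122223333", "examplevault")
```

Unlike S3 bucket names, vault names may contain uppercase letters and underscores, which is why the two validators differ.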

You can retrieve vault information such as the vault creation date, number of archives in the vault, and the total size of all the archives in the vault. Amazon S3 Glacier (Glacier) provides API calls for you to retrieve this information for a specific vault or all the vaults in a specific region in your account.

If you retrieve a vault list, Glacier returns the list sorted by the ASCII values of the vault names. The list contains up to 1,000 vaults. You should always check the response for a marker at which to continue the list; if there are no more items the marker field is null. You can optionally limit the number of vaults returned in the response. If there are more vaults than are returned in the response, the result is paginated. You need to send additional requests to fetch the next set of vaults.
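The marker-based pagination described above follows a common loop: request a page, collect its items, and repeat with the returned marker until the marker is null. The sketch below demonstrates that loop against an in-memory stand-in. `fake_list_vaults` and the vault names are invented for the example, and the `VaultList` and `Marker` field names are modeled on the description rather than taken from a live response.

```python
ALL_VAULTS = sorted(["archive", "backups", "logs", "media", "reports"])


def fake_list_vaults(marker=None, limit=2):
    """Stand-in for the service call: return one page plus the next marker."""
    start = 0 if marker is None else ALL_VAULTS.index(marker)
    end = start + limit
    next_marker = ALL_VAULTS[end] if end < len(ALL_VAULTS) else None
    return {"VaultList": ALL_VAULTS[start:end], "Marker": next_marker}


def list_all_vaults(list_page):
    """Follow the marker until it is None and return every vault."""
    vaults = []
    marker = None
    while True:
        response = list_page(marker=marker)
        vaults.extend(response["VaultList"])
        marker = response["Marker"]
        if marker is None:  # a null marker means there are no more pages
            return vaults
```

Passing the page function in as an argument keeps the loop independent of how the request is actually made, so the same loop could wrap a real client call.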

AWS Data Access – Amazon DynamoDB

Amazon DynamoDB is a fully managed NoSQL database service that provides fast and predictable performance with seamless scalability. DynamoDB lets you offload the administrative burdens of operating and scaling a distributed database, so that you don’t have to worry about hardware provisioning, setup and configuration, replication, software patching, or cluster scaling. Also, DynamoDB offers encryption at rest, which eliminates the operational burden and complexity involved in protecting sensitive data.

With DynamoDB, you can create database tables that can store and retrieve any amount of data, and serve any level of request traffic. You can scale up or scale down your tables’ throughput capacity without downtime or performance degradation, and use the AWS Management Console to monitor resource utilization and performance metrics. Amazon DynamoDB provides on-demand backup capability. It allows you to create full backups of your tables for long-term retention and archival for regulatory compliance needs.

In DynamoDB, tables, items, and attributes are the core components that you work with. A table is a collection of items, and each item is a collection of attributes. DynamoDB uses primary keys to uniquely identify each item in a table and secondary indexes to provide more querying flexibility. You can use DynamoDB Streams to capture data modification events in DynamoDB tables.

DynamoDB components

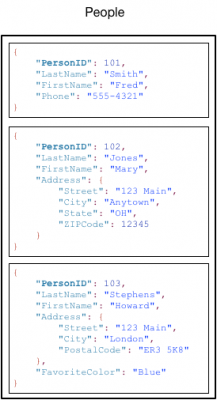

- Tables – Similar to other database systems, DynamoDB stores data in tables. A table is a collection of data. For example, see the example table called People that you could use to store personal contact information about friends, family, or anyone else of interest. You could also have a Cars table to store information about vehicles that people drive.

- Items – Each table contains zero or more items. An item is a group of attributes that is uniquely identifiable among all of the other items. In a People table, each item represents a person. For a Cars table, each item represents one vehicle. Items in DynamoDB are similar in many ways to rows, records, or tuples in other database systems. In DynamoDB, there is no limit to the number of items you can store in a table.

- Attributes – Each item is composed of one or more attributes. An attribute is a fundamental data element, something that does not need to be broken down any further. For example, an item in a People table contains attributes called PersonID, LastName, FirstName, and so on. For a Department table, an item might have attributes such as DepartmentID, Name, Manager, and so on. Attributes in DynamoDB are similar in many ways to fields or columns in other database systems.

The following diagram shows a table named People with some example items and attributes.

Note the following about the People table:

- Each item in the table has a unique identifier, or primary key, that distinguishes the item from all of the others in the table. In the People table, the primary key consists of one attribute (PersonID).

- Other than the primary key, the People table is schemaless, which means that neither the attributes nor their data types need to be defined beforehand. Each item can have its own distinct attributes.

- Most of the attributes are scalar, which means that they can have only one value. Strings and numbers are common examples of scalars.

- Some of the items have a nested attribute (Address). DynamoDB supports nested attributes up to 32 levels deep.

DynamoDB supports two different kinds of primary keys:

- Partition key – A simple primary key, composed of one attribute known as the partition key. DynamoDB uses the partition key’s value as input to an internal hash function. The output from the hash function determines the partition (physical storage internal to DynamoDB) in which the item will be stored. In a table that has only a partition key, no two items can have the same partition key value. The People table described above is an example of a table with a simple primary key (PersonID). You can access any item in the People table directly by providing the PersonID value for that item.

- Partition key and sort key – Referred to as a composite primary key, this type of key is composed of two attributes. The first attribute is the partition key, and the second attribute is the sort key. DynamoDB uses the partition key value as input to an internal hash function. The output from the hash function determines the partition (physical storage internal to DynamoDB) in which the item will be stored. All items with the same partition key value are stored together, in sorted order by sort key value.
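A toy model can make the two key types more concrete. The sketch below is not how DynamoDB is implemented: the internal hash function is not public, so MD5 is used as a stand-in, and `NUM_PARTITIONS`, `ToyTable`, and the sample keys are all hypothetical.

```python
import hashlib
from collections import defaultdict

NUM_PARTITIONS = 4  # hypothetical; DynamoDB manages partitions itself


def partition_for(partition_key):
    """Map a partition key value to a partition number via a stand-in hash."""
    digest = hashlib.md5(str(partition_key).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % NUM_PARTITIONS


class ToyTable:
    """Toy table with a composite primary key (partition key + sort key)."""

    def __init__(self):
        # partition number -> {(partition key, sort key): item}
        self.partitions = defaultdict(dict)

    def put_item(self, partition_key, sort_key, item):
        # The same composite key always overwrites the same slot.
        bucket = self.partitions[partition_for(partition_key)]
        bucket[(partition_key, sort_key)] = item

    def query(self, partition_key):
        """Return all items sharing a partition key, ordered by sort key."""
        bucket = self.partitions[partition_for(partition_key)]
        matches = [(sk, item) for (pk, sk), item in bucket.items() if pk == partition_key]
        return [item for _, item in sorted(matches, key=lambda pair: pair[0])]


table = ToyTable()
table.put_item("alice", "2023-03-01", {"total": 30})
table.put_item("alice", "2023-01-15", {"total": 10})
table.put_item("bob", "2023-02-01", {"total": 20})

# Items for one partition key come back in sort key order.
print(table.query("alice"))  # [{'total': 10}, {'total': 30}]
```

The point of the sketch is that the partition key alone decides where an item lives, while the sort key only orders items within that partition key value.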

Secondary Indexes – You can create one or more secondary indexes on a table. A secondary index lets you query the data in the table using an alternate key, in addition to queries against the primary key. DynamoDB doesn’t require that you use indexes, but they give your applications more flexibility when querying your data. After you create a secondary index on a table, you can read data from the index in much the same way as you do from the table.

DynamoDB supports two kinds of indexes:

- Global secondary index – An index with a partition key and sort key that can be different from those on the table.

- Local secondary index – An index that has the same partition key as the table, but a different sort key.
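As a rough illustration, the dictionary below has the shape of the parameters accepted by the DynamoDB `CreateTable` operation (for example through boto3's `create_table`). The table name `Orders`, the attribute names, and the index names are hypothetical and chosen only for this sketch; nothing is sent to AWS.

```python
def build_orders_table_definition():
    """Return CreateTable-style parameters for a hypothetical Orders table."""
    return {
        "TableName": "Orders",
        "BillingMode": "PAY_PER_REQUEST",
        # Every attribute used in any key schema must be declared here.
        "AttributeDefinitions": [
            {"AttributeName": "CustomerID", "AttributeType": "S"},
            {"AttributeName": "OrderDate", "AttributeType": "S"},
            {"AttributeName": "OrderStatus", "AttributeType": "S"},
            {"AttributeName": "ProductID", "AttributeType": "S"},
        ],
        # Table primary key: partition key (HASH) plus sort key (RANGE).
        "KeySchema": [
            {"AttributeName": "CustomerID", "KeyType": "HASH"},
            {"AttributeName": "OrderDate", "KeyType": "RANGE"},
        ],
        # Local secondary index: same partition key, different sort key.
        "LocalSecondaryIndexes": [
            {
                "IndexName": "ByCustomerAndStatus",
                "KeySchema": [
                    {"AttributeName": "CustomerID", "KeyType": "HASH"},
                    {"AttributeName": "OrderStatus", "KeyType": "RANGE"},
                ],
                "Projection": {"ProjectionType": "ALL"},
            }
        ],
        # Global secondary index: partition key can differ from the table's.
        "GlobalSecondaryIndexes": [
            {
                "IndexName": "ByProduct",
                "KeySchema": [
                    {"AttributeName": "ProductID", "KeyType": "HASH"},
                    {"AttributeName": "OrderDate", "KeyType": "RANGE"},
                ],
                "Projection": {"ProjectionType": "ALL"},
            }
        ],
    }


def partition_key_of(key_schema):
    """Return the attribute name marked as the partition (HASH) key."""
    return next(k["AttributeName"] for k in key_schema if k["KeyType"] == "HASH")


definition = build_orders_table_definition()
table_pk = partition_key_of(definition["KeySchema"])
for lsi in definition["LocalSecondaryIndexes"]:
    # A local secondary index must reuse the table's partition key.
    assert partition_key_of(lsi["KeySchema"]) == table_pk

# With boto3 installed and credentials configured, the call would look like:
# boto3.client("dynamodb").create_table(**definition)
```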

DynamoDB supports eventually consistent and strongly consistent reads.

- Eventually Consistent Reads – When you read data from a DynamoDB table, the response might not reflect the results of a recently completed write operation. The response might include some stale data. If you repeat your read request after a short time, the response should return the latest data.

- Strongly Consistent Reads – When you request a strongly consistent read, DynamoDB returns a response with the most up-to-date data, reflecting the updates from all prior write operations that were successful. A strongly consistent read might not be available if there is a network delay or outage. Strongly consistent reads are not supported on global secondary indexes (GSIs).
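The difference between the two read modes can be sketched with a toy store that keeps a leader copy and a lagging replica. This is an assumption-laden model for intuition only; `ToyReplicatedStore` and its methods are hypothetical and do not describe DynamoDB internals. In the real API, read operations such as `GetItem` accept a `ConsistentRead` parameter to request a strongly consistent read.

```python
class ToyReplicatedStore:
    """Toy model: writes land on a leader and reach the replica later."""

    def __init__(self):
        self.leader = {}
        self.replica = {}
        self.pending = []  # writes not yet copied to the replica

    def put(self, key, value):
        self.leader[key] = value
        self.pending.append((key, value))

    def replicate(self):
        """Copy all pending writes to the replica (normally happens shortly after a write)."""
        for key, value in self.pending:
            self.replica[key] = value
        self.pending.clear()

    def get(self, key, consistent_read=False):
        # Strongly consistent reads go to the leader; eventually consistent
        # reads may be served from a replica that is slightly behind.
        source = self.leader if consistent_read else self.replica
        return source.get(key)


store = ToyReplicatedStore()
store.put("person#1", "v1")
store.replicate()
store.put("person#1", "v2")  # not yet replicated

print(store.get("person#1"))                        # v1 (stale)
print(store.get("person#1", consistent_read=True))  # v2
store.replicate()
print(store.get("person#1"))                        # v2 after replication catches up
```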

AWS Data Access – Amazon ElastiCache

Amazon ElastiCache makes it easy to set up, manage, and scale distributed in-memory cache environments in the AWS Cloud. It provides a high performance, resizable, and cost-effective in-memory cache, while removing complexity associated with deploying and managing a distributed cache environment. ElastiCache works with both the Redis and Memcached engines.

AWS and Redis

Redis, which stands for Remote Dictionary Server, is a fast, open-source, in-memory key-value data store for use as a database, cache, message broker, and queue. The project started when Salvatore Sanfilippo, the original developer of Redis, was trying to improve the scalability of his Italian startup. Redis now delivers sub-millisecond response times enabling millions of requests per second for real-time applications in Gaming, Ad-Tech, Financial Services, Healthcare, and IoT. Redis is a popular choice for caching, session management, gaming, leaderboards, real-time analytics, geospatial, ride-hailing, chat/messaging, media streaming, and pub/sub apps.

All Redis data resides in-memory, in contrast to databases that store data on disk or SSDs. By eliminating the need to access disks, in-memory data stores such as Redis avoid seek time delays and can access data in microseconds. Redis features versatile data structures, high availability, geospatial, Lua scripting, transactions, on-disk persistence, and cluster support making it simpler to build real-time internet scale apps.

Redis has a vast variety of data structures to meet your application needs. Redis data types include:

- Strings – text or binary data up to 512 MB in size

- Lists – a collection of Strings in the order they were added

- Sets – an unordered collection of strings with the ability to intersect, union, and diff other Set types

- Sorted Sets – Sets ordered by a value

- Hashes – a data structure for storing a list of fields and values

- Bitmaps – a data type that offers bit level operations

- HyperLogLogs – a probabilistic data structure to estimate the unique items in a data set
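For readers more familiar with Python than Redis, several of these types have rough in-process analogues. The sketch below uses only built-in Python types to show the behavior described in the list; it does not talk to Redis, and the variable names and values are made up.

```python
# List: elements stay in the order they were added.
recent_pages = []
for page in ["home", "search", "cart"]:
    recent_pages.append(page)

# Set: unordered, unique members, with intersect / union / diff.
online = {"alice", "bob", "carol"}
premium = {"bob", "dave"}
both = online & premium       # intersection
either = online | premium     # union
only_online = online - premium  # difference

# Hash: a mapping of fields to values for one object.
user = {"name": "Alice", "plan": "free"}
user["plan"] = "premium"

# Sorted set: members ordered by a score (here, highest score first).
scores = {"alice": 120, "bob": 95, "carol": 150}
leaderboard = sorted(scores, key=scores.get, reverse=True)

print(recent_pages)   # ['home', 'search', 'cart']
print(both)           # {'bob'}
print(leaderboard)    # ['carol', 'alice', 'bob']
```

In Redis these structures live on the server and are shared by all clients, which is what makes them useful for leaderboards, sessions, and similar shared state.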

A node is the smallest building block of an ElastiCache deployment. A node can exist in isolation from or in some relationship to other nodes.

A node is a fixed-size chunk of secure, network-attached RAM. Each node runs an instance of the engine and version that was chosen when you created your cluster. If necessary, you can scale the nodes in a cluster up or down to a different instance type.

A Redis shard (called a node group in the API and CLI) is a grouping of one to six related nodes. A Redis (cluster mode disabled) cluster always has one shard. A Redis (cluster mode enabled) cluster can have 1–90 shards. A multiple-node shard implements replication by having one read/write primary node and 1–5 replica nodes. A Redis cluster is a logical grouping of one or more ElastiCache for Redis shards. Data is partitioned across the shards in a Redis (cluster mode enabled) cluster.
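To illustrate how data can be partitioned across shards: open-source Redis Cluster maps each key to one of 16,384 hash slots using CRC16 of the key modulo 16384, and each shard owns a subset of slots. The sketch below reproduces that key-to-slot step with Python's standard library. The slot-to-shard step is an assumption made for illustration (equal contiguous ranges); a real cluster may distribute slots differently, and hash tags (`{...}` in key names) are ignored here.

```python
import binascii

HASH_SLOTS = 16384  # number of hash slots in Redis Cluster


def key_hash_slot(key):
    """Return CRC16 (XMODEM variant) of the key, modulo 16384.

    Hash tags are not handled in this sketch.
    """
    return binascii.crc_hqx(key.encode("utf-8"), 0) % HASH_SLOTS


def shard_for_slot(slot, num_shards):
    """Assume slots are split into equal contiguous ranges, one per shard."""
    slots_per_shard = -(-HASH_SLOTS // num_shards)  # ceiling division
    return slot // slots_per_shard


num_shards = 3  # hypothetical cluster size
for key in ["user:1001", "user:1002", "session:abc"]:
    slot = key_hash_slot(key)
    print(key, "-> slot", slot, "-> shard", shard_for_slot(slot, num_shards))
```

Because the slot depends only on the key, every client computes the same placement without coordinating with the others.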

Many ElastiCache operations are targeted at clusters:

- Creating a cluster

- Modifying a cluster

- Taking snapshots of a cluster (all versions of Redis)

- Deleting a cluster

- Viewing the elements in a cluster

- Adding or removing cost allocation tags to and from a cluster

AWS and Memcached

ElastiCache is a web service that makes it easy to set up, manage, and scale a distributed in-memory data store or cache environment in the cloud. It provides a high-performance, scalable, and cost-effective caching solution, while removing the complexity associated with deploying and managing a distributed cache environment.

A node is the smallest building block of an ElastiCache deployment. A node can exist in isolation from or in some relationship to other nodes.

A node is a fixed-size chunk of secure, network-attached RAM. Each node runs an instance of Memcached. If necessary, you can scale the nodes in a cluster up or down to a different instance type.

The Memcached engine supports Auto Discovery. Auto Discovery is the ability for client programs to automatically identify all of the nodes in a cache cluster, and to initiate and maintain connections to all of these nodes. With Auto Discovery, your application doesn’t need to manually connect to individual nodes. Instead, your application connects to a configuration endpoint. The configuration endpoint DNS entry contains the CNAME entries for each of the cache node endpoints.
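A client that implements Auto Discovery has to turn the configuration endpoint's response into a list of nodes. The sketch below parses a response body under an assumed layout: a version number on the first line, then a space-separated list of nodes written as `hostname|ip|port`. Treat that layout, the function name `parse_cluster_config`, and the sample hostnames as assumptions to verify against the ElastiCache documentation.

```python
def parse_cluster_config(payload):
    """Parse a cluster configuration body into (version, nodes).

    Assumed layout: line 1 is a config version number, line 2 is a
    space-separated list of nodes, each written as hostname|ip|port.
    """
    lines = [line.strip() for line in payload.splitlines() if line.strip()]
    version = int(lines[0])
    nodes = []
    for entry in lines[1].split():
        hostname, ip, port = entry.split("|")
        nodes.append({"hostname": hostname, "ip": ip, "port": int(port)})
    return version, nodes


# Hypothetical response body for a two-node cluster.
sample = (
    "12\n"
    "node-0001.example.internal|10.0.0.11|11211 "
    "node-0002.example.internal|10.0.0.12|11211\n"
)

version, nodes = parse_cluster_config(sample)
print("config version:", version)
for node in nodes:
    print(node["hostname"], node["ip"], node["port"])
```

A client would typically re-fetch this configuration periodically and reconnect when the version number changes, so that added or removed nodes are picked up without redeploying the application.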

Amazon ElastiCache for Memcached is available in multiple AWS Regions around the world. Thus, you can launch ElastiCache clusters in the locations that meet your business requirements. For example, you can launch in the AWS Region closest to your customers or to meet certain legal requirements.

By default, the AWS SDKs, AWS CLI, ElastiCache API, and ElastiCache console reference the US West (Oregon) Region. As ElastiCache expands availability to new AWS Regions, new endpoints for these AWS Regions are also available to use in your HTTP requests, the AWS SDKs, AWS CLI, and ElastiCache console.

Choose Memcached if the following apply for you:

- You need the simplest model possible.

- You need to run large nodes with multiple cores or threads.

- You need the ability to scale out and in, adding and removing nodes as demand on your system increases and decreases.

- You need to cache objects, such as the results of database queries.
