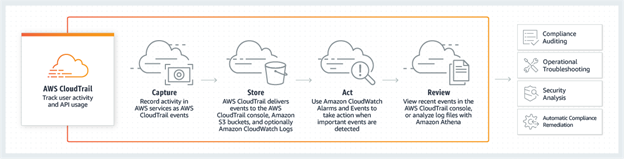

- It is a web service that records API activity in your AWS account.

- It is enabled by default when an AWS account is created.

- All activity occurring in your AWS account is recorded as a CloudTrail event.

- Users can view, search, and download the past 90 days of activity from the Event history view.

- It logs information on:

  - who made the request

  - the services used

  - the actions performed

  - parameters for the actions

  - the response elements returned by the AWS service.

- It can store logs in a specified log group (in CloudWatch Logs).

- Logs provide specific information on what occurred in your AWS account.

- It focuses on the AWS API calls made in your AWS account.

- It helps in meeting compliance and regulatory standards.

- Usually delivers an event within 15 minutes of the API call.

- It helps you enable governance, compliance, and operational and risk auditing.

- CloudTrail records actions taken by a user, role, or AWS service.

- CloudTrail records events for actions taken in the:

  - AWS Management Console

  - AWS Command Line Interface

  - AWS SDKs and APIs.
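
The kinds of information listed above map onto fields of a CloudTrail event record. The following is a minimal sketch of pulling them out of one record. The field names (`userIdentity`, `eventSource`, `eventName`, `requestParameters`, `responseElements`) follow the CloudTrail record format; the sample values and the `summarize_event` helper are hypothetical.

```python
import json

# Hypothetical sample record: the field names follow the CloudTrail record
# format, but every value here is made up for illustration.
SAMPLE_EVENT = json.loads("""
{
  "eventTime": "2024-01-15T10:30:00Z",
  "eventSource": "ec2.amazonaws.com",
  "eventName": "TerminateInstances",
  "awsRegion": "us-east-1",
  "userIdentity": {"type": "IAMUser", "userName": "alice"},
  "requestParameters": {"instancesSet": {"items": [{"instanceId": "i-0123456789abcdef0"}]}},
  "responseElements": null
}
""")


def summarize_event(event):
    """Map one CloudTrail record onto the who / service / action view."""
    identity = event.get("userIdentity") or {}
    return {
        # Fall back to the ARN or identity type when no user name is present.
        "who": identity.get("userName") or identity.get("arn") or identity.get("type"),
        "service": event.get("eventSource"),
        "action": event.get("eventName"),
        "parameters": event.get("requestParameters"),
        "response": event.get("responseElements"),
    }


summary = summarize_event(SAMPLE_EVENT)
print(summary["who"], summary["service"], summary["action"])
# prints: alice ec2.amazonaws.com TerminateInstances
```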

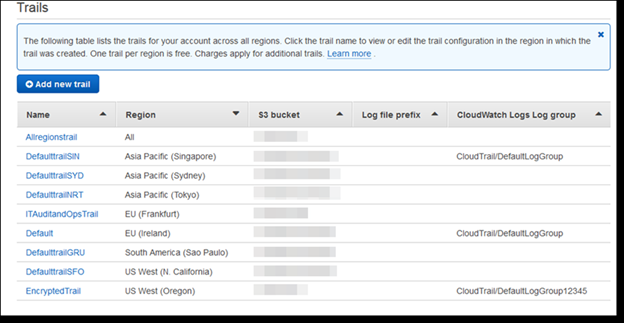

- A trail is a configuration that delivers event details to a specified S3 bucket.

- A trail is useful for storing and analyzing events and for tracking changes to AWS resources.

- Create a trail with the:

  - CloudTrail console

  - AWS CLI

  - CloudTrail API

- Types of trails:

  - A trail that applies to all regions – records events in each region. This is the default when you create a trail in the console.

  - A trail that applies to one region – records the events in that region only. This is the default when you create a trail with the AWS CLI or CloudTrail API.
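
As a rough sketch of the difference between the two trail types, the helper below builds the request parameters for the CloudTrail CreateTrail API. `Name`, `S3BucketName`, and `IsMultiRegionTrail` are CreateTrail parameters; the helper name, trail name, and bucket name are hypothetical, and nothing here calls AWS.

```python
def build_create_trail_params(trail_name, bucket_name, all_regions=False):
    """Return keyword arguments for a CreateTrail request.

    all_regions defaults to False to mirror the single-region default
    of the AWS CLI and CloudTrail API described above.
    """
    return {
        "Name": trail_name,
        "S3BucketName": bucket_name,
        "IsMultiRegionTrail": all_regions,
    }


single_region = build_create_trail_params("example-trail", "example-log-bucket")
multi_region = build_create_trail_params(
    "example-trail", "example-log-bucket", all_regions=True
)
print(single_region["IsMultiRegionTrail"], multi_region["IsMultiRegionTrail"])
# prints: False True

# Assuming boto3 is installed and credentials are configured, the dict
# could be passed on as: boto3.client("cloudtrail").create_trail(**multi_region)
```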

CloudTrail Events

Data Events

Data events give details of the operations performed on or within an AWS resource; they are also known as data plane operations. They are often high-volume activities.

Example data events include:

- Amazon S3 object-level API activity (for example, GetObject, DeleteObject, and PutObject API operations)

- AWS Lambda function execution activity (the Invoke API)

Data events are disabled by default when you create a trail. To record data events, explicitly add to the trail the supported resources or resource types whose activity you want to collect.
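
Adding a resource for data event collection is done through an event selector on the trail. The sketch below builds one selector for S3 object-level activity, in the shape accepted by the CloudTrail PutEventSelectors API (`ReadWriteType`, `IncludeManagementEvents`, `DataResources`). The helper name and bucket name are hypothetical, and the code only constructs the structure.

```python
def s3_data_event_selector(bucket_name, read_write_type="All"):
    """Build an event selector that records S3 object-level data events."""
    if read_write_type not in ("ReadOnly", "WriteOnly", "All"):
        raise ValueError(f"unsupported ReadWriteType: {read_write_type}")
    return {
        "ReadWriteType": read_write_type,
        "IncludeManagementEvents": True,
        "DataResources": [
            {
                "Type": "AWS::S3::Object",
                # A bucket ARN with a trailing slash covers every object in it.
                "Values": [f"arn:aws:s3:::{bucket_name}/"],
            }
        ],
    }


selector = s3_data_event_selector("example-bucket", "WriteOnly")
print(selector["DataResources"][0]["Values"])
# prints: ['arn:aws:s3:::example-bucket/']

# Assuming boto3 and an existing trail, this could be applied with:
#   boto3.client("cloudtrail").put_event_selectors(
#       TrailName="example-trail", EventSelectors=[selector])
```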

Management Events

Management events provide insight into management operations that are performed on resources in your AWS account. These are also known as control plane operations. Example management events include:

- Configuring security (for example, IAM AttachRolePolicy API operations)

- Registering devices (for example, Amazon EC2 CreateDefaultVpc API operations)

- Configuring rules for routing data (for example, Amazon EC2 CreateSubnet API operations)

- Setting up logging (for example, AWS CloudTrail CreateTrail API operations)

Management events can also include non-API events that occur in your account. For example, when a user signs in to your account, CloudTrail logs the ConsoleLogin event.

Read-only and Write-only Events

When you configure a trail to log data and management events, you can specify whether you want read-only events, write-only events, both, or none.

- Read-only – Read-only events include API operations that read resources, but don’t make changes. For example, read-only events include the Amazon EC2 DescribeSecurityGroups and DescribeSubnets API operations. These operations return only information about Amazon EC2 resources and don’t change configurations.

- Write-only – Write-only events include API operations that modify (or might modify) resources. For example, the Amazon EC2 RunInstances and TerminateInstances API operations modify instances.

- All – the trail logs both read-only and write-only events.

- None – the trail logs neither read-only nor write-only management events.
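
The four options can be illustrated with a small filter over event records. This is a sketch, not CloudTrail's own logic: it relies on the `readOnly` boolean that CloudTrail records carry, treats a record without that field as a write (an assumption), and uses made-up sample records.

```python
def matches_read_write_type(event, read_write_type):
    """Return True if the event would be kept under the given setting."""
    # Assumption: a record without a readOnly field is treated as a write.
    is_read = bool(event.get("readOnly", False))
    if read_write_type == "All":
        return True
    if read_write_type == "None":
        return False
    if read_write_type == "ReadOnly":
        return is_read
    if read_write_type == "WriteOnly":
        return not is_read
    raise ValueError(f"unknown setting: {read_write_type}")


# Hypothetical records using the EC2 operations named above.
EVENTS = [
    {"eventName": "DescribeSecurityGroups", "readOnly": True},
    {"eventName": "DescribeSubnets", "readOnly": True},
    {"eventName": "RunInstances", "readOnly": False},
    {"eventName": "TerminateInstances", "readOnly": False},
]

for setting in ("ReadOnly", "WriteOnly", "All", "None"):
    kept = [e["eventName"] for e in EVENTS if matches_read_write_type(e, setting)]
    print(setting, kept)
```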

CloudWatch Logs

- Monitor existing system, application and custom logs in real time.

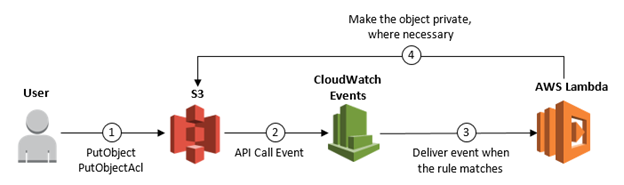

- Send existing logs to CloudWatch, create patterns to look for in the logs, and alert when those patterns are found.

- Free agents are available for Ubuntu, Amazon Linux, and Windows.

- Purpose

  - Monitor logs from EC2 instances in real time (for example, track the number of errors in application logs and send a notification if it exceeds a threshold).

  - Monitor events logged by CloudTrail (API activity such as a manual EC2 instance termination).

  - Archive log data (change the log retention setting so that expired data is deleted automatically).

- Log Events – A log event is a record sent to CloudWatch Logs for storage. Each event is stored with a timestamp and a message.

- Log Streams – A log stream is a sequence of log events that share the same source (for example, Apache access logs from a specific host). Empty log streams older than 2 months may be deleted automatically.

- Log Groups – A log group is a group of log streams that share the same settings for:

  - retention

  - monitoring

  - access control

- Metric Filters – Define how the service extracts metric observations from events and turns them into data points for a CloudWatch metric.

- Retention Settings – Settings for duration to keep events. Automatic deletion of expired logs.

- The duration offered for Log Group Retention ranges from 1 day to 10 years.

- CloudWatch Log Filters – Filter log data pushed to CloudWatch. They do not work on existing log data, only on data pushed after the filter is created, and return only the first 50 results. A metric filter contains: 1. Filter Pattern 2. Metric Name 3. Metric Namespace 4. Metric Value

- Modify the rsyslog configuration (/etc/rsyslog.d/50-default.conf), remove auth on line number 9, and then run sudo service rsyslog restart.

- Real-Time Log Processing – Requires subscription filters and is available for Amazon Kinesis streams, AWS Lambda, and Amazon Kinesis Firehose.

- The aws kinesis command is used to create and describe a stream; describing the stream also shows the stream ARN. Then update the permissions.json file with the ARNs of the stream and the role.
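
The error-count use case under Purpose, and the way a metric filter turns matching log events into a number, can be approximated locally. This is a simplified sketch: it uses plain case-sensitive substring matching in place of the real filter pattern syntax, and the helper names, sample events, and threshold are hypothetical.

```python
def count_matching_events(log_events, term):
    """Count log events whose message contains the term (case-sensitive)."""
    return sum(1 for event in log_events if term in event["message"])


def should_alert(log_events, term, threshold):
    """Return True when the match count exceeds the threshold."""
    return count_matching_events(log_events, term) > threshold


# Each log event is stored with a timestamp (milliseconds) and a message.
LOG_EVENTS = [
    {"timestamp": 1700000000000, "message": "INFO request served"},
    {"timestamp": 1700000001000, "message": "ERROR database timeout"},
    {"timestamp": 1700000002000, "message": "ERROR disk full"},
]

print(count_matching_events(LOG_EVENTS, "ERROR"))      # prints: 2
print(should_alert(LOG_EVENTS, "ERROR", threshold=1))  # prints: True
```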

Advanced tasks with CloudTrail log files

- Create multiple trails per region.

- Monitor CloudTrail log files by sending them to CloudWatch Logs.

- Share log files between accounts.

- Develop log processing applications in Java by using the CloudTrail Processing Library.

- Validate log files to verify that they have not changed after delivery by CloudTrail.

To receive CloudTrail log files from multiple regions

- Sign in to the AWS Management Console and open the CloudTrail console at https://console.aws.amazon.com/cloudtrail/.

- Choose “Trails”, and then select a trail name.

- Click the pencil icon next to “Apply trail to all regions”, and then select “Yes”.

- Choose Save. The original trail is replicated across all AWS regions, and CloudTrail delivers log files from all regions to the specified S3 bucket.

Validating CloudTrail Log File Integrity

- Use CloudTrail log file integrity validation.

- The feature uses SHA-256 for hashing and SHA-256 with RSA for digital signing.

- This makes it computationally infeasible to modify, delete, or forge CloudTrail log files without detection.

- Use the AWS CLI to validate the files.

- With log file integrity validation enabled, CloudTrail creates a hash for every log file it delivers.

- Every hour, CloudTrail also creates a digest file that references the log files for the last hour and contains a hash of each.

- Each digest file is signed using the private key of a public and private key pair.

- After delivery, use the public key to validate the digest file.

- CloudTrail uses a different key pair for each AWS region.

- The digest files are delivered to the same S3 bucket associated with the trail as the CloudTrail log files.

- The digest files are put into a folder separate from the log files.

- Every digest file contains the digital signature of the previous digest file, if one exists.

- The signature for the current digest file is in the metadata properties of the digest file S3 object.
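
The hash comparison at the heart of this scheme can be sketched as follows. This covers only the per-file SHA-256 check, not the RSA signature on the digest file, which the AWS CLI validation handles. It assumes the digest entry stores a hex-encoded SHA-256 of the uncompressed log content under `hashValue`; the helper name, object path, and log content are hypothetical.

```python
import gzip
import hashlib
import json


def log_file_matches_digest(compressed_log, digest_entry):
    """Compare a delivered (gzipped) log file against its digest entry."""
    # Assumption: the hash covers the uncompressed log content.
    content = gzip.decompress(compressed_log)
    return hashlib.sha256(content).hexdigest() == digest_entry["hashValue"]


# Build a made-up log file and the digest entry that would describe it.
raw_log = json.dumps({"Records": [{"eventName": "ConsoleLogin"}]}).encode("utf-8")
delivered = gzip.compress(raw_log)
digest_entry = {
    "s3Object": "AWSLogs/111122223333/CloudTrail/example.json.gz",  # hypothetical
    "hashAlgorithm": "SHA-256",
    "hashValue": hashlib.sha256(raw_log).hexdigest(),
}

print(log_file_matches_digest(delivered, digest_entry))  # prints: True

# Any change to the log content changes the hash, so tampering is detected.
tampered = gzip.compress(raw_log.replace(b"ConsoleLogin", b"DeleteTrail"))
print(log_file_matches_digest(tampered, digest_entry))   # prints: False
```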

Sharing CloudTrail Log Files Between AWS Accounts

The steps are:

- Create an IAM role for each account that you want to share log files with.

- For each of these IAM roles, create an access policy that grants read-only access to the account you want to share the log files with.

- An IAM user in each of these accounts can then programmatically assume the appropriate role and retrieve the log files.
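
The role and access policy from the steps above could look roughly like the documents built below. This is a sketch under assumptions: the account IDs, bucket name, and helper name are hypothetical, the log prefix assumes the usual `AWSLogs/<account-id>/` layout, and the exact actions and resources should be adapted to the bucket in use.

```python
import json


def build_sharing_policies(trusted_account_id, bucket_name, log_owner_account_id):
    """Return (trust_policy, access_policy) for a log-sharing IAM role."""
    # Trust policy: lets principals in the trusted account assume the role.
    trust_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{trusted_account_id}:root"},
                "Action": "sts:AssumeRole",
            }
        ],
    }
    # Access policy: read-only access to the log files in the bucket.
    access_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket_name}/AWSLogs/{log_owner_account_id}/*",
            },
            {
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket_name}",
            },
        ],
    }
    return trust_policy, access_policy


trust, access = build_sharing_policies("444455556666", "example-log-bucket", "111122223333")
print(json.dumps(trust, indent=2))
print(json.dumps(access, indent=2))
```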

CloudTrail Processing Library

- A Java library for processing AWS CloudTrail logs.

- You provide configuration details about your CloudTrail SQS queue and write the code that processes events.

- The CloudTrail Processing Library:

  - polls the SQS queue

  - reads and parses queue messages

  - downloads CloudTrail log files

  - parses events in the log files

  - passes events to your code as Java objects.

- It is scalable and fault-tolerant.

- Handles parallel processing of log files

- Manages network failures like network timeouts or inaccessible resources.
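
To make the last two processing steps concrete, here is a minimal Python sketch of parsing a log file and handing each event to user code. It is not the library's API (the library itself is Java); it assumes the delivered log file is gzip-compressed JSON with a top-level `Records` array, and the function name and sample events are hypothetical. Polling SQS and downloading from S3 are left out.

```python
import gzip
import json


def process_log_file(compressed_log, handle_event):
    """Parse one gzipped log file and pass each event to handle_event.

    Returns the number of events processed.
    """
    document = json.loads(gzip.decompress(compressed_log))
    count = 0
    for record in document.get("Records", []):
        handle_event(record)
        count += 1
    return count


# Made-up log file content standing in for a file downloaded from S3.
sample_file = gzip.compress(
    json.dumps(
        {"Records": [{"eventName": "CreateTrail"}, {"eventName": "ConsoleLogin"}]}
    ).encode("utf-8")
)

seen = []
processed = process_log_file(sample_file, lambda event: seen.append(event["eventName"]))
print(processed, seen)  # prints: 2 ['CreateTrail', 'ConsoleLogin']
```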