CloudFront Performance

- CDN performance is measured in two ways:

- Real User Monitoring (RUM)

- Synthetic monitoring

- RUM is the process of measuring performance while real end users are interacting with a web application.

- In synthetic monitoring, requests are proactively sent by external agents configured to mimic actual web traffic.

- For RUM

- Throughput and latency metrics can measure downloads of the exact object(s) served by an application.

- It measures the actual workload and gives the most accurate view of what end users will experience.

- For availability, RUM reflects configurations specific to the application, such as:

- request retries

- connection/response timeouts

- different error codes (HTTP 4xx/5xx).

- Some applications may consider retries acceptable, while others may never attempt to retry.
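To illustrate how the retry policy changes the availability figure that RUM reports, here is a minimal sketch. The `TIMEOUT` marker, the `availability` function, and the sample data are hypothetical names and values chosen for illustration; they are not part of any CloudFront or RUM vendor API.

```python
from typing import List, Sequence

TIMEOUT = 0  # hypothetical marker: the attempt hit a connection/response timeout


def attempt_failed(status: int, count_4xx: bool) -> bool:
    """Return True if a single attempt counts as a failure under the chosen policy."""
    if status == TIMEOUT or status >= 500:
        return True
    return count_4xx and 400 <= status < 500


def availability(requests: Sequence[List[int]], retries_acceptable: bool,
                 count_4xx: bool = False) -> float:
    """Fraction of user requests considered successful.

    Each request is the ordered list of HTTP status codes from its attempts.
    """
    if not requests:
        raise ValueError("no RUM samples")
    ok = 0
    for attempts in requests:
        # If retries are acceptable, the final attempt decides the outcome;
        # otherwise the first attempt must succeed.
        decisive = attempts[-1] if retries_acceptable else attempts[0]
        if not attempt_failed(decisive, count_4xx):
            ok += 1
    return ok / len(requests)


# Made-up samples: one list of attempt status codes per user request.
samples = [[200], [503, 200], [TIMEOUT, 200], [404], [500, 500]]
print(availability(samples, retries_acceptable=True))
print(availability(samples, retries_acceptable=False))
```

The same raw samples yield different availability numbers depending on whether retries and 4xx responses count against the application, which is why these settings should match the application being measured.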

- For synthetic monitoring

- It simulates web traffic from different geographies.

- There are two forms of testing: backbone and last mile.

- Backbone

- Providers use nodes installed in colocation data centers around the world.

- These servers have direct fiber connectivity to various network providers (both transit providers and internet service providers) such as Verizon, NTT, and AT&T.

- Last mile

- Providers install agents on the computers of real end users,

- or deploy small test nodes connected to the routers of real end users in their home networks.

- These nodes show the performance that end users experience through their ISPs.
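A synthetic agent, whether it runs on a backbone node or a last-mile node, essentially issues timed requests. The following is a minimal sketch of one such probe using only the Python standard library. The function names `http_fetch` and `probe` and the result fields are assumptions made for illustration; commercial monitoring agents work differently in detail.

```python
import time
import urllib.error
import urllib.request
from typing import Callable, Optional


def http_fetch(url: str, timeout: float) -> int:
    """Fetch a URL and return its HTTP status code."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            resp.read()  # download the full body so the timing covers the transfer
            return resp.status
    except urllib.error.HTTPError as exc:
        return exc.code  # 4xx/5xx responses still carry a status code


def probe(url: str, fetch: Callable[[str, float], int] = http_fetch,
          timeout: float = 5.0) -> dict:
    """Run one timed request and record status, error type, and elapsed seconds."""
    start = time.perf_counter()
    status: Optional[int] = None
    error: Optional[str] = None
    try:
        status = fetch(url, timeout)
    except Exception as exc:  # timeouts, DNS failures, refused connections, ...
        error = type(exc).__name__
    elapsed = time.perf_counter() - start
    return {"url": url, "status": status, "error": error, "seconds": elapsed}
```

Passing a different `fetch` callable makes the probe easy to exercise without network access, for example `probe("https://example.com/", fetch=lambda url, timeout: 200)`.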


- Best Practices

- Prefer RUM over synthetic monitoring

- Understand your workload before configuring synthetic tests

- Match your demographic

- Be aware of timeouts

- Test over long periods

- Deep dive to understand anomalies.
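When results are collected over a long period, percentiles are one common way to summarize them and to surface anomalies worth a deep dive. The sketch below uses the nearest-rank percentile definition; the helper names, the sample latencies, and the "three times the median" cutoff are illustrative assumptions, not values recommended by AWS.

```python
import math
from typing import List, Sequence


def percentile(values: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


def anomalies(values: Sequence[float], factor: float = 3.0) -> List[float]:
    """Samples larger than `factor` times the median (an arbitrary, illustrative cutoff)."""
    median = percentile(values, 50)
    return [v for v in values if v > factor * median]


# Made-up latency samples in milliseconds.
latencies_ms = [40, 42, 38, 45, 41, 39, 43, 400, 44, 40]
print(percentile(latencies_ms, 50), percentile(latencies_ms, 90), percentile(latencies_ms, 99))
print(anomalies(latencies_ms))
```

A single slow sample barely moves the median but dominates the high percentiles, which is why short test windows can give a misleading picture.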

- Recommended to use

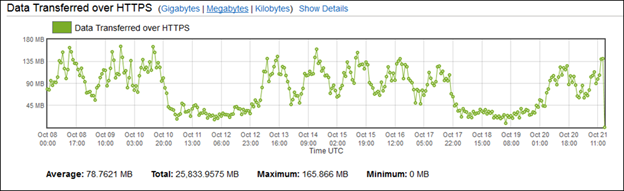

- AWS Billing and Usage Reports for CloudFront

- CloudFront Reports in the Console

- Tracking Configuration Changes with AWS Config

- Configuring and Using Access Logs

- Using AWS CloudTrail to Capture Requests Sent to the CloudFront API

- Monitoring CloudFront and Setting Alarms

- Tagging Amazon CloudFront Distributions
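As an example of setting an alarm, the sketch below builds the parameters for a CloudWatch alarm on a distribution's `5xxErrorRate` metric and passes them to `put_metric_alarm` through boto3. The alarm name, threshold, period, and the distribution ID in the usage note are placeholder assumptions; CloudFront metrics are published in the `AWS/CloudFront` namespace in the US East (N. Virginia) Region.

```python
def error_rate_alarm_params(distribution_id: str, threshold_percent: float = 1.0) -> dict:
    """Build keyword arguments for CloudWatch put_metric_alarm (illustrative values)."""
    return {
        "AlarmName": f"cloudfront-5xx-{distribution_id}",  # hypothetical naming scheme
        "Namespace": "AWS/CloudFront",
        "MetricName": "5xxErrorRate",
        "Dimensions": [
            {"Name": "DistributionId", "Value": distribution_id},
            {"Name": "Region", "Value": "Global"},
        ],
        "Statistic": "Average",
        "Period": 300,            # seconds per evaluation window (assumed)
        "EvaluationPeriods": 3,   # consecutive windows over threshold (assumed)
        "Threshold": threshold_percent,
        "ComparisonOperator": "GreaterThanThreshold",
    }


def create_alarm(distribution_id: str) -> None:
    """Create the alarm; requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the sketch loads without the SDK installed

    cloudwatch = boto3.client("cloudwatch", region_name="us-east-1")
    cloudwatch.put_metric_alarm(**error_rate_alarm_params(distribution_id))
```

Calling `create_alarm("EDFDVBD6EXAMPLE")` with a real distribution ID in place of the placeholder would create the alarm; without an `AlarmActions` entry it only changes state and does not notify anyone.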
