Define Methods of Deploying and Operating in the AWS Cloud

AWS offers multiple options for provisioning your IT infrastructure and deploying your applications. The main principle to remember is AAA: Automate, Automate, Automate.

AWS Elastic Beanstalk

- It is a high-level deployment tool.

- It helps you get an app from your desktop to the web in a matter of minutes.

- It handles the details of your hosting environment, such as:

  - capacity provisioning

  - load balancing

  - scaling

  - application health monitoring

- A platform configuration defines the infrastructure and software stack to be used for a given environment.

- When you deploy your app, Elastic Beanstalk provisions a set of AWS resources, which can include Amazon EC2 instances, alarms, a load balancer, security groups, and more.

Using AWS Elastic Beanstalk

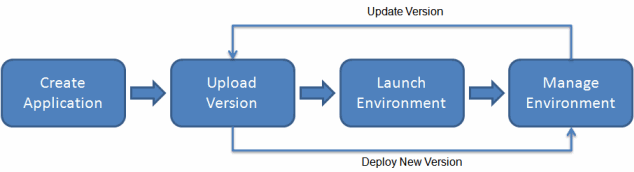

- First, create an application.

- Upload an application version, in the form of an application source bundle (for example, a Java .war file), to Elastic Beanstalk.

- Then, provide some information about the application.

- Elastic Beanstalk automatically launches an environment and creates and configures the AWS resources needed to run your code.

- After your environment is launched, you can manage it and deploy new application versions.

The diagram above illustrates the workflow of Elastic Beanstalk.

After you create and deploy your application, information about it (including metrics, events, and environment status) is available through:

- the AWS Management Console

- the Elastic Beanstalk APIs

- command line interfaces, including the unified AWS CLI
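The create application, upload version, launch environment workflow can also be scripted through the Elastic Beanstalk API. The following is a minimal sketch, assuming the AWS SDK for Python (boto3) and a source bundle that has already been uploaded to Amazon S3. The function names and every application, environment, bucket, key, and solution stack name are hypothetical placeholders. The client is passed in as a parameter so the logic can be exercised without AWS credentials.

```python
from typing import Any


def deploy_to_beanstalk(eb: Any, app: str, version: str, bucket: str, key: str,
                        env: str, solution_stack: str) -> None:
    """Create an application, register a version, and launch an environment.

    `eb` is expected to be boto3.client("elasticbeanstalk"); every name passed
    in is a placeholder (hypothetical), not a value from this guide.
    """
    # 1. Create the application (a logical container for versions and environments).
    eb.create_application(ApplicationName=app)

    # 2. Register an application version that points at a source bundle already
    #    uploaded to S3 (for example, a Java .war file).
    eb.create_application_version(
        ApplicationName=app,
        VersionLabel=version,
        SourceBundle={"S3Bucket": bucket, "S3Key": key},
    )

    # 3. Launch an environment on the chosen platform; Elastic Beanstalk then
    #    provisions the EC2 instances, load balancer, security groups, etc.
    eb.create_environment(
        ApplicationName=app,
        EnvironmentName=env,
        VersionLabel=version,
        SolutionStackName=solution_stack,
    )


def deploy_new_version(eb: Any, env: str, version: str) -> None:
    """Point a running environment at a version registered earlier with
    create_application_version."""
    eb.update_environment(EnvironmentName=env, VersionLabel=version)
```

With credentials configured, you would pass `boto3.client("elasticbeanstalk")` as `eb`; valid solution stack names can be listed with the client's `list_available_solution_stacks()` call.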

AWS CloudFormation

- It is a service that helps you model and set up your AWS resources.

- You spend less time managing those resources and more time focusing on the applications that run in AWS.

- Create a template that describes all the AWS resources that you want (such as Amazon EC2 instances or Amazon RDS DB instances).

- CloudFormation takes care of provisioning and configuring those resources for you.

- Use a simple text file to model and provision, in an automated and secure manner, all the resources needed for your applications across all regions and accounts.

- This file serves as the single source of truth for your cloud environment.

- It is available at no additional charge; you pay only for the AWS resources needed to run your applications.
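To make the "simple text file" idea concrete, here is a minimal template that declares one parameter and one Amazon S3 bucket. It is written as a Python dictionary and serialized with the standard `json` module only so the example stays runnable; in practice you would usually write the JSON or YAML file by hand. The logical names `BucketNameParam` and `AppBucket` are hypothetical.

```python
import json

# Logical names ("BucketNameParam", "AppBucket") are hypothetical.
template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Description": "Minimal example: one S3 bucket whose name is a parameter",
    "Parameters": {
        "BucketNameParam": {
            "Type": "String",
            "Description": "Globally unique name for the bucket",
        }
    },
    "Resources": {
        "AppBucket": {
            "Type": "AWS::S3::Bucket",
            # Ref on a parameter resolves to the value supplied at stack creation.
            "Properties": {"BucketName": {"Ref": "BucketNameParam"}},
        }
    },
    "Outputs": {
        # Fn::GetAtt reads an attribute of a resource after it is created.
        "BucketArn": {"Value": {"Fn::GetAtt": ["AppBucket", "Arn"]}}
    },
}

# This JSON text is what you would save locally or upload to an S3 bucket.
template_body = json.dumps(template, indent=2)

if __name__ == "__main__":
    print(template_body)
```

Because the whole bucket definition lives in this one file, checking it into version control gives you the "single source of truth" described above.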

How AWS CloudFormation Works

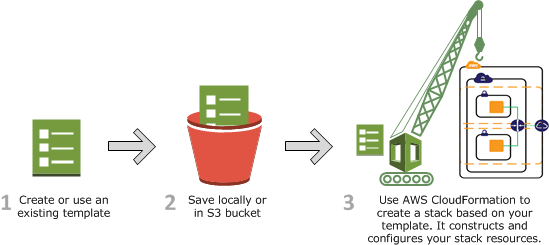

- Design an AWS CloudFormation template (a JSON- or YAML-formatted document) in AWS CloudFormation Designer, write one in a text editor, or use a provided sample template. The template describes the resources you want and their settings.

- Save the template locally or in an S3 bucket. If you wrote the template yourself, you can save it with any file extension, such as .json, .yaml, or .txt.

- Create an AWS CloudFormation stack by specifying the location of your template file, such as a path on your local computer or an Amazon S3 URL.

- If the template contains parameters, you can specify input values when you create the stack. Parameters enable you to pass in values to your template so that you can customize your resources each time you create a stack.
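Creating a stack from a template stored in S3 and passing parameter values can be sketched with boto3's CloudFormation client, assuming credentials are configured. The stack name, template URL, and parameter values in the usage below are hypothetical, and the helper functions are illustrative, not part of any AWS SDK. The client is injected so the logic runs without AWS.

```python
from typing import Any, Dict, List


def to_cfn_parameters(values: Dict[str, str]) -> List[Dict[str, str]]:
    """Convert {"Key": "value"} into the list shape CloudFormation expects."""
    return [{"ParameterKey": k, "ParameterValue": v} for k, v in values.items()]


def create_stack_from_s3(cfn: Any, stack_name: str, template_url: str,
                         parameters: Dict[str, str]) -> Any:
    """Create a stack from a template stored in S3.

    `cfn` is expected to be boto3.client("cloudformation"). For a template on
    your local disk you would pass TemplateBody=<file contents> instead of
    TemplateURL.
    """
    return cfn.create_stack(
        StackName=stack_name,
        TemplateURL=template_url,
        Parameters=to_cfn_parameters(parameters),
    )
```

To block until provisioning finishes, you could then call `cfn.get_waiter("stack_create_complete").wait(StackName=stack_name)`. Supplying different parameter values on each call is what lets one template produce differently customized stacks.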

AWS OpsWorks

- It is a configuration management service.

- It helps you configure and operate applications in cloud environments by using Chef or Puppet.

- It comes in three variants: AWS OpsWorks Stacks, AWS OpsWorks for Chef Automate, and AWS OpsWorks for Puppet Enterprise. The first two are described below.

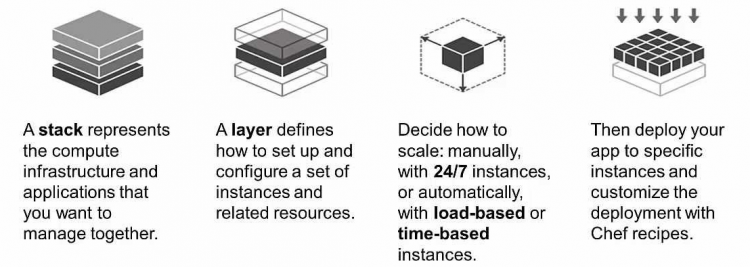

AWS OpsWorks Stacks

- It provides a simple and flexible way to create and manage stacks and applications.

- It lets you deploy and monitor applications in your stacks.

- It does not require or create Chef servers; instead, it performs some of the work of a Chef server for you.

- It monitors instance health and, when necessary, provisions new instances for you by using auto healing and auto scaling.

AWS OpsWorks for Chef Automate

- Create AWS-managed Chef servers that include Chef Automate premium features

- Use the Chef DK and other Chef tooling to manage them

- Manages both Chef Automate Server and Chef Server software on a single instance.

AWS CodeCommit

- It is a fully managed source control service.

- It makes it easy for companies to host secure and highly scalable private Git repositories.

- It integrates with AWS CodePipeline and AWS CodeDeploy to streamline your development and release process.


How AWS CodeCommit Works

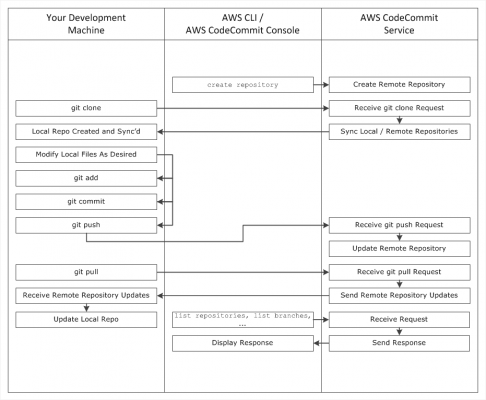

- Use the AWS CLI or the CodeCommit console to create a CodeCommit repository.

- From your development machine, use Git to run git clone, specifying the name of the CodeCommit repository. This creates a local repo that connects to the CodeCommit repository.

- Use the local repo on your development machine to modify (add, edit, and delete) files, and then run git add to stage the modified files locally. Run git commit to commit the files locally, and then run git push to send the files to the CodeCommit repository.

- Download changes from other users. Run git pull to synchronize the files in the CodeCommit repository with your local repo. This ensures you’re working with the latest version of the files.
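The steps above boil down to a short command sequence. The Python sketch below only assembles those commands as argument lists (it does not run them), assuming HTTPS access to CodeCommit is already set up with Git credentials or a credential helper. The region, repository name, commit message, and the two helper functions are hypothetical.

```python
from typing import List


def codecommit_https_url(region: str, repo: str) -> str:
    """Build the HTTPS clone URL for a CodeCommit repository."""
    return f"https://git-codecommit.{region}.amazonaws.com/v1/repos/{repo}"


def workflow_commands(region: str, repo: str, message: str) -> List[List[str]]:
    """Return the create / clone / add / commit / push / pull sequence.

    Everything after `git clone` must run inside the cloned directory,
    which Git names after the repository by default.
    """
    url = codecommit_https_url(region, repo)
    return [
        ["aws", "codecommit", "create-repository", "--repository-name", repo],
        ["git", "clone", url],          # creates the local repo
        ["git", "add", "-A"],           # stage added, edited, and deleted files
        ["git", "commit", "-m", message],
        ["git", "push"],                # send commits to CodeCommit
        ["git", "pull"],                # fetch and merge other users' changes
    ]


if __name__ == "__main__":
    for cmd in workflow_commands("us-east-2", "MyDemoRepo", "First commit"):
        print(" ".join(cmd))
```

Each list could be handed to `subprocess.run(cmd, check=True)` with the appropriate working directory if you wanted to execute the sequence.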

AWS CodeDeploy

- It is a service that automates software deployments to a variety of compute services, including Amazon EC2 instances, on-premises servers, AWS Lambda functions, and Amazon ECS services.

- Makes it easier for you to rapidly release new features

- Helps you avoid downtime during application deployment

- Handles the complexity of updating your applications.

CodeDeploy Deployment Types

CodeDeploy provides two deployment type options:

- In-place deployment: The application on each instance in the deployment group is stopped, the latest application revision is installed, and the new version of the application is started and validated.

- Blue/green deployment: how it works depends on the compute platform.

  - Blue/green on an EC2/On-Premises compute platform: The instances in a deployment group (the original environment) are replaced by a different set of instances (the replacement environment).

  - Blue/green on an AWS Lambda compute platform: Traffic is shifted from your current serverless environment to one with your updated Lambda function versions.

  - Blue/green on an Amazon ECS compute platform: Traffic is shifted from the task set with the original version of a containerized application in an Amazon ECS service to a replacement task set in the same service.
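On the Lambda platform, how quickly traffic shifts is set by a deployment configuration. CodeDeploy ships predefined ones such as CodeDeployDefault.LambdaLinear10PercentEvery1Minute (equal 10 percent increments, one minute apart) and CodeDeployDefault.LambdaCanary10Percent5Minutes (10 percent first, the remainder five minutes later). The Python sketch below only tabulates the resulting traffic percentages, under the simplifying assumption that the first increment happens at minute 0; the helper names are hypothetical and this is not how CodeDeploy itself is implemented.

```python
from typing import List, Tuple


def linear_schedule(step_percent: int, interval_min: int) -> List[Tuple[int, int]]:
    """(minute, % of traffic on the new version) for equal linear increments."""
    schedule = []
    minute, shifted = 0, 0
    while shifted < 100:
        shifted = min(100, shifted + step_percent)  # never exceed 100 %
        schedule.append((minute, shifted))
        minute += interval_min
    return schedule


def canary_schedule(canary_percent: int, wait_min: int) -> List[Tuple[int, int]]:
    """Two increments: a small canary first, then all traffic after the wait."""
    return [(0, canary_percent), (wait_min, 100)]


if __name__ == "__main__":
    print(linear_schedule(10, 1))
    print(canary_schedule(10, 5))
```

The canary variant exposes only a small slice of users to the new version while you watch alarms; the linear variant spreads the risk more evenly over time.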

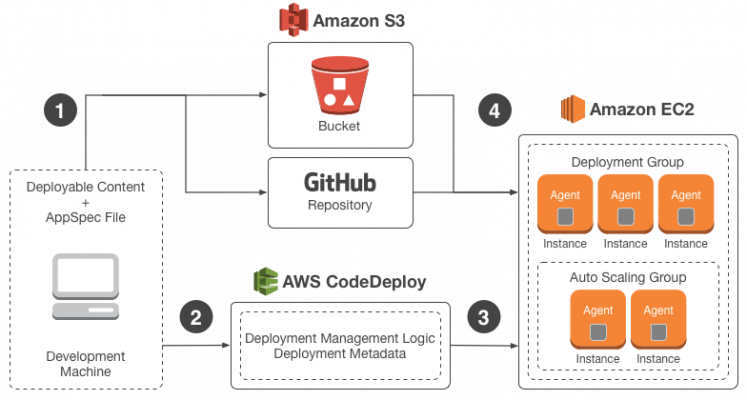

Sample CodeDeploy in-place deployment

- First, you create deployable content on your local development machine or similar environment, and then you add an application specification file (AppSpec file). The AppSpec file is unique to CodeDeploy. It defines the deployment actions you want CodeDeploy to execute. You bundle your deployable content and the AppSpec file into an archive file, and then upload it to an Amazon S3 bucket or a GitHub repository. This archive file is called an application revision (or simply a revision).

- Next, you provide CodeDeploy with information about your deployment, such as which Amazon S3 bucket or GitHub repository to pull the revision from and to which set of Amazon EC2 instances to deploy its contents. CodeDeploy calls a set of Amazon EC2 instances a deployment group. A deployment group contains individually tagged Amazon EC2 instances, Amazon EC2 instances in Amazon EC2 Auto Scaling groups, or both.

- Each time you successfully upload a new application revision that you want to deploy to the deployment group, that bundle is set as the target revision for the deployment group. In other words, the application revision that is currently targeted for deployment is the target revision. This is also the revision that is pulled for automatic deployments.

- Next, the CodeDeploy agent on each instance polls CodeDeploy to determine what and when to pull from the specified Amazon S3 bucket or GitHub repository.

- Finally, the CodeDeploy agent on each instance pulls the target revision from the Amazon S3 bucket or GitHub repository and, using the instructions in the AppSpec file, deploys the contents to the instance.
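The two artifacts in this flow, the AppSpec file and the request that starts a deployment, can be sketched in Python. This is a minimal sketch, assuming a Linux EC2 target, a zipped revision already uploaded to S3, and boto3's CodeDeploy client; the destination path, script paths, application, group, bucket, and key names are hypothetical. The AppSpec is rendered as plain text so no YAML library is needed.

```python
from typing import Any, Dict

# Scriptable in-place lifecycle hooks for EC2/On-Premises, in the order
# CodeDeploy runs them (DownloadBundle and Install are reserved for the agent).
HOOK_ORDER = ["ApplicationStop", "BeforeInstall", "AfterInstall",
              "ApplicationStart", "ValidateService"]


def build_appspec(destination: str, hooks: Dict[str, str], timeout: int = 300) -> str:
    """Render a minimal appspec.yml that copies the whole bundle to
    `destination` and runs one (hypothetical) script per hook."""
    unknown = set(hooks) - set(HOOK_ORDER)
    if unknown:
        raise ValueError(f"not a scriptable hook: {sorted(unknown)}")
    lines = [
        "version: 0.0",
        "os: linux",
        "files:",
        "  - source: /",
        f"    destination: {destination}",
    ]
    if hooks:
        lines.append("hooks:")
        for hook in HOOK_ORDER:  # emit hooks in lifecycle order
            if hook in hooks:
                lines += [
                    f"  {hook}:",
                    f"    - location: {hooks[hook]}",
                    f"      timeout: {timeout}",
                    "      runas: root",
                ]
    return "\n".join(lines) + "\n"


def deploy_revision(codedeploy: Any, app: str, group: str, bucket: str, key: str) -> Any:
    """Start a deployment of a zipped revision stored in S3 to a deployment
    group. `codedeploy` is expected to be boto3.client("codedeploy")."""
    return codedeploy.create_deployment(
        applicationName=app,
        deploymentGroupName=group,
        revision={
            "revisionType": "S3",
            "s3Location": {"bucket": bucket, "key": key, "bundleType": "zip"},
        },
    )
```

The rendered text would be saved as appspec.yml at the root of the archive, next to the deployable content, before the archive is uploaded to S3.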

AWS CodePipeline

- It is a continuous integration and continuous delivery service

- It is used for fast and reliable application and infrastructure updates.

- It builds, tests, and deploys your code every time there is a code change, based on the release process models you define.

- It automates the steps required to release your software changes continuously.

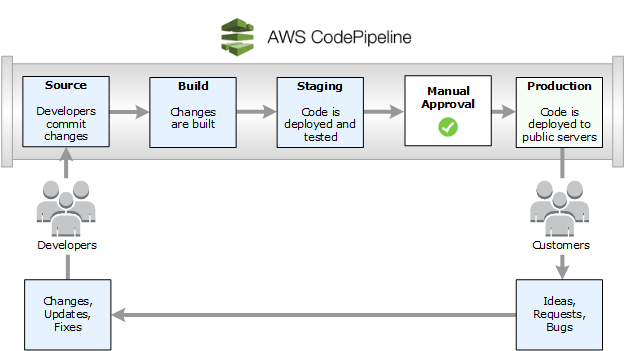

Example release process using CodePipeline

In this example,

- When developers commit changes to a source repository, CodePipeline automatically detects the changes.

- Those changes are built, and if any tests are configured, those tests are run.

- After the tests are complete, the built code is deployed to staging servers for testing.

- From the staging server, CodePipeline runs additional tests, such as integration or load tests.

- After those tests complete successfully and a manual approval action that was added to the pipeline is approved, CodePipeline deploys the tested and approved code to production instances.
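The release process above is essentially an ordered list of stages in which a failure, or a rejected approval, stops everything after it. The Python sketch below models only that control flow. The stage names and the `run_release` and `example_pipeline` helpers are hypothetical and are not part of the CodePipeline API; in CodePipeline itself, stages and actions (including Approval actions) are declared in the pipeline definition.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_release(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first one that fails.

    Each stage is (name, action); the action returns True on success.
    Returns the names of the stages that completed.
    """
    completed = []
    for name, action in stages:
        if not action():
            print(f"Stage {name!r} failed; later stages do not run")
            break
        completed.append(name)
    return completed


def example_pipeline(tests_pass: bool, approved: bool) -> List[Stage]:
    """Hypothetical stages mirroring the example release process."""
    return [
        ("Source", lambda: True),            # change detected in the repository
        ("Build", lambda: True),             # build and run configured tests
        ("DeployStaging", lambda: True),     # deploy to staging servers
        ("IntegrationTests", lambda: tests_pass),
        ("ManualApproval", lambda: approved),
        ("DeployProduction", lambda: True),  # only reached if everything passed
    ]


if __name__ == "__main__":
    print(run_release(example_pipeline(tests_pass=True, approved=False)))
```

Running it with `approved=False` shows that production is never touched until a person approves the change.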

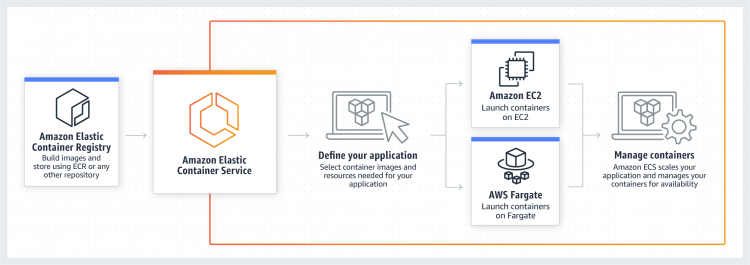

Amazon Elastic Container Service (Amazon ECS, formerly Amazon EC2 Container Service)

- It is a highly scalable, high performance container management service that supports Docker containers

- Allows you to easily run applications on a managed cluster of Amazon EC2 instances.

- Eliminates the need for you to install, operate, and scale your own cluster management infrastructure.

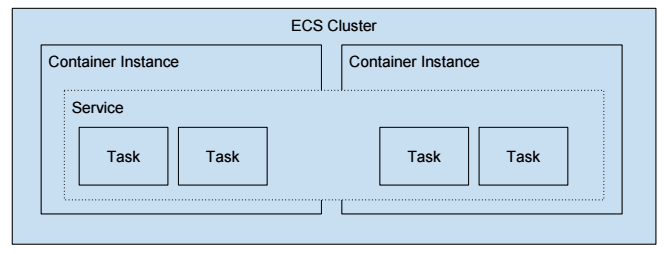

ECS Terms

- Task Definition: A blueprint that describes how one or more Docker containers should launch; in effect, an application made up of one or more containers. If you are already familiar with AWS, it is like a launch configuration, except that it applies to containers instead of EC2 instances. It contains settings such as the Docker image, CPU shares, memory requirements, exposed ports, the command to run, and environment variables. You can also link containers here, similar to the Docker command line.

- Task: A running container (or group of containers) with the settings defined in the task definition. It can be thought of as an “instance” of a task definition.

- Service: Runs and maintains a specified number of tasks (the desired count) from the same task definition; this can be one running container or many. If a task in a service stops, the service launches a replacement, so the desired number of running tasks is maintained. Services can also include settings such as load balancer configuration, IAM roles, and placement strategies.

- Cluster: A logical group of EC2 instances. When an instance launches, the ECS container agent on it registers the instance with an ECS cluster. You choose the cluster by setting the ECS_CLUSTER variable in /etc/ecs/ecs.config.

- Container Instance: An EC2 instance that is part of an ECS cluster and runs Docker and the ECS container agent.

- Container Agent: The agent that runs on EC2 instances to form the ECS cluster. If you use the ECS-optimized AMI, you don't need to install anything, because the agent is included.

- Service Auto Scaling: Similar to EC2 Auto Scaling, but applies to the number of tasks running in each service. The ECS service scheduler maintains the desired count at all times, and a scaling policy can be configured to trigger a scale-out based on alarms.
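The terms above map fairly directly onto API calls. The following is a minimal sketch, assuming boto3's ECS client and an existing cluster backed by EC2 container instances. The task family "web-app", container name "web", service name "web-service", port 80, the APP_ENV variable, and the CPU and memory values are all hypothetical choices, and the client is injected so the code runs without AWS.

```python
from typing import Any, Dict


def web_task_definition(image: str) -> Dict[str, Any]:
    """Keyword arguments for ecs.register_task_definition (names hypothetical)."""
    return {
        "family": "web-app",
        "containerDefinitions": [
            {
                "name": "web",
                "image": image,                  # Docker image to run
                "cpu": 256,                       # CPU units (1024 = 1 vCPU)
                "memory": 512,                    # hard memory limit in MiB
                "essential": True,
                "portMappings": [{"containerPort": 80, "hostPort": 80}],
                "environment": [{"name": "APP_ENV", "value": "production"}],
            }
        ],
    }


def deploy_service(ecs: Any, cluster: str, image: str, desired_count: int) -> Any:
    """Register the task definition, then run it as a service that keeps
    `desired_count` tasks running. `ecs` is expected to be boto3.client("ecs")."""
    registered = ecs.register_task_definition(**web_task_definition(image))
    arn = registered["taskDefinition"]["taskDefinitionArn"]
    return ecs.create_service(
        cluster=cluster,
        serviceName="web-service",
        taskDefinition=arn,
        desiredCount=desired_count,
        launchType="EC2",
    )


def ecs_user_data(cluster: str) -> str:
    """EC2 user data that makes a container instance join `cluster`."""
    return f"#!/bin/bash\necho ECS_CLUSTER={cluster} >> /etc/ecs/ecs.config\n"
```

If one of the service's tasks stops, the scheduler starts a new one from the same task definition ARN to get back to `desired_count`.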

Amazon ECS Use Cases

- Microservices – Amazon ECS helps you run microservices applications with native integration to AWS services and enables continuous integration and continuous deployment (CI/CD) pipelines.

- Batch processing – Amazon ECS lets you run batch workloads with managed or custom schedulers on Amazon EC2 On-Demand Instances, Reserved Instances, or Spot Instances.

- Application migration to the cloud – Legacy enterprise applications can be containerized and easily migrated to Amazon ECS without requiring code changes.

- Machine learning – Amazon ECS makes it easy to containerize ML models for both training and inference. You can create ML models made up of loosely coupled, distributed services that can be placed on any number of platforms, or close to the data that the applications are analyzing.

Amazon ECS Working

Non-AWS Solutions

Infrastructure as Code

- Terraform

- SaltStack

Configuration Management

- Chef

- Puppet

Continuous Integration

- Jenkins

- TeamCity

Hosted Version Control Repositories

- GitHub

- GitLab

General Principles

- Provision infrastructure from code

- Deploy artefacts automatically from version control

- Configuration managed from code and applied automatically

- Scale your infrastructure automatically

- Monitor every aspect of the pipeline and the infrastructure (CloudWatch)

- Logging for every action (CloudWatch Logs and CloudTrail)

- Use instance profiles to attach IAM roles to instances automatically

- Use variables, don’t hard code values

- Tagging can be used with automation to provide more insights about what has been provisioned
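The last two principles can be combined: read deployment settings from variables, then derive a consistent set of tags from them so every provisioned resource is labeled the same way. This Python sketch assumes hypothetical environment variable names (APP_NAME, STAGE, OWNER) and a hypothetical tagging scheme; the Key/Value list shape matches what many AWS APIs, such as EC2 and CloudFormation, accept for tags.

```python
import os
from typing import Dict, List, Mapping


def load_settings(env: Mapping[str, str]) -> Dict[str, str]:
    """Read deployment settings from variables instead of hard-coding them.
    Variable names and defaults are hypothetical."""
    return {
        "app": env.get("APP_NAME", "demo-app"),
        "stage": env.get("STAGE", "dev"),
        "owner": env.get("OWNER", "unknown"),
    }


def standard_tags(settings: Dict[str, str]) -> List[Dict[str, str]]:
    """Build a Key/Value tag list so automation can later find and report
    on what it provisioned."""
    return [
        {"Key": "Application", "Value": settings["app"]},
        {"Key": "Environment", "Value": settings["stage"]},
        {"Key": "Owner", "Value": settings["owner"]},
        {"Key": "ManagedBy", "Value": "automation"},
    ]


if __name__ == "__main__":
    print(standard_tags(load_settings(os.environ)))
```

The same code then serves every environment; only the variables change between dev, staging, and production.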

Updating Your Stack

There are many ways to update your stack.

- You can update your AMIs and then deploy a new environment from them.

- You can use CI tools to deploy the code to existing environments.

- You can use the “blue/green” method to keep one environment running the current production code (blue) and another environment for the next version (green). When it is time to upgrade, traffic is switched from the blue stack to the green stack.
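The blue/green switch can be modeled in a few lines. In this Python sketch, the `Router` class is a hypothetical stand-in for whatever actually directs traffic (for example, a DNS record or a load balancer listener), and `release` assumes the new version has already been deployed to the idle stack and health-checked.

```python
from dataclasses import dataclass


@dataclass
class Router:
    """Hypothetical stand-in for the component that directs user traffic."""
    live: str = "blue"
    idle: str = "green"

    def switch(self) -> str:
        """Send traffic to the idle stack; the previous stack is kept running."""
        self.live, self.idle = self.idle, self.live
        return self.live


def release(router: Router, idle_healthy: bool) -> str:
    """Switch to the freshly deployed idle stack only if it is healthy."""
    if idle_healthy:
        return router.switch()
    return router.live  # keep serving from the current stack


if __name__ == "__main__":
    r = Router()
    print(release(r, idle_healthy=True))  # traffic now goes to green
```

Because the old stack is left intact, rolling back is simply another call to `switch()`.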
