Verifying the levels and storage locations for logs

The AZ-304 exam has been retired and replaced by AZ-305.

In this section, we look at how to configure and verify diagnostics logging for an Azure storage account, and at where and how the logs are stored.

Verifying logs

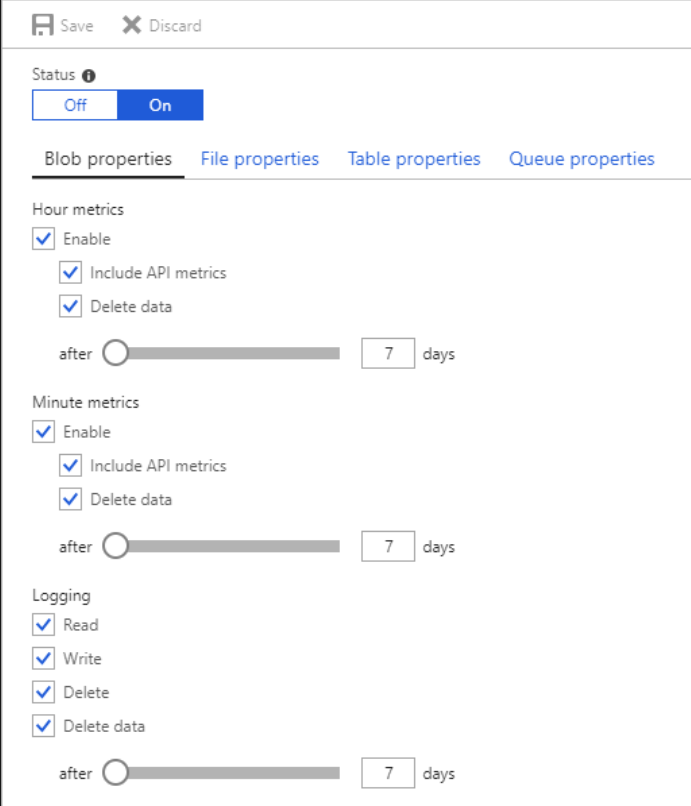

You can configure Azure Storage to save diagnostics logs for read, write, and delete requests. Logging is available for the Blob, Table, and Queue services, and the data retention policy you set also applies to these logs.

Configuring logging

- First, in the Azure portal, select Storage accounts, and then select the name of the storage account to open its blade.

- Next, select Diagnostic settings (classic) in the Monitoring (classic) section of the menu blade.

- Then, ensure that Status is set to On, and select the services for which you want to enable logging.

- Lastly, click Save.
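The portal steps above can also be scripted with the Azure CLI command `az storage logging update`, which takes the services to log (`b` blob, `q` queue, `t` table), the operations to log (`r` read, `w` write, `d` delete), and a retention period in days. The Python sketch below assumes the Azure CLI is installed and signed in. It only assembles the command and does not run it. The helper `build_logging_command` and the account name `mystorageaccount` are hypothetical, so check `az storage logging update --help` for the flags your CLI version supports.

```python
import shlex


def build_logging_command(account_name: str,
                          services: str = "bqt",
                          operations: str = "rwd",
                          retention_days: int = 7) -> list[str]:
    """Assemble (but do not run) an `az storage logging update` command.

    services:       any combination of b (blob), q (queue), t (table)
    operations:     any combination of r (read), w (write), d (delete)
    retention_days: days to keep the logs (0 disables the retention policy)
    """
    # Reject letters the CLI would not accept for these flags.
    if not services or set(services) - set("bqt"):
        raise ValueError("services must combine the letters b, q, t")
    if not operations or set(operations) - set("rwd"):
        raise ValueError("operations must combine the letters r, w, d")
    if retention_days < 0:
        raise ValueError("retention_days must be >= 0")
    return [
        "az", "storage", "logging", "update",
        "--account-name", account_name,
        "--services", services,
        "--log", operations,
        "--retention", str(retention_days),
    ]


if __name__ == "__main__":
    cmd = build_logging_command("mystorageaccount")
    # Print a copy-pasteable shell command; pass `cmd` to subprocess.run to execute it.
    print(shlex.join(cmd))
```

With the defaults, this prints `az storage logging update --account-name mystorageaccount --services bqt --log rwd --retention 7`. The command is returned as a list rather than a single string so that it can be passed to `subprocess.run` without shell quoting issues.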

The diagnostics logs are saved in a blob container named $logs in your storage account. You can view the log data with Microsoft Azure Storage Explorer, the storage client library, or PowerShell.
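After you download a log blob, each line records one request as semicolon-delimited fields. Fields that can themselves contain semicolons, such as the request URL, are enclosed in quotes. The sketch below assumes the version 1.0 field order and reads only the first five fields: version, request start time, operation type, request status, and HTTP status code. It then counts operations by type and status. The helper names and the shortened sample lines are hypothetical, and real entries carry many more fields.

```python
import csv
import io
from collections import Counter

# First five fields of a Storage Analytics log entry (log format version 1.0).
# Any later fields in a line are ignored by this sketch.
FIELDS = ("version", "request_start_time", "operation_type",
          "request_status", "http_status_code")


def parse_log_entries(text: str) -> list[dict[str, str]]:
    """Parse semicolon-delimited log lines; quoted fields may contain ';'."""
    reader = csv.reader(io.StringIO(text), delimiter=";", quotechar='"')
    entries = []
    for row in reader:
        if not row:
            continue  # skip blank lines
        entries.append(dict(zip(FIELDS, row)))  # zip keeps only the named fields
    return entries


def count_operations(entries: list[dict[str, str]]) -> Counter:
    """Count entries per (operation type, request status) pair."""
    return Counter((e["operation_type"], e["request_status"]) for e in entries)


if __name__ == "__main__":
    # Hypothetical, shortened sample lines for illustration only.
    sample = (
        '1.0;2024-05-20T18:02:11.1234567Z;GetBlob;Success;200;18;10;authenticated;'
        'myaccount;myaccount;blob;"https://myaccount.blob.core.windows.net/c/a.txt?x=1;y=2"\n'
        '1.0;2024-05-20T18:05:42.7654321Z;PutBlob;Success;201;25;20;authenticated;'
        'myaccount;myaccount;blob;"https://myaccount.blob.core.windows.net/c/b.txt"\n'
    )
    for (op, status), n in count_operations(parse_log_entries(sample)).items():
        print(op, status, n)
```

The sketch uses the `csv` module rather than `str.split(";")` so that a quoted URL containing a semicolon stays in one field.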

How logs are stored

- All logs are stored in block blobs in a container named $logs, which is created automatically when Storage Analytics is enabled for a storage account. The $logs container is located in the blob namespace of the storage account.

- The $logs container cannot be deleted once Storage Analytics has been enabled, although its contents can be deleted. The container is not returned by container-listing operations such as List Containers. However, if you use your storage-browsing tool to navigate to the container directly, you will see all the blobs that contain your logging data.

- As requests are logged, Storage Analytics uploads intermediate results as blocks. Periodically, it commits these blocks and makes them available as a blob. It can take up to an hour for log data to appear in the blobs in the $logs container, because of how often the storage service flushes the log writers.

- If you have a high volume of log data with multiple files for each hour, you can determine what data each log contains by examining its blob metadata fields. This is also useful because writing data to the log files can be delayed, so the blob metadata gives a more accurate indication of a blob's contents than its name.
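The metadata-based approach above can be sketched in Python. Log blobs in $logs are named `<service-name>/YYYY/MM/DD/hhmm/<counter>.log` (for example, `blob/2024/05/20/1800/000001.log`). Each log blob also carries metadata such as LogType (for example `write` or `read,write,delete`), StartTime and EndTime (in the form `YYYY-MM-DDThh:mm:ssZ`), and LogVersion. This sketch assumes you have already listed the blob names and their metadata, for example with the storage client library, into plain Python dictionaries. It then uses the metadata rather than the hour in the name to choose the blobs that contain a given operation type within a time window. `parse_log_name`, `select_logs`, and the sample values are hypothetical.

```python
import re
from datetime import datetime, timezone

# Log blob names follow <service-name>/YYYY/MM/DD/hhmm/<counter>.log
NAME_RE = re.compile(
    r"^(?P<service>blob|table|queue)/(?P<y>\d{4})/(?P<m>\d{2})/(?P<d>\d{2})/"
    r"(?P<hh>\d{2})(?P<mm>\d{2})/(?P<counter>\d{6})\.log$"
)
TIME_FMT = "%Y-%m-%dT%H:%M:%SZ"  # format of the StartTime/EndTime metadata


def parse_log_name(name: str) -> tuple[str, datetime, int]:
    """Return (service, hour named in the blob, counter) for a log blob name."""
    m = NAME_RE.match(name)
    if m is None:
        raise ValueError(f"not a Storage Analytics log blob name: {name}")
    hour = datetime(int(m["y"]), int(m["m"]), int(m["d"]),
                    int(m["hh"]), int(m["mm"]), tzinfo=timezone.utc)
    return m["service"], hour, int(m["counter"])


def _parse_time(value: str) -> datetime:
    """Parse a StartTime/EndTime metadata value as a UTC datetime."""
    return datetime.strptime(value, TIME_FMT).replace(tzinfo=timezone.utc)


def select_logs(blobs: dict[str, dict[str, str]], operation: str,
                window_start: datetime, window_end: datetime) -> list[str]:
    """Return names of log blobs whose metadata says they hold `operation`
    entries (read, write, or delete) overlapping [window_start, window_end]."""
    selected = []
    for name, metadata in sorted(blobs.items()):
        # Blob metadata keys are case-insensitive, so compare them in lowercase.
        meta = {k.lower(): v for k, v in metadata.items()}
        log_types = {t.strip() for t in meta.get("logtype", "").split(",")}
        if operation not in log_types:
            continue
        start, end = _parse_time(meta["starttime"]), _parse_time(meta["endtime"])
        if start <= window_end and end >= window_start:  # the time ranges overlap
            selected.append(name)
    return selected


if __name__ == "__main__":
    # Hypothetical listing: blob name -> blob metadata
    blobs = {
        "blob/2024/05/20/1800/000000.log": {
            "LogType": "read,write", "StartTime": "2024-05-20T18:00:03Z",
            "EndTime": "2024-05-20T18:41:10Z", "LogVersion": "1.0"},
        "blob/2024/05/20/1800/000001.log": {
            "LogType": "delete", "StartTime": "2024-05-20T18:41:12Z",
            "EndTime": "2024-05-20T18:59:58Z", "LogVersion": "1.0"},
    }
    start = datetime(2024, 5, 20, 18, 30, tzinfo=timezone.utc)
    end = datetime(2024, 5, 20, 18, 45, tzinfo=timezone.utc)
    print(select_logs(blobs, "write", start, end))  # ['blob/2024/05/20/1800/000000.log']
    print(parse_log_name("blob/2024/05/20/1800/000001.log")[2])  # 1
```

Filtering on StartTime and EndTime rather than on the `hhmm` part of the name means a blob is still found when delayed writes place entries outside the hour in its name.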

Reference: Microsoft Documentation
