Ingest Google Professional Data Engineer GCP

- Capture raw data depending on the data’s size, source, and latency

- Various ingest sources

- App: Data from app events, like log files or user events

- Streaming: A continuous stream of small, asynchronous messages.

- Batch: Large amounts of data in set of files to transfer to storage in bulk.

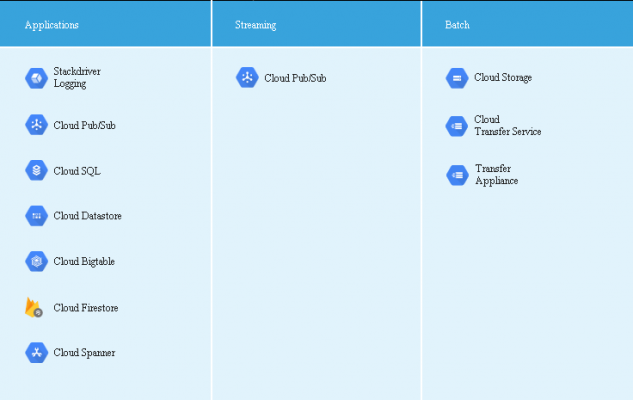

Google Cloud services map for app/streaming and batch workloads –

The data transfer model you choose depends on workload, and each model has different infrastructure requirements.

Ingesting app data

- Consists of apps and services data and includes

- app event logs

- clickstream data

- social network interactions

- e-commerce transactions

- App data helps in showing user trends and gives business insights

- GCP hosts apps from App Engine (managed platform) and Google Kubernetes Engine (GKE – container management).

- Use cases of GCP hosted apps

- Writing data to a file: App outputs batch CSV files to the object store of Cloud Storage then to import function of BigQuery, an data warehouse, for analysis and querying.

- Writing data to a database: App writes data to GCP database service

- Streaming data as messages: App streams data to Pub/Sub and other app, subscribed to the messages, can transfer the data to storage or process it immediately in situations such as fraud detection.

Cloud Logging

- A centralized log management service

- Collects log data from apps running on GCP.

- Export data collected by Cloud Logging and send the data to Cloud Storage, Pub/Sub, and BigQuery.

- Many GCP services automatically record log data to Cloud Logging like App Engine

- Also provide custom logging messages to stdout and stderr

- displays data in the Logs Viewer.

- Involves a logging agent, based on fluentd, which run on VM instances

- Agent streams log data

Ingesting streaming data

- Streaming data is

- delivered asynchronously

- without expecting a reply

- are small in size

- Streaming data can

- fire event triggers

- perform complex session analysis

- be input for ML tasks.

- Streaming Data Use cases

- Telemetry data: Data from network-connected Internet of Things (IoT) devices who gather data about surrounding environment by sensors.

- User events and analytics: Mobile app logging events about app usage, crash, etc

Pub/Sub

- A real-time messaging service

- sends and receives messages between apps

- A use cases is inter-app messaging to ingest streaming event data.

- Pub/Sub automatically manages

- Sharding

- replication

- load-balancing

- partitioning of the incoming data streams.

- Pub/Sub has global endpoints using GCP load balancer, with minimal latency.

- Automatic scaling to meet demand, without pre-provisioning the system resources.

- Message streams re organized as topics.

- Streaming data target a topic

- each message has unique identifier and timestamp.

- After data ingestion, apps can retrieve messages by using a topic subscription in a pull or push model.

- In a push subscription, server sends a request to the subscriber app at a preconfigured URL endpoint.

- In the pull model, the subscriber requests messages from the server and acknowledges receipt.

- Pub/Sub guarantees message delivery at least once per subscriber.

- No guarantees about the order of message delivery.

- For strict message ordering with buffering, use Dataflow for real-time processing

- After processing, move the data into Datastore/BigQuery.

Ingesting bulk data

- Bulk data is

- large datasets

- ingestion needs high aggregate bandwidth between a small sources and the target.

- Data can be

- files (CSV, JSON, Avro, or Parquet files) or in

- a relational database

- NoSQL database

- Source data can be on-premises or on other cloud platforms.

- Use cases

- Scientific workloads

- Migrating to the cloud

- Backing up data or Replication

- Importing legacy data

Storage Transfer Service

- Managed file transfer to a Cloud Storage bucket

- Data source can be

- AWS S3 bucket

- a web-accessible URL

- another Cloud Storage bucket.

- Used for bulk transfer

- Optimized for 1 TB or more data volumes.

- Usually used for backing up data to archive storage bucket

- Supports one-time transfers or recurring transfers.

- Has advanced filters based on file creation dates/filename/times of day

- Supports the deletion of the source data after it’s been copied.

Transfer Appliance:

- A shippable, high-capacity storage server

- It is leased from Google.

- connect it to network, load data and ship to an upload facility.

- Appliance comes in multiple sizes

- Use appliance a per cost and time feasibility for same

- Appliance deduplicates, compresses, and encrypts captured data with strong AES-256 encryption using a password and passphrase given by user. During reading of data from Cloud Storage, same password and passphrase are needed.

gsutil

- A command-line utility

- moves file-based data from any existing file system into Cloud Storage.

- Written in Python and runs on Linux, macOS and Windows.

- It can also

- create and manage Cloud Storage buckets

- edit access rights of objects

- copy objects from Cloud Storage.

Database migration

- For RDBMS data, can migrate to Cloud SQL and Cloud Spanner.

- For Data warehouses data, migrate to

- For NoSQL databases migrate to Bigtable (for column-oriented NoSQL) and Datastore (for JSON-oriented NoSQL).

Google Professional Data Engineer (GCP) Free Practice TestTake a Quiz