Cloud Composer Overview: Google Professional Data Engineer GCP

This article gives an overview of Cloud Composer.

Cloud Composer Overview:

- Cloud Composer is a managed workflow orchestration service built on Apache Airflow.

- It deploys multiple components to run Airflow.

- Composer relies on certain configurations to execute workflows successfully.

- Altering those configurations can have unintended consequences or break the Airflow deployment.

Environments

- Airflow has a microservice architecture.

- To deploy Airflow, Cloud Composer provisions several GCP components, which together are called a Cloud Composer environment.

- You can create one or more Cloud Composer environments inside a project.

- Environments are self-contained Airflow deployments based on GKE.

- Environments work with other Google Cloud services.

- You create Cloud Composer environments in supported regions.

- Environments run within a Compute Engine zone.

- Airflow communicates with other Google Cloud products through the products’ public APIs.

Architecture

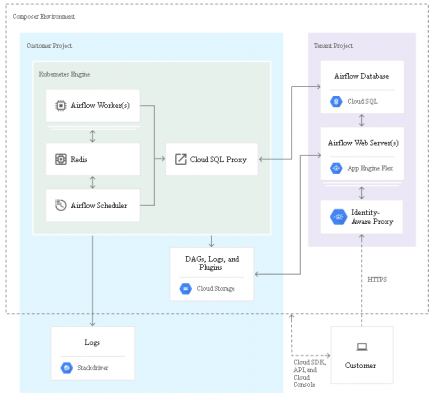

Cloud Composer distributes the environment’s resources between a Google-managed tenant project and a customer project, as shown in the diagram above.

Tenant project resources

- For unified Cloud IAM access control and data security, Cloud Composer deploys Cloud SQL and App Engine in the tenant project.

Cloud SQL

- Cloud SQL stores the Airflow metadata.

- Composer limits database access to the default or the specified custom service account used to create the environment.

- Composer backs up the Airflow metadata daily to minimize potential data loss.

- Only the service account used to create the Composer environment can access data in the Cloud SQL database.

App Engine

- The App Engine flexible environment hosts the Airflow web server.

- By default, the Airflow web server is integrated with Identity-Aware Proxy.

- This integration also lets you use the Cloud Composer IAM policy to manage web server access.

- Composer also supports deploying a self-managed Airflow web server in the customer project.

Customer project resources

Composer deploys the following in the customer project:

- Cloud Storage: provides the storage bucket for staging DAGs, plugins, data dependencies, and logs.

- Google Kubernetes Engine: By default, Cloud Composer deploys core components, such as the Airflow scheduler, worker nodes, and CeleryExecutor, in a GKE cluster. Composer also supports VPC-native clusters using alias IPs.

- Redis, the message broker for the CeleryExecutor, runs as a StatefulSet application so that messages persist across container restarts.

- Cloud Logging and Cloud Monitoring: Composer integrates with Cloud Logging and Cloud Monitoring, so you can view all Airflow service and workflow logs in one place.

Cloud Composer Environment Components

Components for each environment:

- Web server: The web server runs the Apache Airflow web interface, and Identity-Aware Proxy protects the interface.

- Database: The database holds the Apache Airflow metadata.

- Cloud Storage bucket: The bucket stores the DAGs, logs, custom plugins, and data for the environment.
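
As a rough illustration of how the bucket is organized, the sketch below maps each kind of content to a folder in the environment's bucket. The folder names (`dags`, `plugins`, `data`, `logs`) follow the usual Cloud Composer bucket layout. The bucket name, the file name, and the `gcs_path` helper are hypothetical and only serve the example.

```python
# Folder used for each kind of content in an environment's bucket.
BUCKET_FOLDERS = {
    "dag": "dags",
    "plugin": "plugins",
    "data": "data",
    "log": "logs",
}


def gcs_path(bucket: str, kind: str, filename: str) -> str:
    """Return the gs:// URI where a file of the given kind would live."""
    if kind not in BUCKET_FOLDERS:
        raise ValueError(f"unknown content kind: {kind!r}")
    return f"gs://{bucket}/{BUCKET_FOLDERS[kind]}/{filename}"


# "example-composer-bucket" is a made-up bucket name.
print(gcs_path("example-composer-bucket", "dag", "daily_report.py"))
```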

Airflow management:

Use the following Airflow-native tools for management:

- Web interface: Access the Airflow web interface from the Google Cloud Console or by direct URL.

- Command-line tools: Run gcloud composer commands to issue Airflow command-line commands.

- Cloud Composer REST and RPC APIs
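
To make the command-line option more concrete, here is a minimal sketch that assembles a `gcloud composer environments run` invocation, which forwards a subcommand to the Airflow CLI inside the environment. The environment name and region are placeholders, and `composer_run_command` is a hypothetical helper. Which Airflow subcommands are available depends on the Airflow version in the environment; `version` is used here because it takes no arguments.

```python
import shlex


def composer_run_command(environment, location, subcommand, args=()):
    """Build the argv for running an Airflow CLI subcommand via gcloud."""
    argv = [
        "gcloud", "composer", "environments", "run", environment,
        "--location", location,
        subcommand,
    ]
    if args:
        # Arguments after "--" are passed through to the Airflow subcommand.
        argv += ["--", *args]
    return argv


# Environment name and region are placeholders.
cmd = composer_run_command("example-environment", "us-central1", "version")
print(shlex.join(cmd))
```

Building the command as a list rather than a single string keeps each argument separate, so it can be passed to `subprocess.run` without shell quoting issues.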

Airflow configuration:

- The configurations Composer provides for Apache Airflow are the same as those for a locally hosted Airflow deployment.

- Some Airflow configurations are preconfigured, and you cannot change those configuration properties.

- Other configurations can be specified when you create or update an environment.
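
As a hedged sketch of what specifying configurations on update can look like, the snippet below renders a set of overrides into a single `--update-airflow-configs` flag for `gcloud composer environments update`, assuming the `section-option=value` key format. The two options shown are only illustrative choices, and `format_airflow_overrides` is a hypothetical helper.

```python
def format_airflow_overrides(overrides):
    """Render {(section, option): value} as a single gcloud flag."""
    pairs = [
        f"{section}-{option}={value}"
        for (section, option), value in sorted(overrides.items())
    ]
    return "--update-airflow-configs=" + ",".join(pairs)


# Illustrative overrides; whether a given option may be changed
# depends on the environment.
flag = format_airflow_overrides({
    ("core", "dags_are_paused_at_creation"): "True",
    ("webserver", "dag_default_view"): "graph",
})
print(flag)
```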

Airflow DAGs (workflows):

- An Apache Airflow DAG is a workflow: a collection of tasks with additional task dependencies.

- Cloud Storage is used to store DAGs.

- To add or remove DAGs, add or remove the DAG files in the environment's Cloud Storage bucket.

- You can schedule DAGs.

- You can trigger DAGs manually or in response to events.
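
The idea of a DAG as tasks plus dependencies can be sketched without Airflow itself. The example below is not Airflow code: it uses Python's standard `graphlib` module (Python 3.9+) to show how dependencies determine a valid execution order. The task names are made up for illustration.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks it depends on (its upstream tasks).
# Task names are made up for illustration.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "notify": {"load"},
}

# static_order() yields tasks so that every task comes after its dependencies.
# It raises graphlib.CycleError if the graph contains a cycle.
order = list(TopologicalSorter(dependencies).static_order())
print(order)
```

In a real Airflow DAG file the same structure is expressed with operators and dependency declarations, and the scheduler decides when each task runs.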

Plugins

- You can install custom plugins into a Cloud Composer environment.

Python dependencies

- You can install Python dependencies from the Python Package Index (PyPI).

Access control

- You manage security at the Google Cloud project level.

- You assign Cloud IAM roles to control access.

- Without an appropriate Cloud Composer IAM role, a user has no access to any of the environments.

Logging and monitoring:

- You can view the Airflow logs associated with individual DAG tasks in the Airflow web interface and in the logs folder of the associated Cloud Storage bucket.

- Streaming logs are available for Cloud Composer.

- You can access streaming logs in the Logs Viewer in the Google Cloud Console.

- Cloud Composer also has audit logs, such as Admin Activity audit logs, for Google Cloud projects.

Networking and security:

During environment creation, the following configuration options are available:

- Cloud Composer environment with a route-based GKE cluster (default)

- Private IP Cloud Composer environment

- Cloud Composer environment with a VPC Native GKE cluster using alias IP addresses

- Shared VPC

Create a Project

To create a project and enable the Cloud Composer API:

- In the Cloud Console, select or create a project.

- Make sure that billing is enabled for the project.

- To activate the Cloud Composer API in a new or existing project, go to the API Overview page for Cloud Composer.

- Click Enable.

Cloud Composer Versioning

- Airflow follows the semantic versioning scheme (MAJOR.MINOR.PATCH).

- Composer supports the last two stable minor Airflow releases and the latest two patch versions for each of those minor releases.
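
The support rule above can be illustrated with a small sketch that selects, from a list of release numbers, the newest two minor releases and the newest two patch versions of each. The release list is made up and does not reflect actual Airflow releases, and `parse_version` and `supported_versions` are hypothetical helpers.

```python
from collections import defaultdict


def parse_version(text):
    """Split 'MAJOR.MINOR.PATCH' into a tuple of integers."""
    major, minor, patch = (int(part) for part in text.split("."))
    return major, minor, patch


def supported_versions(releases, minors=2, patches=2):
    """Keep the newest `patches` patch versions of the newest `minors` minor releases."""
    by_minor = defaultdict(list)
    for version in map(parse_version, releases):
        by_minor[version[:2]].append(version)
    newest_minors = sorted(by_minor, reverse=True)[:minors]
    kept = []
    for minor in newest_minors:
        kept += sorted(by_minor[minor], reverse=True)[:patches]
    return [".".join(map(str, v)) for v in kept]


# Made-up release list, not actual Airflow releases.
releases = ["1.9.0", "1.10.1", "1.10.2", "1.10.3", "1.11.0", "1.11.1", "1.11.2"]
print(supported_versions(releases))
```

Versions are compared as integer tuples rather than strings, so that 1.10 correctly sorts after 1.9.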
